What is Symbolic AI? A concise framing

In an era dominated by deep learning and large language models, the principles of Symbolic AI are experiencing a significant resurgence. Also known as “Good Old-Fashioned AI” (GOFAI), Symbolic AI is a branch of artificial intelligence research that focuses on manipulating high-level, human-readable representations of problems, logic, and knowledge. Instead of learning statistical patterns from vast amounts of data, as neural networks do, it operates on explicit symbols and rules.

Think of the difference this way: a neural network learns to identify a cat by analyzing thousands of labeled cat images and extracting statistical patterns from their pixels. A Symbolic AI system, on the other hand, identifies a cat by applying explicit rules to explicit facts. Given the rule “anything that is a domesticated feline is a cat” and the facts “this entity is a feline” and “this entity is domesticated,” it logically deduces that the entity is a cat; adding the rules “a cat is a mammal” and “mammals have fur” lets it further conclude that the entity is a mammal with fur. Every step in that chain can be inspected. This fundamental difference in approach gives Symbolic AI unique strengths, particularly in domains requiring transparency, formal verification, and structured reasoning.

Foundational Concepts and Historical Milestones

The foundations of Symbolic AI are rooted in mathematical logic, philosophy, and computer science. It was the dominant paradigm for decades, leading to the development of expert systems, logic programming, and semantic technologies that remain influential today.

Logic, Rules and Formal Reasoning

At its core, Symbolic AI uses formal logic to represent the world and reason about it. This involves:

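- Propositional and First-Order Logic: These are formal languages for making precise statements. Propositional logic combines whole statements (propositions) using connectives such as AND, OR, NOT, and IMPLIES; first-order logic adds predicates, variables, and quantifiers (∀ “for all,” ∃ “there exists”) so that statements can describe objects and their relationships. For instance, a rule might be expressed as ∀x (IsARobot(x) → MustObeyLaws(x)), meaning “For all x, if x is a robot, then x must obey the laws.”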

- Inference Engines: These are the “brains” of a symbolic system. An inference engine is a software component that applies logical rules to an existing knowledge base to deduce new information. It can use techniques like forward chaining (starting with known facts to derive new conclusions) or backward chaining (starting with a goal and working backward to find supporting facts).

- Rule-Based Systems: These systems, the best known of which are expert systems, use a set of “IF-THEN” rules elicited from domain experts to solve problems. Expert systems were successfully applied in fields like medical diagnosis and financial analysis.
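To make forward and backward chaining concrete, here is a minimal, standard-library-only Python sketch of a propositional rule engine. The rule names R1 to R3 and the facts are illustrative and echo the cat example in the introduction; real inference engines add variables, unification, and far more efficient rule matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An IF-THEN rule: if every premise holds, the conclusion holds."""
    name: str
    premises: frozenset[str]
    conclusion: str


# Illustrative rule base (hypothetical names).
RULES = [
    Rule("R1", frozenset({"feline", "domesticated"}), "cat"),
    Rule("R2", frozenset({"cat"}), "mammal"),
    Rule("R3", frozenset({"mammal"}), "has_fur"),
]


def forward_chain(facts, rules):
    """Data-driven: fire every rule whose premises are all known, until nothing new appears."""
    known = set(facts)
    trace = []  # (rule name, derived fact), in firing order
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.premises <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append((rule.name, rule.conclusion))
                changed = True
    return known, trace


def backward_chain(goal, facts, rules, seen=frozenset()):
    """Goal-driven: prove `goal` directly from facts, or via a rule whose premises are all provable."""
    if goal in facts:
        return True
    if goal in seen:  # guard against cycles in the rule base
        return False
    return any(
        rule.conclusion == goal
        and all(backward_chain(p, facts, rules, seen | {goal}) for p in rule.premises)
        for rule in rules
    )
```

For example, `forward_chain({"feline", "domesticated"}, RULES)` derives `cat`, `mammal`, and `has_fur` and records which rule produced each, while `backward_chain("has_fur", {"feline", "domesticated"}, RULES)` starts from the goal and works back to the same facts. The recorded trace is what makes the conclusion auditable.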

Knowledge representation and ontologies

How knowledge is structured is paramount in Symbolic AI. Knowledge representation is the field dedicated to representing information about the world in a form that a computer system can utilize to solve complex tasks.

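- Ontologies: These are formal specifications of a domain’s concepts, properties, and the relationships between them. They provide a shared vocabulary and a structural framework for organizing knowledge. The W3C standards Resource Description Framework (RDF) and Web Ontology Language (OWL) are widely used here: RDF supplies the underlying data model of subject-predicate-object triples, while RDF Schema (RDFS) and OWL add the vocabulary for defining classes, properties, and their relationships.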

- Knowledge Graphs: A practical application of these principles, knowledge graphs represent entities (nodes) and their relationships (edges) in a graph structure. Google’s Knowledge Graph, for example, supplies the information panels shown alongside its search results, and knowledge graphs are increasingly used to give machine learning models structured context.
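The sketch below shows, in plain Python, how a knowledge graph stored as RDF-style triples supports inference over an ontology’s class hierarchy. It mirrors two RDFS entailment patterns (subclass transitivity, and propagating an entity’s type to its superclasses). The entities and predicate strings are hypothetical; a real system would use IRIs such as `rdf:type` and `rdfs:subClassOf`, typically via a library like `rdflib`, rather than bare strings.

```python
# Triples are (subject, predicate, object) tuples, as in RDF.
TRIPLES = {
    ("Tom", "type", "Cat"),
    ("Cat", "subClassOf", "Mammal"),
    ("Mammal", "subClassOf", "Animal"),
    ("Tom", "livesWith", "Alice"),
    ("Alice", "type", "Person"),
}


def objects(triples, subject, predicate):
    """All o such that (subject, predicate, o) is in the graph."""
    return {o for s, p, o in triples if s == subject and p == predicate}


def superclasses(triples, cls):
    """Transitive closure of subClassOf, starting from (and including) cls."""
    result, frontier = {cls}, [cls]
    while frontier:
        for parent in objects(triples, frontier.pop(), "subClassOf"):
            if parent not in result:
                result.add(parent)
                frontier.append(parent)
    return result


def types_of(triples, entity):
    """Asserted types plus every type inferred through the class hierarchy."""
    inferred = set()
    for cls in objects(triples, entity, "type"):
        inferred |= superclasses(triples, cls)
    return inferred
```

Here `types_of(TRIPLES, "Tom")` returns `Cat`, `Mammal`, and `Animal`, even though only `("Tom", "type", "Cat")` was stated explicitly: the ontology’s structure does the rest.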

Where Symbolic Approaches Excel and Where They Falter

No single AI paradigm is a silver bullet. Understanding the trade-offs between symbolic and connectionist (neural) approaches is key to building effective, robust systems.

| Strengths of Symbolic AI | Weaknesses of Symbolic AI |
|---|---|
| Explainability and Transparency: The reasoning process is explicit and auditable. You can trace every conclusion back to the specific rules and facts used to derive it. | Brittleness: Symbolic systems struggle with ambiguity, noise, or incomplete information. If a situation doesn’t perfectly match a pre-defined rule, the system may fail. |
| Formal Verification: Because it is based on formal logic, a symbolic system’s behavior can be mathematically proven to be correct with respect to its specifications, which is critical for safety-critical applications. | Knowledge Acquisition Bottleneck: Manually encoding complex domain knowledge into explicit rules is a time-consuming, expensive, and difficult process that requires deep domain expertise. |
| Data Efficiency: It can reason effectively from a small set of high-quality facts and rules, whereas neural networks often require massive datasets for training. | Scalability: As the number of rules and facts grows, the computational complexity of logical inference can become intractable. |
| Compositionality: Symbolic representations are inherently compositional, meaning complex concepts can be built from simpler ones, enabling systematic generalization. | Poor Perceptual Capabilities: It is not well-suited for processing raw, unstructured data like images, audio, or natural language text directly. |

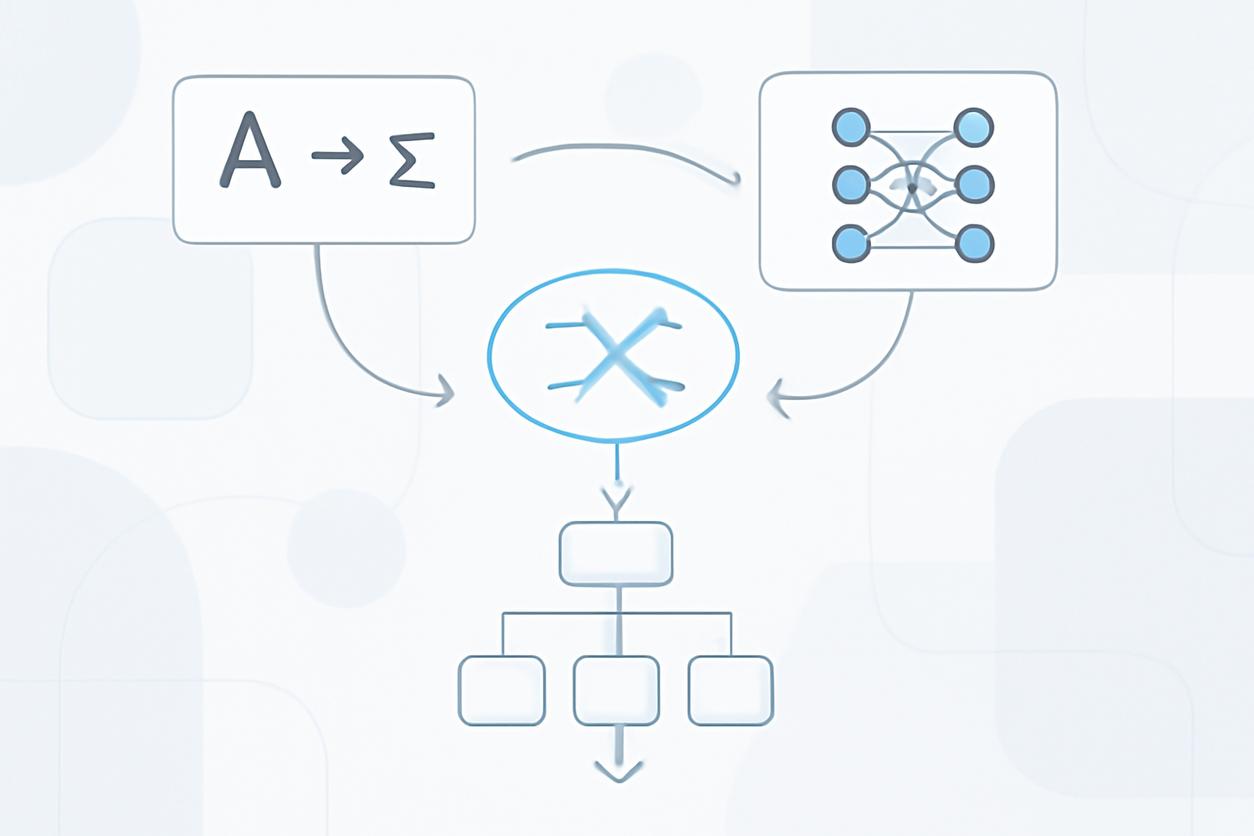

Neuro-symbolic Architectures: Bridging Symbols and Vectors

The future of sophisticated AI lies not in choosing one paradigm over the other, but in their synthesis. Neuro-symbolic AI aims to combine the robust learning capabilities of neural networks with the reasoning and transparency of Symbolic AI. The goal of this hybrid approach is to offset the weaknesses of each paradigm with the strengths of the other.

Design patterns for hybrid systems

Several integration patterns have emerged for building these hybrid systems, most of them emphasizing modularity and explainability:

- Neural-to-Symbolic (Perception to Reasoning): A neural network first processes unstructured data (e.g., text or images) to extract structured information or symbolic representations. A symbolic reasoner then uses this output to perform high-level reasoning, planning, or validation.

- Symbolic-to-Neural (Knowledge-Guided Learning): Symbolic knowledge, such as a knowledge graph or a set of logical constraints, is used to guide the training of a neural network. This can improve data efficiency, enforce constraints, and lead to more generalizable models.

- Symbolic in the Loop (Co-Reasoning): Both systems work iteratively. A neural model might propose a solution, which a symbolic system critiques or refines. This feedback loop allows the system to correct its own errors and explore a solution space more effectively.
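The co-reasoning pattern can be sketched as a propose-and-critique loop. In this minimal Python example, a hard-coded score table stands in for a neural model (an assumption for illustration; no real model is involved), and two hypothetical scheduling constraints play the role of the symbolic critic. Each rejection is fed back so the proposer never repeats a refuted candidate.

```python
from typing import Callable, Optional

# Hypothetical stand-in for a neural model: (room, hour) -> confidence score.
MODEL_SCORES = {("A", 9): 0.92, ("B", 18): 0.85, ("B", 10): 0.71, ("A", 14): 0.40}

BOOKED = {("A", 9)}  # facts the symbolic critic reasons over

# Symbolic constraints: each returns an explanation if violated, else None.
CONSTRAINTS: list[Callable[[tuple], Optional[str]]] = [
    lambda slot: "room already booked" if slot in BOOKED else None,
    lambda slot: "outside working hours (9-17)" if not 9 <= slot[1] < 17 else None,
]


def propose(rejected: set) -> Optional[tuple]:
    """'Neural' step: return the highest-scoring slot not yet rejected."""
    remaining = [s for s in MODEL_SCORES if s not in rejected]
    return max(remaining, key=MODEL_SCORES.get, default=None)


def critique(slot: tuple) -> list[str]:
    """Symbolic step: collect an explanation for every violated constraint."""
    results = (check(slot) for check in CONSTRAINTS)
    return [msg for msg in results if msg is not None]


def co_reason(max_rounds: int = 10):
    """Alternate proposal and critique until a proposal passes every constraint."""
    rejected, log = set(), []
    for _ in range(max_rounds):
        slot = propose(rejected)
        if slot is None:
            break
        errors = critique(slot)
        log.append((slot, errors))
        if not errors:
            return slot, log
        rejected.add(slot)  # feedback: the critique prunes the proposer's options
    return None, log
```

Running `co_reason()` rejects the two top-scoring slots with explicit reasons before accepting `("B", 10)`; the log doubles as an explanation of why the higher-scored options were discarded.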

Example workflows and data flows

Consider a financial compliance system designed to detect money laundering. A purely neural approach might flag transactions based on learned patterns but struggle to explain *why* a transaction is suspicious in regulatory terms. A neuro-symbolic workflow pairs the neural model’s pattern detection with explicit, auditable rules:

- Data Ingestion: Transaction data (amounts, parties, locations) flows into the system.

- Neural Pattern Recognition: A trained neural network analyzes the data, identifying anomalous patterns and flagging potentially suspicious transactions. It excels at this fuzzy, pattern-matching task.

- Symbolic Extraction: The flagged transaction data is converted into a symbolic representation (e.g., facts like `Transaction(T1, Sender_A, Receiver_B, 15000_USD, HighRisk_Country)`).

- Symbolic Reasoning: An inference engine applies a formal rule-set based on anti-money laundering (AML) regulations (e.g., “IF Transaction_Amount > 10000_USD AND involves HighRisk_Country THEN Flag_For_Review”).

- Explainable Output: The system outputs not just a flag but a clear, human-readable explanation: “Transaction T1 flagged for review because it exceeds the $10,000 threshold and involves a high-risk jurisdiction, triggering AML Regulation 3.1a.”
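The extraction, reasoning, and explanation steps above can be sketched in a few lines of Python. This is a minimal illustration, not a compliance implementation: the jurisdiction list, the `Transaction` fields, and the country names are hypothetical, and the single rule encodes only the example condition from the workflow.

```python
from dataclasses import dataclass

# Hypothetical values, for illustration only.
HIGH_RISK_COUNTRIES = {"Country_X", "Country_Y"}
THRESHOLD_USD = 10_000


@dataclass(frozen=True)
class Transaction:
    """Symbolic fact extracted from a transaction the neural model flagged."""
    tx_id: str
    sender: str
    receiver: str
    amount_usd: int
    country: str


def aml_rule_3_1a(tx):
    """IF amount > 10,000 USD AND a high-risk country is involved THEN flag for review.

    Returns (fired, satisfied conditions) so the explanation can cite each condition.
    """
    conditions = []
    if tx.amount_usd > THRESHOLD_USD:
        conditions.append(
            f"amount {tx.amount_usd:,} USD exceeds the {THRESHOLD_USD:,} USD threshold"
        )
    if tx.country in HIGH_RISK_COUNTRIES:
        conditions.append(f"involves high-risk jurisdiction {tx.country}")
    return len(conditions) == 2, conditions


def explain(tx):
    """Turn the rule's outcome into a human-readable explanation."""
    fired, conditions = aml_rule_3_1a(tx)
    if not fired:
        return f"Transaction {tx.tx_id}: no AML rule fired."
    return (
        f"Transaction {tx.tx_id} flagged for review under AML Regulation 3.1a: "
        + " and ".join(conditions)
        + "."
    )
```

Calling `explain(Transaction("T1", "Sender_A", "Receiver_B", 15_000, "Country_X"))` produces a sentence that names both satisfied conditions, so a reviewer sees exactly which facts triggered the flag.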

Implementing Symbolic Components Today

Integrating Symbolic AI into modern tech stacks is more accessible than ever, thanks to a variety of mature and emerging tools.

Languages, libraries and tooling overview

- Logic Programming Languages: Prolog remains the quintessential language for symbolic reasoning, alongside Datalog, a restricted subset of Prolog-style logic programming whose queries are guaranteed to terminate. Their declarative nature makes them well suited to expressing complex rules.

- Python Libraries: For teams working within the Python ecosystem, libraries like `SymPy` (for symbolic mathematics) and various Datalog or Prolog implementations (e.g., `pyDatalog`) allow for the integration of rule-based components directly into data science workflows.

- Knowledge Graph Technologies: Tools like Neo4j (graph database) and libraries for handling RDF (e.g., `rdflib` in Python) are widely used to build and query the knowledge representations that fuel symbolic reasoning.
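Datalog engines evaluate recursive rules bottom-up until no new facts appear. The following standard-library-only sketch hand-codes semi-naive evaluation for one recursive rule; it illustrates the technique rather than the API of `pyDatalog` or any other library, and the function name and data are hypothetical.

```python
def ancestors(parent_facts):
    """Evaluate the Datalog program

        ancestor(X, Y) :- parent(X, Y).
        ancestor(X, Z) :- parent(X, Y), ancestor(Y, Z).

    bottom-up with semi-naive iteration: each round joins only the facts that
    were new in the previous round, and evaluation stops at a fixpoint.
    """
    # Index parent(X, Y) by Y so we can find every X with parent(X, Y).
    parents_of = {}
    for x, y in parent_facts:
        parents_of.setdefault(y, set()).add(x)

    ancestor = set(parent_facts)  # base rule
    delta = set(parent_facts)     # facts derived in the most recent round
    while delta:
        new = {
            (x, z)
            for (y, z) in delta
            for x in parents_of.get(y, ())
        } - ancestor
        ancestor |= new
        delta = new
    return ancestor
```

With `parent` facts `(ann, bob)`, `(bob, cid)`, and `(cid, dan)`, the loop runs three rounds and returns six `ancestor` facts, including `(ann, dan)`. Joining only the previous round’s new facts (the “delta”) avoids re-deriving known facts, which is the main efficiency idea behind semi-naive evaluation.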

Integration with neural pipelines

Connecting a symbolic component to a neural pipeline typically involves a well-defined interface. A common approach is to use a REST API. The neural model (e.g., a PyTorch-based microservice) can make a call to a symbolic reasoning service (e.g., a Prolog-based API) by passing structured data like JSON. The symbolic service executes its logic and returns a structured response, including the decision and the reasoning trace, which can then be consumed by the downstream application.
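As a minimal sketch of that interface, the following standard-library Python service accepts a JSON payload of facts and returns a decision together with its reasoning trace. The rule table, fact names, and payload shape (`{"facts": {...}}`) are hypothetical choices for illustration; a Prolog-backed service would expose the same kind of contract.

```python
import json
from http.server import BaseHTTPRequestHandler

# Hypothetical rule set: (rule id, fact name, threshold, human-readable reason).
RULES = [
    ("R1", "anomaly_score", 0.9, "neural anomaly score above 0.9"),
    ("R2", "amount_usd", 10_000, "amount above 10,000 USD"),
]


def reason(payload: dict) -> dict:
    """Symbolic service logic: structured facts in, decision and reasoning trace out."""
    facts = payload.get("facts", {})
    trace = [
        {"rule": rule_id, "fact": name, "value": facts[name], "reason": text}
        for rule_id, name, threshold, text in RULES
        if name in facts and facts[name] > threshold
    ]
    return {"decision": "review" if trace else "pass", "trace": trace}


class ReasonerHandler(BaseHTTPRequestHandler):
    """Thin HTTP wrapper: POST a JSON body such as {"facts": {...}}, get JSON back."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            result, status = reason(json.loads(self.rfile.read(length))), 200
        except (ValueError, AttributeError, TypeError) as exc:  # malformed request
            result, status = {"error": str(exc)}, 400
        body = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To serve it, one could run `HTTPServer(("127.0.0.1", 8080), ReasonerHandler).serve_forever()` (with `HTTPServer` imported from `http.server`; the port is arbitrary). The neural service would then POST, say, `{"facts": {"anomaly_score": 0.95, "amount_usd": 15000}}` and receive the decision plus the rules that fired. Keeping `reason` separate from the HTTP layer makes the rules testable without a network; for production, Python’s documentation advises against `http.server`, so a web framework would be the usual host.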

Evaluation, Benchmarking and Metrics

Evaluating a symbolic or neuro-symbolic system requires going beyond standard machine learning metrics like accuracy or F1-score. Key evaluation criteria include:

- Logical Soundness and Completeness: Does the system only derive valid conclusions (soundness)? Can it derive all valid conclusions (completeness)?

- Quality of Explanation: How clear, concise, and useful is the explanatory trace provided by the system? This can often be measured via user studies.

- Robustness to Adversarial Inputs: How well does the system handle inputs designed to fool it? Symbolic constraints can often make a system more robust.

- Computational Efficiency: How does the reasoning time scale with the size of the knowledge base and the complexity of the query?
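Soundness and completeness are properties one proves about an inference procedure, but during testing they are often checked empirically against a reference set of facts known to be entailed. The minimal sketch below (function name and data are illustrative) computes the two rates, which amount to precision and recall over derived facts. A rate below 1.0 on any test case disproves the property; a rate of 1.0 across tests is only evidence for it.

```python
def soundness_completeness(derived: set, entailed: set) -> tuple[float, float]:
    """Compare a reasoner's output with a reference set of entailed facts.

    soundness    = share of derived facts that are actually entailed
    completeness = share of entailed facts the reasoner managed to derive
    """
    correct = derived & entailed
    soundness = len(correct) / len(derived) if derived else 1.0
    completeness = len(correct) / len(entailed) if entailed else 1.0
    return soundness, completeness


# Reference: all ancestor facts entailed by parent(ann, bob) and parent(bob, cid).
ENTAILED = {("ann", "bob"), ("bob", "cid"), ("ann", "cid")}

# A reasoner that returned only the base facts: sound, but incomplete.
DERIVED = {("ann", "bob"), ("bob", "cid")}
```

Here `soundness_completeness(DERIVED, ENTAILED)` reports perfect soundness (nothing invalid was derived) but only two-thirds completeness, because the recursive conclusion `("ann", "cid")` was missed.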

Governance, Transparency and Ethical Considerations

The black-box nature of many deep learning models presents significant challenges for governance, fairness, and accountability. Symbolic components help address these challenges: because their decision-making process is explicit, they facilitate:

- Auditing and Compliance: Regulators can audit the system’s logic to ensure it complies with laws and standards.

- Bias Detection and Mitigation: Biased rules can be identified and corrected directly, rather than trying to retrain a massive model on a “de-biased” dataset.

- User Trust: When a system can explain its reasoning (e.g., “Your loan was denied because your debt-to-income ratio exceeds 40%, per rule L-27B”), users are more likely to trust and accept its decisions.
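The following minimal Python sketch shows how explicit rules support both explanation and auditing. Rule L-27B and its 40% threshold come from the example above; rule L-31, the field names, and the list of protected attributes are hypothetical, with L-31 deliberately biased so the audit has something to find.

```python
# Hypothetical rule base. Each rule declares which applicant fields it reads,
# so an auditor can inspect it without running any model.
RULES = [
    {"id": "L-27B", "reads": {"debt", "income"},
     "reason": "debt-to-income ratio exceeds 40%",
     "denies": lambda a: a["debt"] > 0.40 * a["income"]},
    {"id": "L-31", "reads": {"age"},
     "reason": "applicant is younger than 25",
     "denies": lambda a: a["age"] < 25},
]

PROTECTED = {"age", "gender", "ethnicity"}  # illustrative list only


def decide(applicant, rules=RULES):
    """Return the decision plus the id and reason of every rule that fired."""
    fired = [(r["id"], r["reason"]) for r in rules if r["denies"](applicant)]
    return ("denied" if fired else "approved"), fired


def audit(rules, protected=PROTECTED):
    """Map each rule that reads a protected attribute to the attributes it reads."""
    return {r["id"]: sorted(r["reads"] & protected) for r in rules if r["reads"] & protected}
```

`audit(RULES)` points directly at `L-31` as reading `age`; correcting the bias is then a matter of editing or removing that rule (for example, `[r for r in RULES if r["id"] not in audit(RULES)]`) rather than retraining a model. Meanwhile, `decide` returns the cited rule alongside every denial, which is the kind of explanation that builds user trust.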

Practical Case Studies and Scenarios

Neuro-symbolic approaches are being applied and explored in complex domains such as these:

- Advanced Medical Diagnostics: A system combines a convolutional neural network (CNN) for analyzing medical scans (X-rays, MRIs) with a medical knowledge graph. The CNN identifies potential anomalies, and the symbolic reasoner cross-references these findings with the patient’s history and a vast ontology of diseases and symptoms to provide a differential diagnosis with supporting evidence.

- Autonomous Robotics and Planning: A robot uses deep learning for object recognition and navigation but relies on a symbolic planner to make high-level decisions. For example, it might use logic to ensure its planned path never violates safety constraints, like crossing a designated hazardous zone.

- Scientific Discovery: Researchers can use a system that ingests scientific papers with a neural language model and extracts key findings as symbolic facts. A symbolic reasoner can then search for novel connections and formulate new hypotheses by combining information across thousands of papers.
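The safety-constrained planning in the robotics scenario can be sketched in a few lines. In this minimal Python example, the grid map, cell codes, and function names are hypothetical: a breadth-first search plans only through cells that satisfy an explicit safety predicate, and a separate `verify` function checks any path (for instance, one proposed by a learned navigation policy) against the same constraint.

```python
from collections import deque

# Hypothetical 4-row by 5-column map: '.' free, '#' obstacle, 'H' hazardous zone.
GRID = [
    "..H..",
    ".#H#.",
    ".....",
    "..H..",
]


def neighbours(cell):
    """Yield the in-bounds 4-connected neighbours of a (row, col) cell."""
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(GRID) and 0 <= nc < len(GRID[0]):
            yield nr, nc


def safe(cell):
    """Symbolic safety constraint: never enter an obstacle or a hazardous zone."""
    return GRID[cell[0]][cell[1]] not in "#H"


def plan(start, goal):
    """Breadth-first search restricted to cells that satisfy the safety constraint."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for nxt in neighbours(cell):
            if safe(nxt) and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None  # no safe path exists


def verify(path):
    """Check that every cell is safe and every step moves to an adjacent cell."""
    return all(safe(c) for c in path) and all(
        abs(a[0] - b[0]) + abs(a[1] - b[1]) == 1 for a, b in zip(path, path[1:])
    )
```

`plan((0, 0), (0, 4))` detours through the middle row instead of crossing the hazardous cell at `(0, 2)`, and `verify` rejects any path, however it was produced, that steps into an `H` cell. Separating the constraint (`safe`) from the search is what lets the same rule both guide planning and audit a neural policy’s output.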

Research Frontiers and Open Challenges

The field of Symbolic AI and its integration with neural networks is vibrant and active. Key open challenges include:

- Scalable Reasoning: Developing new algorithms that can perform logical inference over massive knowledge graphs efficiently.

- Automated Knowledge Acquisition: Creating better methods for automatically extracting high-quality symbolic knowledge from unstructured data, thus overcoming the knowledge acquisition bottleneck.

- Commonsense Reasoning: Endowing AI systems with the vast background knowledge and implicit understanding of the world that humans possess.

- Learning Symbolic Rules: Developing neural architectures that can not only follow rules but also learn new, explicit symbolic rules from data, effectively learning to reason.
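To illustrate what “learning an explicit rule” means, here is a deliberately simple, non-neural sketch: an exhaustive search for the shortest conjunctive rule consistent with labelled examples, in the spirit of inductive logic programming. The examples and feature names are hypothetical. Research systems replace this brute-force search, whose cost grows combinatorially with the number of features, with heuristic or differentiable search.

```python
from itertools import combinations

# Hypothetical labelled examples: (feature set, is the entity a cat?).
EXAMPLES = [
    ({"feline", "domesticated", "has_fur"}, True),
    ({"feline", "domesticated", "has_fur", "long_tail"}, True),
    ({"feline", "has_fur"}, False),        # e.g. a wild cat species
    ({"domesticated", "has_fur"}, False),  # e.g. a dog
    ({"has_fur", "long_tail"}, False),
]


def learn_rule(examples, max_size=3):
    """Return the smallest feature conjunction that covers every positive
    example and no negative one, i.e. a rule 'IF f1 AND f2 ... THEN positive'.
    Returns None if no such conjunction of up to max_size features exists."""
    features = sorted(set().union(*(feats for feats, _ in examples)))
    for size in range(1, max_size + 1):
        for body in combinations(features, size):
            covers = [set(body) <= feats for feats, _ in examples]
            if all(c == label for c, (_, label) in zip(covers, examples)):
                return set(body)
    return None
```

On these examples the search returns `{"domesticated", "feline"}`, that is, the explicit rule “IF feline AND domesticated THEN cat”, which a person can read, check, and reuse in a rule engine.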

Key Takeaways and Further Resources

The dichotomy between statistical and symbolic AI is dissolving. The most powerful and trustworthy AI systems of the future will be hybrids that leverage the strengths of both. For technical leaders, data scientists, and researchers, embracing this neuro-symbolic perspective is no longer a niche interest but a strategic necessity.

Key takeaways include:

- Symbolic AI offers strong transparency, reliability, and data efficiency for tasks involving explicit knowledge and reasoning.

- Its weaknesses in handling perceptual data and its historical brittleness can be offset by pairing it with modern neural networks.

- Neuro-symbolic architectures provide practical patterns for building systems that can learn from data and reason with knowledge.

- The explainability of symbolic components is a critical enabler for ethical AI governance and building user trust.

As we move forward, the ability to architect systems that can fluidly combine sub-symbolic pattern recognition with high-level symbolic reasoning will be a key differentiator for creating truly intelligent applications.