AI Innovation: A Strategic Roadmap for 2025 and Beyond

Table of Contents

- Executive Summary

- Reframing AI Innovation Today

- Core Building Blocks of Modern AI Systems

- Data Foundations and Feature Engineering

- From Prototype to Robust Deployment

- Governance, Ethics, and Security by Design

- Measuring Value and Optimization Strategies

- Roadmap Template for Phased Adoption

- Case Neutral Examples and Learning Templates

- Common Pitfalls and Mitigation Tactics

- Conclusion and Next Steps

- Appendix: Technical Resources and Further Reading

Executive Summary

Artificial Intelligence (AI) has transcended its origins in academic research to become a primary driver of business transformation and competitive advantage. True AI innovation is no longer about isolated experiments but about building a cohesive, scalable, and responsible AI ecosystem. This whitepaper serves as a strategic roadmap for technology leaders, data scientists, and strategists aiming to harness the full potential of AI. We trace the journey from foundational models to value-driven deployment, offering a cross-disciplinary guide that integrates modern architectures with essential governance frameworks. Our focus is on creating a repeatable and measurable process for sustainable AI innovation, equipping your organization with the strategy needed to thrive in 2025 and beyond.

Reframing AI Innovation Today

The conversation around AI innovation has matured significantly. Early initiatives often focused on achieving state-of-the-art performance on narrow tasks, a necessary but insufficient step for enterprise adoption. Today, the challenge is systemic. It involves weaving AI capabilities into the fabric of the organization, ensuring models are not only accurate but also fair, transparent, and aligned with core business objectives. This requires a paradigm shift from a model-centric view to a system-centric one, where data pipelines, infrastructure, governance, and user feedback loops are all first-class citizens in the AI lifecycle. Lasting AI innovation is an organizational discipline, not just a technical one.

Core Building Blocks of Modern AI Systems

At the heart of any AI strategy lies a set of powerful technologies. Understanding these core components is essential for making informed decisions about where to invest and how to build.

Neural Networks and Advanced Architectures

The foundation of modern deep learning is the artificial neural network, a computational model loosely inspired by the human brain. Although it remains the basic building block, the field has evolved rapidly, and today’s AI innovation leverages more sophisticated architectures:

- Transformer Models: Originally designed for natural language tasks, Transformers are built around an attention mechanism that has proven broadly effective; they now power everything from language models to computer vision.

- Convolutional Neural Networks (CNNs): A long-established architecture for image and video analysis, widely used in visual search, medical imaging, and autonomous systems.

- Graph Neural Networks (GNNs): Ideal for understanding complex relationships and networks, with applications in fraud detection, recommendation engines, and supply chain optimization.
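
The attention mechanism behind Transformers can be illustrated with scaled dot-product attention, which weights each value vector by softmax(q · k / √d_k) over all keys. The sketch below is a minimal, single-head illustration in plain Python using toy, hand-picked vectors (an assumption for readability); it omits the learned query/key/value projections, masking, and multi-head structure of a real Transformer, which production systems implement with tensor libraries.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return the softmax(q.k / sqrt(d_k))-weighted average of the values."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k) so scores
        # do not grow with the vector dimension.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of value vectors, one output dimension at a time.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Toy example (illustrative numbers): one query, two key/value pairs of dimension 2.
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
result = scaled_dot_product_attention(queries, keys, values)
print(result)  # the query aligns with the first key, so the first value dominates
```

Because the attention weights always sum to 1, each output is a convex blend of the value vectors; the query "attends" most to whichever keys it resembles.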

Large Language Models and Generative Approaches

Large Language Models (LLMs) and Generative AI have captured the world’s attention. These models, trained on vast datasets, can understand and generate human-like text, images, code, and more. Their application is a major frontier for AI innovation, enabling capabilities like advanced chatbots, automated content creation, and sophisticated semantic search. A deep understanding of Natural Language Processing (NLP) is crucial for fine-tuning and deploying these models effectively, tailoring their immense power to specific business contexts.
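
Semantic search typically compares vector embeddings rather than keywords: queries and documents are mapped into the same vector space, and results are ranked by cosine similarity. The sketch below assumes the embeddings have already been produced by some embedding model (not shown) and uses hypothetical three-dimensional vectors; real embeddings have hundreds of dimensions and are usually served from a vector index rather than scanned by brute force as here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, doc_vecs, top_k=2):
    """Rank document ids by cosine similarity to the query embedding (brute force)."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical 3-d embeddings; a real system would obtain these from an embedding model.
docs = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "return_window": [0.7, 0.3, 0.1],
}
query = [0.85, 0.15, 0.05]  # hypothetical embedding of "how do I get my money back?"
results = semantic_search(query, docs)
print(results)
```

The refund and return documents rank highest even though the query shares no keywords with their ids, which is the property that distinguishes semantic from keyword search.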

Reinforcement Learning in Production

Reinforcement Learning (RL) is a paradigm in which an agent learns to make sequential decisions by interacting with an environment and receiving rewards or penalties, with the goal of maximizing cumulative reward. Long associated with game-playing, RL is now finding practical applications in business:

- Dynamic Pricing: Adjusting prices in real-time based on supply, demand, and competitor behavior.

- Supply Chain Optimization: Optimizing inventory management and logistics routing to reduce costs and delays.

- Personalization Engines: Learning user preferences to deliver highly customized content or product recommendations.
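
In its simplest form, dynamic pricing can be framed as a multi-armed bandit, a single-state special case of RL in which each candidate price is an "arm" and the reward is the revenue it earns. The epsilon-greedy sketch below is illustrative only: the price points and purchase probabilities in `BUY_PROB` are hypothetical, and a production system would also need context (time, inventory, competitor prices), business guardrails, and handling for demand that changes over time.

```python
import random


def epsilon_greedy_pricing(prices, simulate_revenue, rounds=20_000, epsilon=0.1, seed=0):
    """Estimate average revenue per price point and return the best one (epsilon-greedy bandit)."""
    rng = random.Random(seed)
    counts = {p: 0 for p in prices}
    mean_reward = {p: 0.0 for p in prices}
    for _ in range(rounds):
        if rng.random() < epsilon:
            price = rng.choice(prices)  # explore: try a random price
        else:
            price = max(prices, key=lambda p: mean_reward[p])  # exploit: best estimate so far
        reward = simulate_revenue(price, rng)
        counts[price] += 1
        # Incremental mean update, so no reward history needs to be stored.
        mean_reward[price] += (reward - mean_reward[price]) / counts[price]
    best = max(prices, key=lambda p: mean_reward[p])
    return best, mean_reward


# Hypothetical market: probability that a customer buys at each price point.
BUY_PROB = {10: 0.60, 15: 0.50, 20: 0.20}


def simulate_revenue(price, rng):
    """Simulated environment: revenue equals the price if the customer buys, else 0."""
    return float(price) if rng.random() < BUY_PROB[price] else 0.0


best, estimates = epsilon_greedy_pricing(list(BUY_PROB), simulate_revenue)
print(best, {p: round(r, 2) for p, r in estimates.items()})
```

With these assumed probabilities the expected revenue per visitor is 6.0, 7.5, and 4.0, so the learner should settle on the middle price even though it converts less often than the cheapest one. The `epsilon` parameter controls how much traffic is spent exploring.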

Data Foundations and Feature Engineering

No amount of algorithmic sophistication can overcome poor data. A robust data foundation is the non-negotiable prerequisite for successful AI innovation. This means moving beyond simple data collection to a strategic approach encompassing:

- Data Quality and Governance: Establishing clear ownership, validation rules, and lineage tracking to ensure data is accurate, consistent, and trustworthy.

- Feature Stores: Centralized repositories for curated, production-ready features that can be shared across models, reducing redundant work and ensuring consistency.

- Synthetic Data Generation: Using AI to create artificial data for training models, especially useful for augmenting small datasets or addressing privacy concerns.
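
Validation rules are easiest to govern when they are declared explicitly and evaluated the same way in every pipeline. The sketch below shows one lightweight pattern, assuming records arrive as Python dictionaries; the `Rule` class, the rule set, and the field names (`order_id`, `quantity`, `country`) are hypothetical examples rather than a prescribed schema.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """A named, reusable data-quality check applied to one record."""
    name: str
    check: Callable[[dict], bool]


# Hypothetical rules for an orders table; field names are illustrative.
RULES = [
    Rule("order_id present", lambda r: bool(r.get("order_id"))),
    Rule("quantity is a positive int",
         lambda r: isinstance(r.get("quantity"), int) and r["quantity"] > 0),
    Rule("country is a 2-letter uppercase code",
         lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2
         and r["country"].isalpha() and r["country"].isupper()),
]


def validate(records, rules=RULES):
    """Return (row_index, rule_name) for every failed check so failures can be logged or blocked."""
    failures = []
    for i, record in enumerate(records):
        for rule in rules:
            if not rule.check(record):
                failures.append((i, rule.name))
    return failures


rows = [
    {"order_id": "A1", "quantity": 3, "country": "DE"},
    {"order_id": "", "quantity": 0, "country": "de"},
]
failures = validate(rows)
print(failures)
```

Keeping rules as named data (rather than scattered `if` statements) makes it straightforward to report which rule failed, track pass rates over time, and assign each rule an owner.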

From Prototype to Robust Deployment

The journey from a Jupyter notebook to a production-grade AI system is fraught with challenges. A mature MLOps (Machine Learning Operations) practice is essential for bridging this gap and enabling scalable AI innovation.

Scalability and Infrastructure Patterns

As models grow in size and demand, the underlying infrastructure must scale accordingly. Key considerations include:

- Hybrid Cloud Strategies: Leveraging the public cloud for flexible compute and specialized hardware (GPUs/TPUs) while keeping sensitive data on-premises.

- Containerization and Orchestration: Using tools like Docker and Kubernetes to package and manage AI applications, ensuring portability and consistent deployment across environments.

- Serverless AI: Utilizing managed services for model inference to automatically scale based on demand, reducing infrastructure management overhead.
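
Demand-based autoscaling, whether in a managed inference service or a Kubernetes cluster, usually follows a proportional rule. The Kubernetes Horizontal Pod Autoscaler documents its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that core formula plus min/max clamping; it ignores stabilization windows, pod readiness, and multi-metric behavior, and the metric used (requests per second per replica) is an assumed example.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Proportional scaling rule modeled on the Kubernetes HPA core formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Assumed metric: average requests/second per replica, with a target of 60.
print(desired_replicas(4, 90, 60))  # load above target: scale out
print(desired_replicas(4, 62, 60))  # within 10% tolerance: stay put
print(desired_replicas(4, 20, 60))  # load well below target: scale in
```

The tolerance band is what keeps small metric fluctuations from causing constant scale-up/scale-down cycles.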

Monitoring, Observability, and Incident Playbooks

Once deployed, AI models require constant vigilance. An effective monitoring strategy goes beyond system uptime and includes:

- Model Drift Detection: Tracking changes in data distributions and model performance to identify when a model is no longer accurate and needs retraining.

- Explainability Monitoring: Ensuring that model predictions remain fair and interpretable over time.

- Incident Playbooks: Pre-defined action plans for addressing common failure scenarios, such as data pipeline breaks, sudden performance degradation, or biased outputs.
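
Drift detection often starts with a distribution-comparison statistic computed per feature or on model scores. The sketch below implements the Population Stability Index (PSI), one common choice, using equal-width bins over the combined range of both samples; the bin count, the small floor for empty bins, and the example samples are assumptions. The frequently quoted thresholds (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) are industry rules of thumb rather than a standard and should be calibrated per model.

```python
import math


def population_stability_index(expected, actual, bins=10, floor=1e-6):
    """PSI = sum((a - e) * ln(a / e)) over bins, comparing a reference and a live sample."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against identical constant samples

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            idx = min(int((x - lo) / width), bins - 1)  # put the maximum in the last bin
            counts[idx] += 1
        # Floor empty bins so the logarithm stays finite.
        return [max(c / len(sample), floor) for c in counts]

    p_expected = proportions(expected)
    p_actual = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_expected, p_actual))


reference = [i / 100 for i in range(100)]           # e.g. training-time feature values
live_same = list(reference)                         # no drift
live_shifted = [0.5 + i / 200 for i in range(100)]  # mass moved to the upper half

psi_same = population_stability_index(reference, live_same)
psi_shifted = population_stability_index(reference, live_shifted)
print(round(psi_same, 4), round(psi_shifted, 2))
if psi_shifted > 0.25:  # rule-of-thumb threshold; calibrate for your model
    print("Significant drift: trigger the retraining playbook")
```

Wiring a check like this into scheduled monitoring turns "model drift detection" into a concrete alert that can hand off to an incident playbook.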

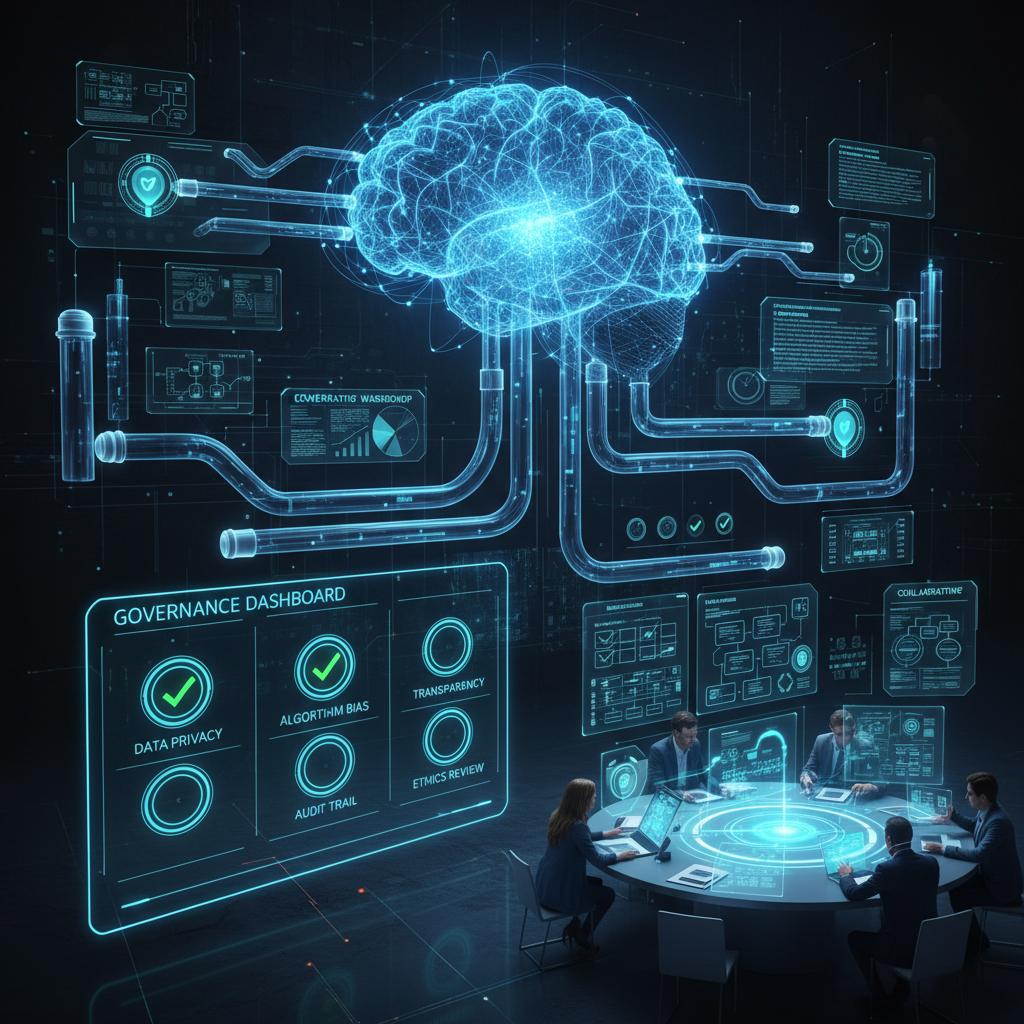

Governance, Ethics, and Security by Design

Trust is the currency of AI innovation. Building robust governance, ethics, and security into the entire AI lifecycle is not an option but a requirement. Adhering to the principles of Responsible AI is critical for mitigating risk and ensuring long-term adoption.

Practical Auditing and Documentation Templates

To make governance tangible, organizations should adopt standardized documentation and auditing practices:

- Model Cards: A short document providing key information about a model’s intended use, performance characteristics, and fairness evaluations.

- Bias and Fairness Audits: Systematically testing models for unintended bias across different demographic groups before and after deployment.

- Data Privacy Impact Assessments: Evaluating how data is collected, used, and stored to ensure compliance with regulations and ethical standards.
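
A basic bias audit compares outcomes across demographic groups. The sketch below computes per-group selection rates and the disparate impact ratio (lowest rate divided by highest), flagging ratios below 0.8 in the spirit of the "four-fifths" rule of thumb from US employment-selection guidance. The predictions and group labels are hypothetical, and this single metric is a screening signal, not a complete fairness evaluation; other metrics, such as equalized odds, may matter more depending on the use case.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0, rates  # nobody selected in any group: no selection-rate gap
    return min(rates.values()) / highest, rates


# Hypothetical audit sample: 1 = approved, 0 = rejected, with a group label per applicant.
preds = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
ratio, rates = disparate_impact_ratio(preds, groups)
print(rates, round(ratio, 2))
if ratio < 0.8:  # four-fifths heuristic: a trigger for human review, not a verdict
    print("Potential adverse impact: escalate for fairness review")
```

Running the same function before and after deployment, and recording the results in the model card, gives the audit trail described above.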

Measuring Value and Optimization Strategies

The ultimate goal of AI innovation is to create measurable business value. This requires looking beyond technical metrics such as accuracy and F1-score to key performance indicators (KPIs) that resonate with the business. Predictive modeling, for example, can forecast outcomes such as demand or churn, which can then be tied directly to business goals.

Success metrics should be defined at the project’s outset and may include:

- Efficiency Gains: Reduction in person-hours spent on a specific task, for example through process automation.

- Cost Reduction: Savings from optimized operations, such as reduced inventory or lower fraud rates.

- Revenue Growth: Increased sales from better personalization, lead scoring, or dynamic pricing.

- Customer Satisfaction: Improved CSAT or NPS scores from faster, more accurate service.
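
Defining metrics at the outset matters because every KPI is a comparison against a baseline. The arithmetic below illustrates how an efficiency gain might be translated into cost savings and a simple return on investment; all figures are hypothetical, and the ROI formula deliberately ignores discounting, one-off build costs, and attribution questions that a real business case would need to address.

```python
def pct_change(baseline, current):
    """Relative change versus the baseline captured at the project's outset."""
    return (current - baseline) / baseline


def simple_roi(annual_benefit, annual_cost):
    """(benefit - cost) / cost; ignores discounting and one-off build costs."""
    return (annual_benefit - annual_cost) / annual_cost


# Hypothetical figures for a support-ticket triage automation.
hours_before, hours_after = 1200, 900   # person-hours per month spent on triage
hourly_cost = 50.0                      # assumed fully loaded cost per person-hour
run_cost_per_year = 60_000.0            # assumed inference, monitoring, and upkeep cost

efficiency = pct_change(hours_before, hours_after)
annual_savings = (hours_before - hours_after) * 12 * hourly_cost
roi = simple_roi(annual_savings, run_cost_per_year)
print(f"Person-hours change: {efficiency:.0%}")  # negative = fewer hours needed
print(f"Annual cost savings: {annual_savings:,.0f}")
print(f"Simple ROI: {roi:.0%}")
```

Here a 25% reduction in person-hours yields 180,000 in annual savings against 60,000 in running costs, a simple ROI of 200%; the same structure works for revenue or fraud-loss KPIs.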

Roadmap Template for Phased Adoption

A structured, phased approach to AI innovation prevents organizations from becoming overwhelmed. A multi-quarter roadmap allows for iterative progress, learning, and value delivery.

Three-Quarter Implementation Checklist (Starting 2025)

Here is a high-level template for a nine-month implementation plan beginning in 2025:

Quarter 1, 2025: Foundational Setup and Strategy

- Establish a cross-functional AI steering committee.

- Identify and prioritize 2-3 high-impact business problems.

- Conduct a data maturity assessment and inventory existing data assets.

- Select a core set of tools for data engineering, model development, and MLOps.

- Develop the initial draft of your organization’s Responsible AI framework.

Quarter 2, 2025: Pilot Project and Governance Framework

- Launch the first pilot project with a clear scope and success metrics.

- Develop the first version of your model documentation (Model Card) template.

- Build and test the initial data and training pipelines.

- Formalize the governance and model review process.

- Conduct initial bias and fairness testing on the pilot model.

Quarter 3, 2025: Scale, Monitor, and Iterate

- Deploy the pilot model in a limited production environment.

- Implement robust monitoring for model performance and data drift.

- Document learnings from the pilot and create a playbook for future projects.

- Begin scoping the next set of AI initiatives based on pilot results.

- Communicate early wins and the business value generated to stakeholders.

Case Neutral Examples and Learning Templates

To make AI innovation concrete, consider these abstract application templates:

- The Optimization Engine: An RL-based system that continuously adjusts parameters in a complex process (e.g., marketing spend, factory line settings, logistics routing) to maximize a target KPI like efficiency or ROI.

- The Insight Extractor: An NLP system that ingests unstructured text (e.g., customer reviews, support tickets, internal documents) to identify emerging trends, sentiment, and compliance risks.

- The Predictive Forecaster: A time-series forecasting model that predicts future demand, customer churn, or potential equipment failure, allowing for proactive intervention.
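
As a starting point for the Predictive Forecaster, simple exponential smoothing produces a one-step-ahead forecast by blending each new observation with a running level: level_t = α·y_t + (1 - α)·level_(t-1). The sketch below assumes a hypothetical weekly demand series and a hand-picked smoothing factor α; it captures neither trend nor seasonality (methods such as Holt-Winters extend it for those), and in practice α would be tuned on held-out data.

```python
def simple_exponential_smoothing(series, alpha=0.3):
    """Return the smoothed level, which serves as the forecast for the next period."""
    if not series:
        raise ValueError("series must contain at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialize the level with the first observation
    for observation in series[1:]:
        # Higher alpha reacts faster to recent changes; lower alpha smooths more.
        level = alpha * observation + (1 - alpha) * level
    return level


weekly_demand = [120, 130, 125, 140, 150, 145]  # hypothetical units sold per week
forecast = simple_exponential_smoothing(weekly_demand, alpha=0.5)
print(f"Next-week demand forecast: {forecast:.1f} units")
```

A simple baseline like this is also useful as a benchmark: a more complex forecasting model should demonstrably beat it before being promoted to production.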

Common Pitfalls and Mitigation Tactics

Many AI innovation initiatives falter due to predictable challenges. Awareness is the first step toward mitigation.

- Pitfall: Treating AI as a purely technical problem. Mitigation: Build cross-functional teams from day one, including business, legal, and operations stakeholders.

- Pitfall: Starting with a solution in search of a problem. Mitigation: Begin with a well-defined business problem and work backward to determine whether AI is the right solution.

- Pitfall: Underestimating data preparation and MLOps effort. Mitigation: Plan to allocate roughly 60-70% of project timelines and resources to data engineering, infrastructure, and deployment processes.

- Pitfall: Neglecting governance and ethics until after a problem occurs. Mitigation: Integrate ethical reviews and fairness audits as mandatory gates in the AI development lifecycle.

Conclusion and Next Steps

Sustainable AI innovation is a strategic journey, not a single destination. It requires a holistic approach that balances powerful technology with robust processes, strong data foundations, and a steadfast commitment to responsible development. By reframing AI as a core business discipline and adopting a phased, measurable roadmap, organizations can move beyond hype and build intelligent systems that deliver tangible, lasting value. The framework outlined in this whitepaper provides a blueprint for this journey. Your next step is to adapt this template to your unique context, assemble your core team, and begin the critical work of identifying the business challenges where AI innovation can make the greatest impact.

Appendix: Technical Resources and Further Reading

For teams looking to deepen their technical expertise, we recommend exploring the following areas. This is not an exhaustive list but a starting point for continuous learning in the fast-evolving field of AI innovation.

- Academic Research Papers: Follow major conferences such as NeurIPS, ICML, and CVPR for the latest breakthroughs in model architectures and training techniques. Preprint servers such as arXiv are invaluable for early access to new work.

- Open-Source Frameworks: Gain hands-on experience with foundational libraries such as TensorFlow, PyTorch, scikit-learn, and Hugging Face Transformers.

- MLOps and Tooling: Investigate open-source tools and platforms that support the end-to-end machine learning lifecycle, including MLflow for experiment tracking, Kubeflow for pipeline orchestration, and platforms for data versioning and model monitoring.

- Responsible AI Toolkits: Explore toolkits released by major technology firms and research institutions that provide practical utilities for model explainability, fairness audits, and privacy preservation.