A Practical Guide to Hyperparameter Optimization

Table of Contents

- Introduction to Hyperparameter Optimization

- Common Search Strategies for Hyperparameter Optimization

- Designing an Efficient Tuning Workflow

- Practical Recipes and Heuristics for Common Model Families

- Reproducibility and Experiment Tracking

- Case Study: Tuning a Gradient Boosted Tree with Limited Compute

- Interpreting Results and Avoiding Common Pitfalls

- Advanced Topics and Research Directions

- Appendix: Reproducible Experiment Checklist

- Further Reading and Resources

Introduction to Hyperparameter Optimization

Welcome to this comprehensive guide on Hyperparameter Optimization (HPO). In machine learning, building a powerful model is not just about choosing the right algorithm; it’s also about tuning its settings correctly. These settings, known as hyperparameters, are the knobs and dials that control the learning process itself. This guide provides a framework for choosing the right tuning strategy based on your model complexity and compute budget, with a strong focus on creating reproducible and interpretable experiments.

Defining Hyperparameters and Why They Matter

Unlike model parameters, which are learned from the data during training (like the weights in a neural network), hyperparameters are set by the practitioner before the training process begins. They define the high-level architecture and behavior of the model. For example:

- The learning rate in a neural network.

- The number of trees in a random forest.

- The regularization parameter `C` in a Support Vector Machine (SVM), where smaller values mean stronger regularization.

The right choice of hyperparameters can be the difference between a state-of-the-art model and a mediocre one. Poor choices can lead to slow training, underfitting (the model is too simple), or overfitting (the model memorizes the training data and fails to generalize). The process of finding the optimal set of these values is the core of Hyperparameter Optimization.

How Hyperparameter Choices Affect Model Behavior

Consider tuning the `max_depth` of a decision tree. A small depth might result in a model that cannot capture complex patterns in the data (high bias). A very large depth might cause the model to learn noise and specific details of the training set, failing on new data (high variance). Hyperparameter optimization is the methodical search for the sweet spot in this bias-variance tradeoff.

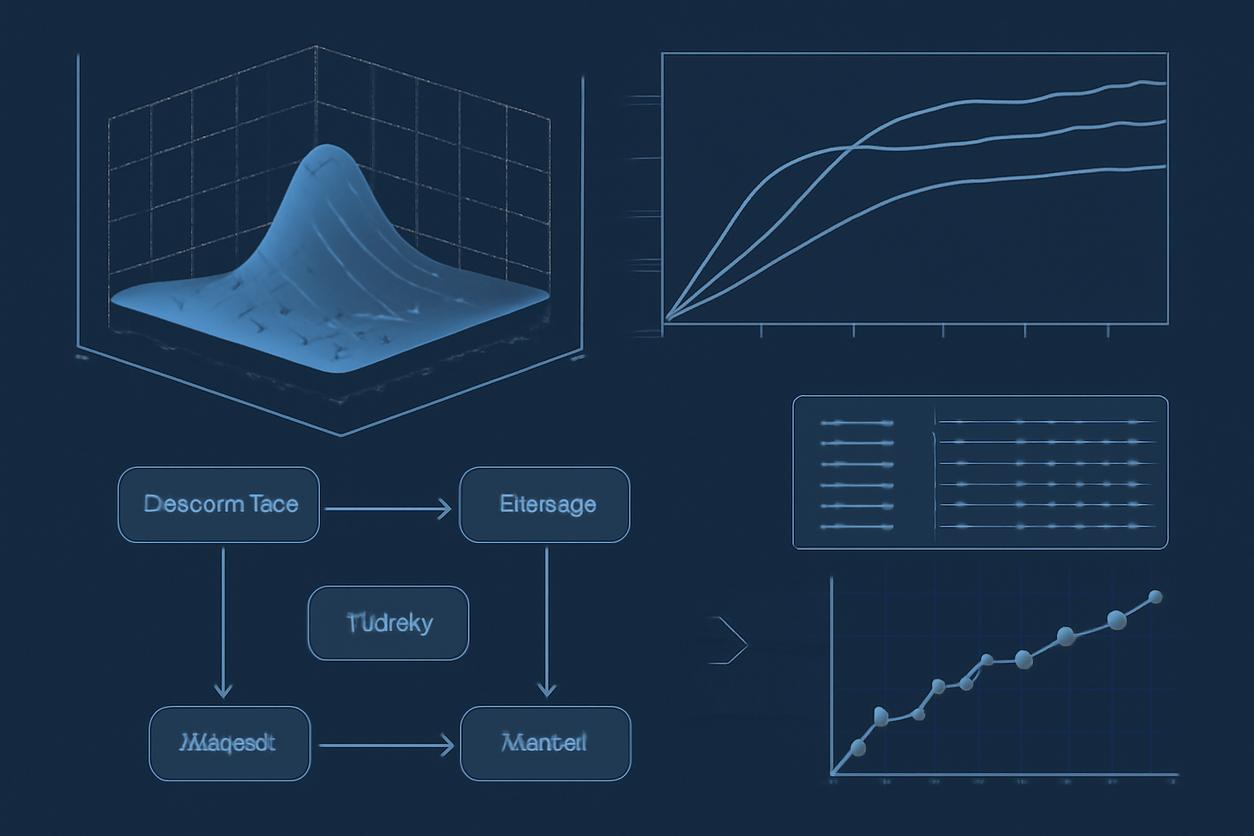

Common Search Strategies for Hyperparameter Optimization

The search for optimal hyperparameters can range from manual guesswork to sophisticated automated strategies. The key is to select a method that balances computational cost with performance gains. Below, we explore the most common strategies and the trade-offs each one makes.

Grid Search: When to Use It and Limitations

Grid Search is the most traditional method. You define a discrete set of values for each hyperparameter, and the algorithm exhaustively evaluates every possible combination.

- When to Use: It is best suited for a very small number of hyperparameters (2-3) where you have a good intuition about the potential range of optimal values.

- Limitations: It suffers from the “curse of dimensionality.” As the number of hyperparameters increases, the number of combinations grows exponentially, making it computationally infeasible. It also wastes resources exploring unpromising regions of the search space.
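To make the growth concrete, here is a minimal sketch that enumerates a grid with the standard library. The hyperparameter names and candidate values are illustrative assumptions, not recommendations.

```python
from itertools import product

# Hypothetical grid: five candidate values for each hyperparameter.
grid = {
    "learning_rate": [0.001, 0.003, 0.01, 0.03, 0.1],
    "max_depth": [2, 4, 6, 8, 10],
    "subsample": [0.5, 0.6, 0.7, 0.8, 0.9],
}

def grid_configs(grid):
    """Yield every combination in the grid as a dict."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))

configs = list(grid_configs(grid))
print(len(configs))  # 5 * 5 * 5 = 125 combinations

# Adding a fourth five-value hyperparameter multiplies the cost by five.
grid["min_child_weight"] = [1, 2, 4, 8, 16]
print(len(list(grid_configs(grid))))  # 625 combinations
```

Each combination is one full training run, so the trial count is the number to compare against your budget before starting.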

Random Search: Benefits and Practical Tips

Random Search, as the name implies, samples a fixed number of combinations randomly from the hyperparameter search space. It has been shown to be surprisingly effective.

- Benefits: It is usually more efficient than Grid Search when only a few hyperparameters really matter. A grid re-tests the same handful of values for each important hyperparameter, whereas every random trial draws a fresh value for every hyperparameter, so the same budget covers the influential dimensions more densely.

- Practical Tips: For continuous values that span orders of magnitude, like a learning rate, sample from a log-uniform distribution (e.g., from 0.0001 to 0.1) rather than a uniform one. A uniform draw on that range lands above 0.01 about 90% of the time, so the smaller magnitudes are barely explored.
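A minimal sketch of Random Search with log-scale sampling might look like the following. The objective is a made-up stand-in for a validation score, and the function names and the `num_layers` hyperparameter are assumptions for illustration.

```python
import math
import random

def sample_log_uniform(rng, low, high):
    """Draw a value whose logarithm is uniform on [log(low), log(high)]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))

def toy_objective(config):
    """Stand-in for a validation score; peaks near learning_rate = 0.01."""
    return -abs(math.log10(config["learning_rate"]) + 2.0)

def random_search(n_trials, seed=0):
    rng = random.Random(seed)  # seeded so the search itself is repeatable
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = {
            "learning_rate": sample_log_uniform(rng, 1e-4, 1e-1),
            "num_layers": rng.randint(1, 4),  # sampled, but ignored by the toy objective
        }
        score = toy_objective(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

best_config, best_score = random_search(n_trials=50)
print(best_config, best_score)
```

In a real run, `toy_objective` would be replaced by a function that trains the model and returns its validation metric.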

Bayesian Optimization: Intuition and Trade-offs

Bayesian Optimization is a smarter, sequential approach to hyperparameter optimization. It builds a probabilistic model (a “surrogate model,” often a Gaussian Process) of the relationship between hyperparameter settings and the objective metric (e.g., validation accuracy). It then uses an “acquisition function” to intelligently decide which hyperparameter combination to try next.

- Intuition: Think of it as an informed search. After each trial, it updates its beliefs about the search space and balances exploring new, uncertain areas with exploiting areas it already knows are promising.

- Trade-offs: It is highly efficient in terms of the number of trials needed, making it ideal for expensive-to-evaluate models (like deep neural networks). However, the process is inherently sequential and can be harder to parallelize than Random Search. The overhead of fitting the surrogate model can also be non-trivial. For an in-depth look, see “Practical Bayesian Optimization of Machine Learning Algorithms” under Further Reading.
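The loop described above can be sketched in pure Python for a single hyperparameter scaled to [0, 1]. Everything here is a toy under stated assumptions: a zero-mean Gaussian Process with a fixed RBF length scale, expected improvement as the acquisition function, a candidate grid instead of a continuous optimizer, and a synthetic objective. Production libraries handle kernels, noise, and multiple dimensions far more carefully.

```python
import math

def rbf(a, b, length_scale=0.3):
    """Squared-exponential kernel for scalar inputs."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    x = [0.0] * n
    for row in reversed(range(n)):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x

def gp_posterior(xs, ys, x_new, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    gram = [
        [rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
        for i, a in enumerate(xs)
    ]
    k_new = [rbf(a, x_new) for a in xs]
    alpha = solve(gram, ys)
    v = solve(gram, k_new)
    mean = sum(k * a for k, a in zip(k_new, alpha))
    var = max(rbf(x_new, x_new) - sum(k * w for k, w in zip(k_new, v)), 1e-12)
    return mean, math.sqrt(var)

def expected_improvement(mean, std, best):
    """Expected improvement over `best` when maximizing."""
    z = (mean - best) / std
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)
    cdf = 0.5 * (1 + math.erf(z / math.sqrt(2)))
    return (mean - best) * cdf + std * pdf

def toy_objective(x):
    """Stand-in for validation accuracy as a function of one scaled hyperparameter."""
    return math.exp(-((x - 0.65) ** 2) / 0.02)

candidates = [i / 200 for i in range(201)]
xs = [0.1, 0.5, 0.9]  # small initial design
ys = [toy_objective(x) for x in xs]
for _ in range(10):
    best = max(ys)
    # Score every candidate with the acquisition function, then evaluate the winner.
    scores = [expected_improvement(*gp_posterior(xs, ys, c), best) for c in candidates]
    x_next = candidates[scores.index(max(scores))]
    xs.append(x_next)
    ys.append(toy_objective(x_next))

best_x = xs[ys.index(max(ys))]
print(best_x, max(ys))
```

Note how each new trial depends on all previous results. That dependency is what makes the method sample-efficient and also what makes it harder to parallelize.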

Multi-Fidelity Methods: Hyperband and Successive Halving

These methods are designed to tackle the problem of expensive model evaluations by using low-fidelity approximations (e.g., training for fewer epochs or on a subset of data). The core idea is to allocate a large budget to promising configurations and quickly discard poor ones.

- Successive Halving (SHA): Starts with a large number of random configurations and a small budget for each. After this initial evaluation, it discards the worst-performing half and doubles the budget for the remaining ones, repeating until one configuration remains.

- Hyperband: This is a more advanced version of SHA that manages the trade-off between the number of configurations to try and the budget allocated to each by running several SHA brackets with different starting budgets. It is widely regarded as one of the more resource-efficient strategies available. The original Hyperband paper, listed under Further Reading, covers the details. These multi-fidelity approaches are a cornerstone of modern, efficient hyperparameter optimization.
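The Successive Halving loop described above can be sketched as follows. The learning curve is synthetic and the function names are hypothetical; in practice the score would come from training each configuration for `budget` epochs or iterations.

```python
import math
import random

def toy_validation_score(config, budget):
    """Hypothetical learning curve: rises with budget toward a config-dependent ceiling."""
    ceiling = 1.0 - abs(math.log10(config["learning_rate"]) + 2.0) / 3.0
    return ceiling * (1.0 - math.exp(-budget / 10.0))

def successive_halving(configs, min_budget=1, eta=2):
    """Keep the top 1/eta of configs each round and multiply their budget by eta."""
    survivors, budget = list(configs), min_budget
    history = []
    while True:
        scored = sorted(
            survivors, key=lambda c: toy_validation_score(c, budget), reverse=True
        )
        history.append((len(scored), budget))
        if len(scored) == 1:
            return scored[0], history
        survivors = scored[: max(1, len(scored) // eta)]
        budget *= eta

rng = random.Random(0)
configs = [{"learning_rate": 10 ** rng.uniform(-4, -1)} for _ in range(16)]
best, history = successive_halving(configs)
print(history)  # [(16, 1), (8, 2), (4, 4), (2, 8), (1, 16)]
print(best)
```

The history shows the trade being made: most configurations only ever receive the smallest budget, and only the last survivor is trained at the largest one.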

Automated and Population-Based Approaches

Inspired by natural evolution, methods like Genetic Algorithms maintain a “population” of hyperparameter configurations. In each “generation,” the best configurations are selected and combined (“crossover”) and mutated to create a new population. These methods can be very effective for large, complex search spaces but often require more configuration themselves.
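A minimal sketch of such an evolutionary loop is shown below. The search space, the fitness function, and the choices of uniform crossover, Gaussian mutation, and elitism are assumptions made for illustration; real genetic algorithms vary widely in these details.

```python
import random

BOUNDS = {"log10_learning_rate": (-5.0, -1.0), "dropout": (0.0, 0.5)}

def toy_fitness(config):
    """Stand-in for a validation score; best at learning rate 1e-3 and dropout 0.2."""
    return -((config["log10_learning_rate"] + 3.0) ** 2) - 10 * (config["dropout"] - 0.2) ** 2

def random_config(rng):
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in BOUNDS.items()}

def crossover(rng, parent_a, parent_b):
    """Uniform crossover: each gene comes from one parent at random."""
    return {name: rng.choice((parent_a[name], parent_b[name])) for name in BOUNDS}

def mutate(rng, config, scale=0.1):
    """Add Gaussian noise to each gene, then clip back into bounds."""
    child = {}
    for name, (lo, hi) in BOUNDS.items():
        value = config[name] + rng.gauss(0.0, scale * (hi - lo))
        child[name] = min(hi, max(lo, value))
    return child

def evolve(generations=20, population_size=20, n_parents=5, seed=0):
    rng = random.Random(seed)
    population = [random_config(rng) for _ in range(population_size)]
    for _ in range(generations):
        parents = sorted(population, key=toy_fitness, reverse=True)[:n_parents]
        children = [
            mutate(rng, crossover(rng, *rng.sample(parents, 2)))
            for _ in range(population_size - n_parents)
        ]
        population = parents + children  # elitism: the best configs survive unchanged
    return max(population, key=toy_fitness)

best = evolve()
print(best, toy_fitness(best))
```

The extra knobs mentioned above are visible here: population size, number of parents, and mutation scale all need values of their own.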

Designing an Efficient Tuning Workflow

A successful hyperparameter optimization effort requires more than just picking a search strategy; it needs a well-designed workflow.

Choosing a Validation Strategy and Metrics

Before you start tuning, you must decide how you will measure performance. Tuning against the test set would turn its score into an optimistic estimate, so every tuning decision should rely on validation data only.

- Validation Strategy: Use a dedicated validation set or, more robustly, K-fold cross-validation. In K-fold CV, the training data is split into K folds; the model is trained on K-1 folds and evaluated on the remaining fold, rotating through all K folds. This gives a more stable estimate of the model’s generalization performance. Scikit-learn provides excellent tools for model selection and validation.

- Metrics: Choose a metric that aligns with your business objective. This could be accuracy, F1-score, AUC-ROC, or Mean Absolute Error, depending on the problem.
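To show the mechanics of K-fold rotation, here is a dependency-free sketch. The helper names are hypothetical and the scorer is a placeholder; in practice, scikit-learn’s `KFold` and `cross_val_score` cover this.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # fold sizes differ by at most one
    for i, validation in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation

def cross_validate(fit_and_score, n_samples, k=5):
    """Average a user-supplied scoring function over k folds."""
    scores = [fit_and_score(train, val) for train, val in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)

# Placeholder scorer: a real one would fit on `train` and evaluate on `val`.
mean_score = cross_validate(
    lambda train, val: len(val) / (len(train) + len(val)), n_samples=103
)
print(mean_score)
```

Every sample lands in exactly one validation fold, which is why the averaged score is more stable than a single hold-out split.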

Budgeting Compute and Parallelization Tactics

Your compute budget (time, money) is a primary constraint. Plan for it explicitly.

- Set a Budget: Define a maximum number of trials or a total time limit for your search.

- Parallelize: Strategies like Grid Search and Random Search are “embarrassingly parallel.” You can evaluate multiple configurations simultaneously on different machines or CPU cores. Bayesian Optimization is harder to parallelize, but modern libraries often support “batched” or “asynchronous” versions to mitigate this.
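Because Random Search trials are independent, they can be sampled up front and dispatched to a worker pool. The sketch below uses a thread pool from the standard library purely to show the pattern; the objective is a stand-in, and for CPU-bound training you would more likely use processes or separate machines.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor

def evaluate(config):
    """Stand-in for training and validating one configuration."""
    return -abs(math.log10(config["learning_rate"]) + 2.0)

def parallel_random_search(n_trials=32, max_workers=4, seed=0):
    rng = random.Random(seed)
    # Sample every configuration up front; trials do not depend on each other.
    configs = [{"learning_rate": 10 ** rng.uniform(-4, -1)} for _ in range(n_trials)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = list(pool.map(evaluate, configs))  # results keep the input order
    best_index = max(range(n_trials), key=scores.__getitem__)
    return configs[best_index], scores[best_index]

best_config, best_score = parallel_random_search()
print(best_config, best_score)
```

Sampling with a seeded generator before dispatching keeps the set of trials identical from run to run, regardless of which worker finishes first.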

Practical Recipes and Heuristics for Common Model Families

While every problem is unique, certain hyperparameters are consistently important for different model types.

Neural Networks: Learning Rate and Regularization Schedules

For deep learning, the most critical hyperparameter is often the learning rate. It is also beneficial to tune related parameters simultaneously.

- Learning Rate: Search on a log scale (e.g., between 1e-5 and 1e-1).

- Optimizer: Adam is a robust default, but trying others like SGD with momentum can be beneficial.

- Regularization: Tune dropout rates (e.g., 0.1 to 0.5) and weight decay (L2 regularization) to prevent overfitting.

Tree-Based Models: Depth, Splits, and Regularization

For models like XGBoost, LightGBM, and Random Forests, focus on parameters that control model complexity.

- `n_estimators` (Number of Trees): More is often better, but returns diminish and training time increases. Use early stopping to find a good value automatically.

- `max_depth` (Tree Depth): Limiting depth is a powerful regularizer. For gradient boosting, typical values range from 3 to 10; Random Forests often tolerate much deeper trees.

- `learning_rate` (Gradient Boosting): A smaller learning rate requires more trees but often leads to better generalization.

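- Regularization: `reg_lambda` (L2) and `reg_alpha` (L1), names accepted by both XGBoost and LightGBM. XGBoost’s native names are `lambda` and `alpha`; LightGBM’s are `lambda_l2` and `lambda_l1`.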
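The early-stopping idea mentioned for `n_estimators` can be sketched independently of any library. The function name, the `patience` parameter, and the score sequence are assumptions for illustration; XGBoost and LightGBM ship their own early-stopping options, which you would normally use instead.

```python
def best_round_with_early_stopping(validation_scores, patience=3):
    """Return (best_round, best_score), stopping after `patience` rounds without improvement.

    `validation_scores` is any iterable of scores, one per boosting round (higher is better).
    """
    best_round, best_score = 0, float("-inf")
    for round_number, score in enumerate(validation_scores, start=1):
        if score > best_score:
            best_round, best_score = round_number, score
        elif round_number - best_round >= patience:
            break  # no improvement for `patience` consecutive rounds
    return best_round, best_score

# Hypothetical validation AUC per round: improves, plateaus, then degrades.
scores = [0.70, 0.78, 0.83, 0.85, 0.86, 0.855, 0.858, 0.85, 0.84, 0.83]
print(best_round_with_early_stopping(scores))  # (5, 0.86)
```

With early stopping in place, `n_estimators` becomes an upper bound rather than a value to search over, which removes one dimension from the search space.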

Linear Models and Kernel Methods: Key Knobs

For models like Logistic Regression, Ridge, and SVMs, the key is regularization.

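- `C` (Inverse Regularization Strength): In scikit-learn’s Logistic Regression and SVMs, `C` is the inverse of the regularization strength, so smaller values mean stronger regularization. Ridge instead exposes `alpha`, where larger values mean stronger regularization. Search either on a log scale (e.g., 0.01 to 100).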

- `gamma` (SVM Kernel Coefficient): For RBF kernels, this parameter defines the influence of a single training example. Search on a log scale.
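To see why `gamma` is searched on a log scale, it helps to evaluate the RBF kernel directly. This small sketch uses two made-up points; the kernel formula itself is the standard one.

```python
import math

def rbf_kernel(x, y, gamma):
    """RBF kernel: exp(-gamma * squared Euclidean distance)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)

point, neighbor = (0.0, 0.0), (1.0, 1.0)  # squared distance = 2
for gamma in (0.01, 0.1, 1.0, 10.0):      # log-spaced candidates
    print(gamma, round(rbf_kernel(point, neighbor, gamma), 4))
# 0.01 0.9802
# 0.1 0.8187
# 1.0 0.1353
# 10.0 0.0
```

A small `gamma` lets each training example influence distant points (a smoother decision boundary), while a large `gamma` confines its influence to a tight neighborhood, which makes overfitting more likely.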

Reproducibility and Experiment Tracking

A hyperparameter optimization run that cannot be reproduced is a wasted effort. Rigorous tracking is non-negotiable for professional machine learning. Every experiment should be logged with:

- Code Version: Use a version control system like Git and log the commit hash.

- Data Version: The exact version of the dataset used for training and validation.

- Hyperparameters: The exact set of hyperparameters tested in each trial.

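- Random Seed: Set and record a fixed seed for every source of randomness (data splits, model initialization, the search algorithm itself) so that runs are repeatable. Some parallel or GPU operations are non-deterministic, so small differences can remain even with fixed seeds.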

- Results: The final performance metrics on the validation and test sets.

Tools like MLflow, Weights and Biases, or even simple spreadsheets can facilitate this. The principles of experiment tracking are fundamental to building reliable systems.
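For teams without a tracking tool yet, the fields listed above fit in a JSON Lines file. The sketch below is one possible layout; the function names, file name, and record fields are assumptions, and the example values are invented.

```python
import json
import subprocess
import tempfile
import time
from pathlib import Path

def current_git_commit():
    """Return the current commit hash, or None outside a Git repository."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"], capture_output=True, text=True, check=True
        )
        return result.stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        return None

def log_trial(log_path, params, metrics, seed, data_version):
    """Append one trial as a JSON line so the run can be audited later."""
    record = {
        "timestamp": time.time(),
        "git_commit": current_git_commit(),
        "data_version": data_version,
        "seed": seed,
        "params": params,
        "metrics": metrics,
    }
    with Path(log_path).open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(record, sort_keys=True) + "\n")
    return record

# Hypothetical values, for illustration only.
log_path = Path(tempfile.gettempdir()) / "hpo_trials.jsonl"
record = log_trial(
    log_path,
    params={"learning_rate": 0.05, "num_leaves": 31},
    metrics={"val_auc": 0.91},
    seed=42,
    data_version="2024-01-snapshot",
)
print(record)
```

Appending one line per trial means a crashed search still leaves a usable record of everything that finished.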

Case Study: Tuning a Gradient Boosted Tree with Limited Compute

Imagine you have a 4-hour compute budget to tune a LightGBM model. A full Grid Search is out of the question. Here is a practical decision framework:

- Strategy Choice: Given the limited budget, a multi-fidelity method like Hyperband is a strong candidate. If the model trains very quickly (e.g., under a minute), a well-budgeted Bayesian Optimization could also work. Let’s choose Hyperband.

- Parameter Space: Define a search space for the most impactful parameters: `learning_rate` (log uniform, 0.01-0.2), `num_leaves` (integer, 20-60), `max_depth` (integer, 4-10), and `reg_lambda` (log uniform, 0.1-10).

- Execution: Use a library like Optuna or Ray Tune that implements Hyperband. Configure it to use early stopping based on validation AUC. The Hyperband scheduler will automatically manage the budget, running many configurations for a few iterations and promoting only the best to receive more resources.

- Outcome: After 4 hours, you get not just the best single configuration but a history of trials. This allows you to see which parameter ranges are most promising, providing valuable insights for future tuning runs.
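To get a feel for how a Hyperband scheduler spreads a budget, the sketch below computes the bracket schedule using the formulas in the Hyperband paper’s algorithm listing, as I read them. The values `max_resource=81` and `eta=3` are illustrative (for LightGBM the resource could be boosting iterations), and library implementations such as those in Optuna or Ray Tune may round or schedule differently.

```python
def hyperband_brackets(max_resource=81, eta=3):
    """Return, per bracket, the (n_configs, resource_per_config) pair for each round."""
    s_max = 0
    while eta ** (s_max + 1) <= max_resource:  # integer floor(log_eta(max_resource))
        s_max += 1
    brackets = []
    for s in range(s_max, -1, -1):
        # Initial number of configs: ceil((s_max + 1) * eta**s / (s + 1)).
        n = -(-(s_max + 1) * eta ** s // (s + 1))
        r = max_resource / eta ** s  # initial resource per config in this bracket
        rounds = [(n // eta ** i, r * eta ** i) for i in range(s + 1)]
        brackets.append(rounds)
    return brackets

for rounds in hyperband_brackets():
    print(rounds)
# [(81, 1.0), (27, 3.0), (9, 9.0), (3, 27.0), (1, 81.0)]
# [(34, 3.0), (11, 9.0), (3, 27.0), (1, 81.0)]
# [(15, 9.0), (5, 27.0), (1, 81.0)]
# [(8, 27.0), (2, 81.0)]
# [(5, 81.0)]
```

The first bracket is aggressive (many configurations, tiny budgets) and the last is plain Random Search at full budget, which is how Hyperband hedges against learning curves that are misleading early on.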

Interpreting Results and Avoiding Common Pitfalls

The output of a hyperparameter optimization process is more than just a single best value.

- Analyze Parameter Importance: Many tuning libraries can generate plots showing which hyperparameters had the biggest impact on performance. This can guide future searches.

- Look at Parameter Landscapes: Visualize how metrics change as hyperparameters are varied. This helps build intuition.

- Pitfall: Overfitting the Validation Set: If you run thousands of trials, you might find a configuration that works well on your specific validation set purely by chance. Always confirm final performance on a held-out test set that was not used at all during tuning.

- Pitfall: Searching the Wrong Space: If the best-performing trials are all at the edge of your search range, it is a sign that you should expand the range in that direction.
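The edge-of-range check is easy to automate. The helper below is a hypothetical sketch; the 5% tolerance and the example trial values are assumptions.

```python
import math

def near_boundary(value, low, high, log_scale=False, tolerance=0.05):
    """Return 'low' or 'high' if value lies within `tolerance` of a range edge, else None."""
    if log_scale:
        value, low, high = math.log(value), math.log(low), math.log(high)
    position = (value - low) / (high - low)  # 0.0 at the lower edge, 1.0 at the upper edge
    if position <= tolerance:
        return "low"
    if position >= 1.0 - tolerance:
        return "high"
    return None

# Hypothetical top trials from a finished search over learning_rate in [0.01, 0.2].
top_learning_rates = [0.19, 0.185, 0.197]
flags = [near_boundary(lr, 0.01, 0.2, log_scale=True) for lr in top_learning_rates]
print(flags)  # ['high', 'high', 'high']
```

If most of the top trials carry the same flag, widening the range in that direction is usually a better next step than running more trials in the current space.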

Advanced Topics and Research Directions

The field of hyperparameter optimization is constantly evolving. As we look toward 2026, some key research areas include:

- Multi-Objective Optimization: Optimizing for multiple metrics simultaneously, such as model accuracy and inference latency.

- Neural Architecture Search (NAS): Automating the design of the neural network architecture itself, a more complex form of HPO.

- Zero-Cost Proxies: Methods that try to predict a model’s performance based on its initial state, without requiring full training, drastically speeding up the search.
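For the multi-objective case, the usual output is a Pareto front rather than a single winner. The sketch below filters a list of trials for the accuracy and latency example mentioned above; the trial data are invented.

```python
def pareto_front(trials):
    """Return trials not dominated on (accuracy: higher is better, latency_ms: lower is better)."""
    def dominates(a, b):
        no_worse = a["accuracy"] >= b["accuracy"] and a["latency_ms"] <= b["latency_ms"]
        better = a["accuracy"] > b["accuracy"] or a["latency_ms"] < b["latency_ms"]
        return no_worse and better
    return [t for t in trials if not any(dominates(other, t) for other in trials)]

# Hypothetical trial results.
trials = [
    {"name": "A", "accuracy": 0.90, "latency_ms": 12.0},
    {"name": "B", "accuracy": 0.92, "latency_ms": 30.0},
    {"name": "C", "accuracy": 0.89, "latency_ms": 15.0},  # dominated by A
    {"name": "D", "accuracy": 0.95, "latency_ms": 80.0},
]
print([t["name"] for t in pareto_front(trials)])  # ['A', 'B', 'D']
```

Choosing among the remaining trials is a product decision (how much latency is one point of accuracy worth?), not something the optimizer can settle on its own.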

Appendix: Reproducible Experiment Checklist

Use this checklist for every tuning run to ensure reproducibility.

- [ ] Goal: What is the primary objective of this tuning run?

- [ ] Model: Which model architecture is being tuned?

- [ ] Dataset: What is the version/name of the dataset?

- [ ] Code Version: What is the Git commit hash?

- [ ] Search Strategy: (e.g., Random Search, Hyperband)

- [ ] Search Space: What are the exact ranges for each hyperparameter?

- [ ] Budget: What is the total number of trials or time limit?

- [ ] Validation Scheme: (e.g., 5-Fold CV, Hold-out set)

- [ ] Metric: What is the primary metric being optimized?

- [ ] Random Seed: Has a global seed been set?

- [ ] Results URI: Where are the detailed logs and final results stored?

Further Reading and Resources

- Hyperparameter Optimization (Wikipedia): A good starting point for general concepts.

- Practical Bayesian Optimization of Machine Learning Algorithms: The foundational paper detailing the application of Bayesian methods.

- Hyperband: A Novel Bandit-Based Approach to Hyperparameter Optimization: The paper introducing this efficient and popular strategy.

- Scikit-learn: Model selection and evaluation: Official documentation on cross-validation and evaluation metrics.

- Towards Accountable Research: A Causal-Comparative Approach to Experiment Tracking: An academic perspective on the importance of meticulous experiment tracking.