Neural Networks Unveiled: A Comprehensive Whitepaper for Practitioners

Table of Contents

- Introduction: Why Neural Networks Matter Today

- Core Building Blocks: Neurons, Activations, and Loss Landscapes

- Architectural Families: Feedforward, Convolutional, Recurrent, and Transformer Models

- Training Pipeline: Data, Loss Functions, Optimizers, and Regularization

- Performance Diagnostics: Common Failure Modes and How to Fix Them

- Scaling Strategies: Model Efficiency, Compression, and Serving Patterns

- Responsible Model Design: Fairness, Robustness, and Explainability

- Cross-Domain Snapshots: Healthcare, Finance, and Automation Use Cases

- Practical Walkthrough: A Simple Model from Data to Inference

- Checklists and Templates: Debugging, Evaluation, and Reproducibility

- Resources and Further Reading

- Appendix: Glossary of Terms and Key Equations

Introduction: Why Neural Networks Matter Today

From powering search engines and recommendation systems to enabling breakthroughs in medical diagnostics and autonomous systems, neural networks have become a cornerstone of modern artificial intelligence. These computational models, loosely inspired by the structure of the human brain, learn complex patterns and relationships directly from data. Unlike traditional approaches that depend on hand-written rules and hand-engineered features, neural networks learn their own feature representations, which makes them especially powerful for unstructured data such as images, text, and sound.

This whitepaper serves as a practitioner’s guide to understanding, building, and troubleshooting neural networks. We move from foundational concepts to advanced strategies, blending concise theory with actionable insights. Whether you are an advanced learner looking to solidify your knowledge or a practitioner seeking to refine your workflow, this document provides a structured path from concept to practice.

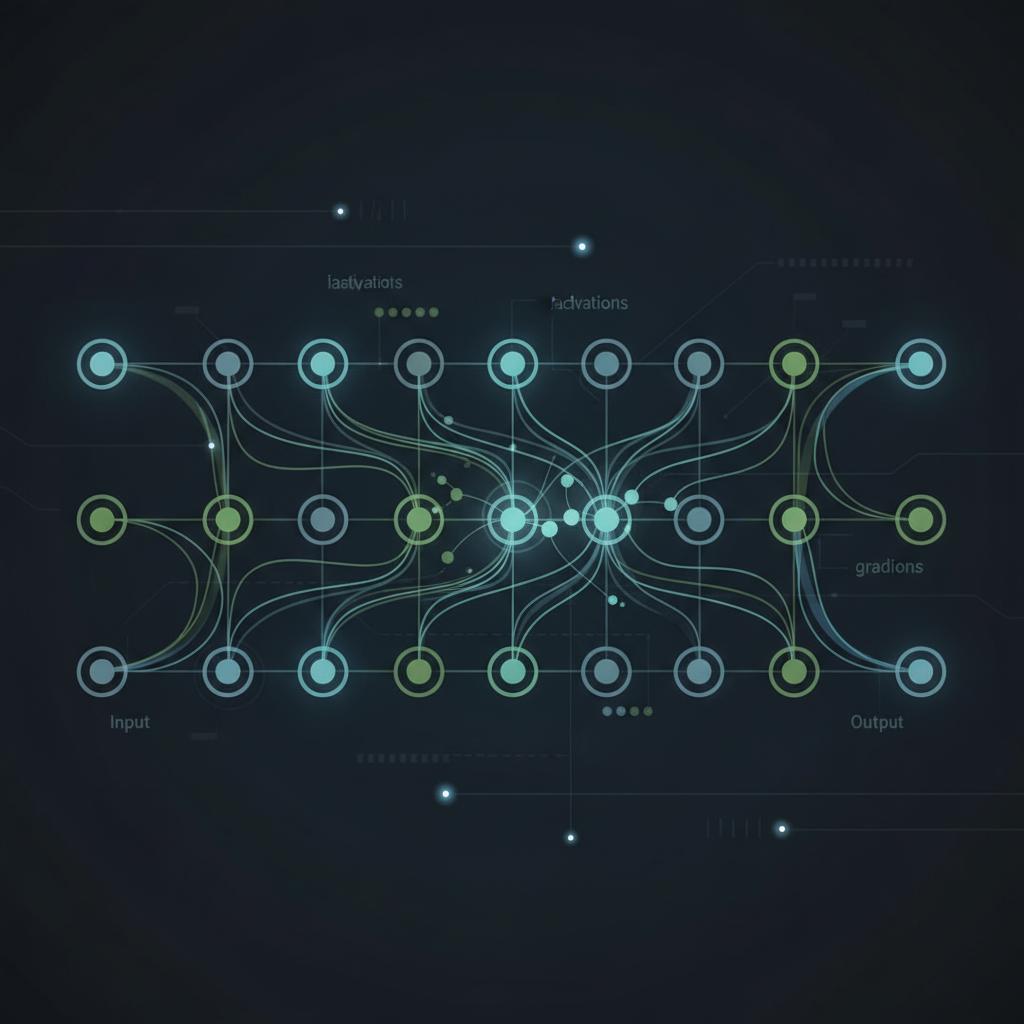

Core Building Blocks: Neurons, Activations, and Loss Landscapes

At their core, all complex neural networks are constructed from a few fundamental components. Understanding these building blocks is the first step toward mastering the technology.

The Artificial Neuron

The simplest unit of a neural network is the artificial neuron, or node. It receives one or more inputs, performs a simple computation, and produces an output. Each input is multiplied by a weight, which signifies its importance. The neuron then sums these weighted inputs, adds a bias term, and passes the result through an activation function.
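The computation described above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation; the function name `neuron_output` and the input values are assumptions chosen for the example.

```python
import math


def sigmoid(z):
    """Logistic activation, used here only as an example activation function."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Illustrative values: 0.5 * 1.0 + (-0.25) * 2.0 + 0.0 = 0.0, and sigmoid(0) = 0.5.
output = neuron_output([1.0, 2.0], [0.5, -0.25], 0.0, sigmoid)
print(output)
```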

Activation Functions

An activation function introduces non-linearity into the network, allowing it to learn patterns far more complex than simple linear relationships. Without one, a stack of layers collapses into a single linear transformation, no matter how deep the network is. Common activation functions include:

- Sigmoid: Maps any value to the range (0, 1), often used in the output layer for binary classification.

- Tanh (Hyperbolic Tangent): Maps values to the range (-1, 1), a zero-centered function that often performs better than Sigmoid in hidden layers.

- ReLU (Rectified Linear Unit): Outputs the input directly if it is positive, and zero otherwise. It is computationally efficient and is the most popular choice for hidden layers in modern neural networks.
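As a rough sketch, the three activations listed above can be written with the standard library alone. The last two helpers (hypothetical names, scalar weights for brevity) illustrate why non-linearity matters: two linear layers with no activation between them reduce algebraically to one linear layer.

```python
import math


def sigmoid(z):
    # Branching keeps exp() from overflowing for large negative z.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z):
    return math.tanh(z)


def relu(z):
    return max(0.0, z)


def two_linear_layers(x, w1, b1, w2, b2):
    """Two stacked linear layers with no activation in between."""
    return w2 * (w1 * x + b1) + b2


def collapsed_layer(x, w1, b1, w2, b2):
    """The single linear layer that computes exactly the same function."""
    return (w2 * w1) * x + (w2 * b1 + b2)


for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), tanh(z), relu(z))
```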

Loss Landscapes

The training process can be visualized as a journey across a loss landscape: a high-dimensional surface where each point represents a specific configuration of the network’s weights, and the height of that point represents the model’s error (or loss). The goal of training is to navigate this landscape toward the lowest possible point, the global minimum, where the model’s error is minimized. In practice, optimizers usually settle in a sufficiently low region rather than the true global minimum.
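To make the landscape picture concrete, here is a toy sketch with a single weight and a bowl-shaped loss. The loss function, starting point, and learning rate are assumptions chosen for illustration; real landscapes have millions of dimensions and many local structures.

```python
def loss(w):
    """A one-dimensional 'landscape' whose minimum sits at w = 3."""
    return (w - 3.0) ** 2


def grad(w):
    """Slope of the landscape at w."""
    return 2.0 * (w - 3.0)


def gradient_descent(w0, learning_rate, steps):
    """Repeatedly step downhill, recording the loss after each step."""
    w = w0
    history = [loss(w)]
    for _ in range(steps):
        w -= learning_rate * grad(w)
        history.append(loss(w))
    return w, history


w_final, history = gradient_descent(w0=0.0, learning_rate=0.1, steps=50)
print(w_final, history[0], history[-1])
```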

Architectural Families: Feedforward, Convolutional, Recurrent, and Transformer Models

Different problems require different architectures. The arrangement of neurons and their connections defines the family of a neural network.

Feedforward Neural Networks (FNNs)

Feedforward networks are the most straightforward architecture: information moves in only one direction, from the input layer to the output layer. FNNs, including Multi-Layer Perceptrons (MLPs), are excellent for structured or tabular data, such as predicting customer churn based on account information.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks are specifically designed for processing grid-like data, such as images. They use special layers called convolutional layers to automatically and adaptively learn spatial hierarchies of features, from simple edges and textures to complex objects and scenes. They are the standard for most computer vision tasks.
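The core operation of a convolutional layer can be sketched as sliding a small kernel over the image and summing element-wise products. The sketch below assumes no padding and a stride of 1, and uses a hand-picked vertical-edge kernel; in a real CNN the kernel values are learned.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# A tiny image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 1], [-1, 1]]

feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # the response peaks in the column where the edge sits
```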

Recurrent Neural Networks (RNNs)

RNNs are built to handle sequential data, like text or time series. They have loops, allowing information to persist from one step in the sequence to the next. Variants like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) were developed to overcome challenges with learning long-range dependencies, making them effective for language translation and stock market prediction.
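The "loop" in an RNN amounts to feeding the previous hidden state back in at every step. The following is a minimal sketch of a vanilla RNN cell (not an LSTM or GRU); the weight names `w_xh`, `w_hh`, and `b_h` are conventional but assumed here, and the values are illustrative.

```python
import math


def rnn_step(x_t, h_prev, w_xh, w_hh, b_h):
    """One vanilla RNN step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    h_t = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(w_xh[i][j] * x_t[j] for j in range(len(x_t)))
        total += sum(w_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(total))
    return h_t


def run_rnn(sequence, h0, w_xh, w_hh, b_h):
    """Apply the same cell to every element, carrying the hidden state forward."""
    h = h0
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_xh, w_hh, b_h)
        states.append(h)
    return states


# One input feature, one hidden unit. The second input is zero, yet the
# second state is non-zero because the first input persists in the state.
states = run_rnn([[1.0], [0.0]], [0.0], [[1.0]], [[1.0]], [0.0])
print(states)
```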

Transformer Models

Originally designed for natural language processing (NLP), the Transformer architecture has revolutionized the field. It relies on a mechanism called self-attention to weigh the importance of different words in a sequence, allowing for a more parallelized and often more powerful approach than RNNs. Transformers are now being successfully applied to other domains, including computer vision and biology.
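A simplified sketch of self-attention follows. It assumes identity projections (queries, keys, and values are all the raw input vectors) and a single head, so it omits the learned projection matrices, multi-head structure, and positional information that a real Transformer uses.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (a simplification)."""
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        # Score this position against every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all value vectors.
        outputs.append([sum(w[t] * x[t][j] for t in range(len(x))) for j in range(d)])
    return outputs, weights


# Two toy "token" vectors; each attends most strongly to itself.
outputs, weights = self_attention([[1.0, 0.0], [0.0, 1.0]])
print(weights)
```

Because every position is scored against every other position independently, the inner computations can run in parallel, which is the property the paragraph above contrasts with the step-by-step processing of RNNs.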

Training Pipeline: Data, Loss Functions, Optimizers, and Regularization

Building a neural network involves more than designing its architecture. A robust training pipeline is essential for achieving high performance. For a deeper treatment of these concepts, see the Stanford CS231n course notes listed under Resources and Further Reading.

Data Preparation

The quality and preparation of data are paramount. This stage involves cleaning, normalizing or standardizing features to a common scale, and splitting the dataset into training, validation, and testing sets to ensure unbiased evaluation.
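A minimal sketch of the split-then-scale workflow is shown below for a single numeric feature. The 70/15/15 ratios, the seed, and the helper names are assumptions for illustration. Note that the scaling statistics are computed on the training split only and then reused for the other splits, which keeps information from the validation and test sets out of training.

```python
import random
import statistics


def split_indices(n, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle indices 0..n-1 and cut them into train / validation / test."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_train = round(n * train_frac)
    n_val = round(n * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


def fit_standardizer(train_values):
    """Compute mean and standard deviation from the training data only."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values)
    if std == 0.0:
        std = 1.0  # avoid dividing by zero for constant features
    return mean, std


def standardize(values, mean, std):
    return [(v - mean) / std for v in values]


# Illustrative single-feature dataset.
feature = [float(v) for v in range(100)]
train_idx, val_idx, test_idx = split_indices(len(feature))
mean, std = fit_standardizer([feature[i] for i in train_idx])
train_scaled = standardize([feature[i] for i in train_idx], mean, std)
val_scaled = standardize([feature[i] for i in val_idx], mean, std)
```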

Loss Functions

A loss function quantifies the difference between the model’s predictions and the actual ground truth. The choice of loss function depends on the task:

- Mean Squared Error (MSE): Commonly used for regression tasks.

- Binary Cross-Entropy: Used for binary classification problems.

- Categorical Cross-Entropy: Used for multi-class classification problems.
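The three losses above can be sketched as follows. The clipping constant `EPS` is an assumption added to keep `log` away from zero; labels are 0/1 for the binary case and one-hot rows for the multi-class case.

```python
import math

EPS = 1e-12  # assumed clipping constant to avoid log(0)


def mse(y_true, y_pred):
    """Mean squared error for regression targets."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, p_pred):
    """y_true holds 0/1 labels; p_pred holds predicted probabilities of class 1."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, EPS), 1.0 - EPS)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


def categorical_cross_entropy(y_true_onehot, p_pred):
    """Each row of y_true_onehot is one-hot; each row of p_pred sums to 1."""
    total = 0.0
    for t_row, p_row in zip(y_true_onehot, p_pred):
        total += -sum(t * math.log(max(p, EPS)) for t, p in zip(t_row, p_row))
    return total / len(y_true_onehot)


print(mse([1.0, 2.0], [1.0, 4.0]))
print(binary_cross_entropy([1], [0.5]))
print(categorical_cross_entropy([[0, 1, 0]], [[0.25, 0.5, 0.25]]))
```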

Optimizers

The optimizer is the algorithm that adjusts the network’s weights and biases to minimize the loss function. It uses the gradients of the loss function to navigate the loss landscape. Popular optimizers include Stochastic Gradient Descent (SGD) with momentum and adaptive optimizers like Adam, which adjusts the learning rate for each parameter individually.
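To show what "adjusting the weights" looks like, here is a sketch of one update step for SGD with momentum and for Adam, written for a single scalar parameter. The default hyperparameters are commonly used values and are assumptions here; framework implementations apply the same idea to whole tensors.

```python
import math


def sgd_momentum_step(w, grad, velocity, lr=0.01, momentum=0.9):
    """Accumulate a running velocity and move the weight along it."""
    velocity = momentum * velocity - lr * grad
    return w + velocity, velocity


def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count used for bias correction."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # Dividing by sqrt(v_hat) gives each parameter its own effective step size.
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


w_sgd, vel = sgd_momentum_step(w=1.0, grad=2.0, velocity=0.0, lr=0.1)
w_adam, m, v = adam_step(w=1.0, grad=4.0, m=0.0, v=0.0, t=1)
print(w_sgd, w_adam)
```

On its first step Adam moves the weight by roughly the learning rate regardless of the gradient's magnitude, which is one way to see the per-parameter scaling at work.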

Regularization

Regularization techniques are used to prevent overfitting, a scenario where the model performs well on training data but poorly on unseen data. Common methods include:

- L1 and L2 Regularization: Add a penalty to the loss function based on the magnitude of the weights (the sum of absolute values for L1, the sum of squares for L2).

- Dropout: Randomly “drops out” (sets to zero) a fraction of neurons during each training step, forcing the network to learn more robust features.
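Both techniques can be sketched briefly. The penalty strength `lam` and the dropout `rate` are assumed hyperparameters. The dropout shown is the "inverted" variant, which rescales surviving activations during training so that nothing needs to change at inference time.

```python
import random


def l1_penalty(weights, lam):
    """Sum of absolute weights, scaled by lam; added to the data loss."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """Sum of squared weights, scaled by lam; added to the data loss."""
    return lam * sum(w * w for w in weights)


def dropout(activations, rate, training, rng):
    """Inverted dropout: zero each unit with probability `rate` while training."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    # Survivors are scaled by 1 / keep so the expected activation is unchanged.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)
hidden = [1.0] * 8
print(dropout(hidden, rate=0.5, training=True, rng=rng))
print(dropout(hidden, rate=0.5, training=False, rng=rng))
```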

Performance Diagnostics: Common Failure Modes and How to Fix Them

Even with a well-designed architecture, training neural networks can be challenging. Identifying and fixing common failure modes is a critical skill for any practitioner.

Overfitting and Underfitting

Monitoring training and validation loss curves is the primary way to diagnose these issues. Underfitting occurs when the model is too simple to capture the underlying data patterns, so both training and validation loss stay high. Overfitting occurs when the model memorizes the training data, leading to a low training loss but a high and increasing validation loss. To address overfitting, add more data, simplify the model, or apply regularization; to address underfitting, increase the model’s capacity.
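A small helper can turn the loss curves into numbers worth inspecting. This is a sketch with a hypothetical function name and made-up loss values; it reports where validation loss bottomed out, whether it rose afterward, and the final gap between validation and training loss.

```python
def summarize_curves(train_losses, val_losses):
    """Summarize per-epoch loss curves for a quick overfitting check."""
    best_epoch = min(range(len(val_losses)), key=val_losses.__getitem__)
    return {
        "best_val_epoch": best_epoch,
        "val_rising_after_best": val_losses[-1] > val_losses[best_epoch],
        "final_gap": val_losses[-1] - train_losses[-1],
    }


# Made-up curves showing the overfitting pattern: training loss keeps
# falling while validation loss turns upward after epoch 1.
train_losses = [1.0, 0.6, 0.3, 0.1]
val_losses = [1.1, 0.7, 0.8, 1.0]
summary = summarize_curves(train_losses, val_losses)
print(summary)
```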

Vanishing and Exploding Gradients

In very deep neural networks, gradients can become extremely small (vanishing) or large (exploding) as they are propagated backward through the layers. This can stall or destabilize training. This problem can be mitigated by:

- Using ReLU or its variants as activation functions.

- Implementing proper weight initialization schemes (e.g., He or Xavier initialization).

- Using batch normalization.

- Applying gradient clipping (capping the gradient value at a threshold).
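Two of the mitigations above are easy to sketch: gradient clipping and weight initialization. Clipping is shown both per value and by overall norm (the norm variant preserves the gradient's direction). The initializers follow the usual He-normal and Xavier-uniform formulas; the function names and thresholds are assumptions for the example.

```python
import math
import random


def clip_by_value(grads, threshold):
    """Cap each gradient component to the range [-threshold, threshold]."""
    return [max(-threshold, min(threshold, g)) for g in grads]


def clip_by_global_norm(grads, max_norm):
    """Rescale the whole gradient vector if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


def he_init(fan_in, fan_out, rng):
    """He normal initialization: std = sqrt(2 / fan_in), commonly paired with ReLU."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def xavier_init(fan_in, fan_out, rng):
    """Xavier uniform initialization: limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]


rng = random.Random(0)
he_weights = he_init(fan_in=4, fan_out=3, rng=rng)
xavier_weights = xavier_init(fan_in=4, fan_out=3, rng=rng)
clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(clipped)
```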

Scaling Strategies: Model Efficiency, Compression, and Serving Patterns

As neural networks grow in size and complexity, deploying them efficiently becomes a major challenge. The focus of scaling strategies for 2025 and beyond is on making these powerful models more accessible, faster, and less resource-intensive.

Model Efficiency in 2025 and Beyond

Future strategies will increasingly focus on creating inherently efficient architectures. This involves moving beyond simply stacking layers and instead designing lightweight, specialized operators and search algorithms (Neural Architecture Search) to discover optimal, low-resource models for specific tasks.

Model Compression

Compression techniques reduce the size and computational cost of trained neural networks, making them suitable for deployment on edge devices like smartphones.

- Pruning: Removing redundant or unimportant weights or neurons from the network.

- Quantization: Reducing the precision of the weights from 32-bit floating-point numbers to 8-bit integers or less, significantly cutting down on memory and computational requirements.
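The two techniques can be sketched on a flat list of weights. The pruning shown is simple magnitude pruning, and the quantization is symmetric with one scale for the whole tensor; both are common baseline choices assumed here for illustration, and production toolchains offer more refined schemes.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric quantization to integers in [-127, 127] with a single scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate floating-point weights."""
    return [v * scale for v in q]


weights = [0.3, -0.7, 0.12, 0.05]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(pruned, q, restored)
```

The restored weights differ from the originals by at most half a quantization step, which is the precision traded away for the smaller storage format.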

Advanced Serving Patterns

For large-scale deployment, advanced serving patterns are essential. By 2025, we expect wider adoption of techniques like model parallelism (splitting a single large model across multiple GPUs) and the use of specialized hardware compilers that optimize trained neural networks for specific inference chips, leading to dramatic speedups.

Responsible Model Design: Fairness, Robustness, and Explainability

With the increasing integration of neural networks into society, designing them responsibly is no longer optional. Following established guidance, such as the NIST AI Risk Management Framework, is crucial.

Fairness and Bias

Neural networks can inadvertently learn and amplify societal biases present in training data. It is critical to audit datasets for bias, use fairness metrics to evaluate model outputs across different demographic groups, and apply bias mitigation techniques during pre-processing, training, or post-processing.
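As one concrete example of a fairness metric, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. It is only one of several possible metrics, and the predictions and group labels are made up for illustration.

```python
def positive_rate(predictions):
    """Fraction of predictions equal to 1."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Return the gap in positive-prediction rates and the per-group rates."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {g: positive_rate(p) for g, p in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Made-up binary predictions for two demographic groups.
predictions = [1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "b", "b", "b"]
gap, rates = demographic_parity_difference(predictions, groups)
print(gap, rates)
```

A gap of zero means every group receives positive predictions at the same rate; what counts as an acceptable gap depends on the application.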

Robustness and Security

Models can be vulnerable to adversarial attacks, where small, imperceptible changes to the input can cause the model to make incorrect predictions. Building robust models involves techniques like adversarial training, where the model is exposed to such examples during the training process to learn to defend against them.
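A classic way to generate such examples is the fast gradient sign method (FGSM), sketched here against a tiny logistic regression model rather than a deep network. The weights, input, and `epsilon` are assumptions chosen so the effect is easy to see; in real attacks `epsilon` is much smaller relative to the input scale.

```python
import math


def predict_proba(x, w, b):
    """Probability of class 1 under a logistic regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, epsilon):
    """Nudge each input feature by epsilon in the direction that raises the loss."""
    p = predict_proba(x, w, b)
    # Gradient of binary cross-entropy with respect to the input is (p - y) * w.
    grad_x = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad_x)]


w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1
x_adv = fgsm(x, y, w, b, epsilon=0.5)
print(predict_proba(x, w, b), predict_proba(x_adv, w, b))
```

Adversarial training, as described above, would add perturbed inputs like `x_adv` (paired with the original label) to the training batches.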

Explainability (XAI)

As neural networks are often “black boxes,” understanding why they make a particular decision is crucial for trust and debugging. Explainable AI (XAI) techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) help provide insights into which input features most influenced a model’s prediction.
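To give a feel for the idea behind SHAP, the sketch below computes exact Shapley values for a tiny model by averaging each feature's marginal contribution over every ordering of the features. This is not the `shap` library's API or algorithm; it is a brute-force illustration that is only feasible for a handful of features, and the models and baseline are made up.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley values of `model` at `x`, relative to a baseline input."""
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        prev = model(current)
        for i in order:
            # Switch feature i from its baseline value to its actual value.
            current[i] = x[i]
            new = model(current)
            phi[i] += new - prev
            prev = new
    return [p / len(orderings) for p in phi]


def linear_model(v):
    return 2.0 * v[0] + 3.0 * v[1]


def interaction_model(v):
    return v[0] * v[1]


print(shapley_values(linear_model, [1.0, 1.0], [0.0, 0.0]))
print(shapley_values(interaction_model, [1.0, 1.0], [0.0, 0.0]))
```

The attributions always sum to the difference between the model's output at the input and at the baseline, which is what makes them usable as an additive explanation.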

Cross-Domain Snapshots: Healthcare, Finance, and Automation Use Cases

The versatility of neural networks is evident in their wide-ranging applications across diverse industries.

Healthcare

In medical imaging, CNNs are used to detect diseases such as cancer in X-rays and MRIs, with reported accuracy on some tasks comparable to, or exceeding, that of human radiologists. This application of neural networks is reshaping diagnostics.

Finance

Recurrent Neural Networks and Transformers are deployed for algorithmic trading and fraud detection by analyzing patterns in time-series data of market trends and user transactions.

Automation

In robotics and autonomous vehicles, neural networks are used to process sensor data from cameras and LiDAR to perceive the environment, enabling tasks like object detection, path planning, and control.

Practical Walkthrough: A Simple Model from Data to Inference

This section provides a high-level pseudocode walkthrough for building a simple feedforward neural network for a classification task.

Step 1: Data Loading and Preprocessing

    # Pseudocode: load_csv, separate_features_labels, normalize, and split_data
    # are placeholders for whatever data utilities your framework provides.
    def prepare_data(file_path):
        data = load_csv(file_path)
        features, labels = separate_features_labels(data)
        normalized_features = normalize(features)
        train_x, val_x, train_y, val_y = split_data(
            normalized_features, labels, split_ratio=0.8
        )
        return train_x, val_x, train_y, val_y

Step 2: Model Definition

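    # Pseudocode: SequentialModel and DenseLayer are placeholder names,
    # not a specific library's API.
    def define_model(input_size, num_classes):
        model = SequentialModel()
        model.add(DenseLayer(input_size, 128, activation='relu'))
        model.add(DenseLayer(128, 64, activation='relu'))
        # Softmax turns the final layer's scores into class probabilities.
        model.add(DenseLayer(64, num_classes, activation='softmax'))
        return model

Step 3: Training Loop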

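    # Pseudocode: CategoricalCrossEntropy and AdamOptimizer are placeholder names.
    def train_model(model, train_x, train_y, num_epochs):
        loss_function = CategoricalCrossEntropy()
        optimizer = AdamOptimizer(learning_rate=0.001)
        for epoch in range(1, num_epochs + 1):
            # Forward pass on the full training set (no mini-batching in this sketch).
            predictions = model.forward(train_x)
            loss = loss_function.calculate(predictions, train_y)
            # Backpropagate to get gradients, then take one optimizer step.
            gradients = loss.backward()
            optimizer.update_weights(model.parameters, gradients)
            print(f"Epoch {epoch}, Loss: {loss}")

Step 4: Inference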

    def make_prediction(model, new_data_point):
        # normalize must apply the same scaling that was used on the training data.
        processed_data = normalize(new_data_point)
        prediction_probabilities = model.forward(processed_data)
        # The predicted class is the index of the highest probability.
        predicted_class = argmax(prediction_probabilities)
        return predicted_class

Checklists and Templates: Debugging, Evaluation, and Reproducibility

Practical tools can streamline the development process and ensure consistency.

Debugging Checklist

- Check data shape and types: Is the input tensor shape what the model expects?

- Normalize input data: Have you scaled your features to a small range (e.g., [0, 1] or [-1, 1])?

- Start with a small, simple model: Can your model overfit a tiny subset of the data?

- Verify loss function and output activation: Are they appropriate for your task (e.g., Softmax with Cross-Entropy for multi-class)?

- Lower the learning rate: Is your loss exploding or fluctuating wildly? Your learning rate might be too high.

- Visualize activations and gradients: Are layers dying (all-zero activations)? Are gradients flowing?

Evaluation Matrix Template

Use a structured table to compare experiments.

| Model ID | Architecture | Hyperparameters | Validation Accuracy | Validation Loss | Notes |
|---|---|---|---|---|---|
| Exp_001 | FNN (2 layers) | LR=0.01, Adam | 85.2% | 0.34 | Baseline model |
| Exp_002 | FNN (3 layers) | LR=0.01, Adam | 87.5% | 0.29 | Added one hidden layer |
| Exp_003 | FNN (3 layers) | LR=0.001, Adam, Dropout=0.3 | 89.1% | 0.25 | Added dropout, lowered LR |

Reproducibility Notes

To ensure your experiments can be reproduced, always document:

- Random seeds (for libraries like NumPy, PyTorch, TensorFlow).

- Versions of key libraries and programming language.

- The exact dataset version or source.

- All model hyperparameters and the final configuration.
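One lightweight way to capture the items above is to seed the random number generators at the start of a run and write a small JSON record next to the results. The sketch below seeds only Python's built-in generator; the record fields, the dataset identifier, and the helper names are assumptions for illustration.

```python
import json
import platform
import random


def set_seed(seed):
    """Seed Python's built-in RNG; other libraries need their own calls."""
    random.seed(seed)
    # If installed, also seed the libraries you use, for example:
    #   numpy.random.seed(seed)
    #   torch.manual_seed(seed)
    #   tensorflow.random.set_seed(seed)


def experiment_record(seed, hyperparameters, dataset_version, library_versions):
    """Collect everything needed to rerun the experiment into one dictionary."""
    return {
        "seed": seed,
        "python_version": platform.python_version(),
        "library_versions": library_versions,
        "dataset_version": dataset_version,
        "hyperparameters": hyperparameters,
    }


# Re-seeding reproduces the same random draw.
set_seed(42)
first = random.random()
set_seed(42)
second = random.random()

record = experiment_record(
    seed=42,
    hyperparameters={"learning_rate": 0.001, "dropout": 0.3},
    dataset_version="example-v1",  # hypothetical identifier
    library_versions={},  # fill in from your own environment
)
record_json = json.dumps(record, indent=2, sort_keys=True)
print(record_json)
```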

Resources and Further Reading

The field of deep learning and neural networks is vast. Here are curated resources to continue your journey:

- General Overview: The Wikipedia article on Artificial Neural Networks provides a broad historical and theoretical context.

- Core Training Concepts: Stanford’s CS231n course notes offer an intuitive and comprehensive explanation of fundamental training processes.

- Model Deployment: For those looking to take their models to production, this paper on model deployment basics covers key challenges and patterns.

- Responsible AI: The NIST AI Risk Management Framework provides essential guidance for building trustworthy AI systems.

Appendix: Glossary of Terms and Key Equations

Glossary of Key Terms

- Backpropagation: The algorithm used to calculate the gradients of the loss function with respect to the network’s weights, enabling efficient training.

- Epoch: One complete pass through the entire training dataset.

- Batch Size: The number of training examples used in one iteration (one weight update).

- Hyperparameter: A configuration value that is set before training rather than learned from the data, such as the learning rate or the number of layers.

- Tensor: A multi-dimensional array that is the fundamental data structure used in neural networks.

Key Equations

1. Weighted Sum in a Neuron:

z = (w₁x₁ + w₂x₂ + … + wₙxₙ) + b = W · X + b

Where W is the vector of weights, X is the vector of inputs, and b is the bias.

2. Neuron Output:

a = f(z)

Where f is the activation function (e.g., ReLU or Sigmoid) and z is the weighted sum.