The Pragmatic MLOps Playbook: A Guide to Sustainable Machine Learning

Table of Contents

- Introduction: Why Coordinated Model Operations Matter

- Reframing the Model Lifecycle: From Experimentation to Graceful Retirement

- Core Building Blocks: Data, Code, Models, and Infrastructure

- Deployment Approaches: Blue-Green, Canary, and Progressive Rollout

- Governance and Ethics: Audit Trails, Explainability, and Bias Controls

- Operational Playbook: Routine Checks, Incident Runbooks, and Team Handoffs

- Templates and Checklists: Model Release, Rollback, and Retirement

- Case Vignette: End-to-End Sample

- Appendix: Reproducible Pipeline Snippets and Schema Templates

- Further Reading and Resources

Introduction: Why Coordinated Model Operations Matter

In software development, DevOps changed how teams build, test, and release applications, improving both delivery speed and reliability. Machine Learning Operations, or MLOps, applies these principles to the machine learning lifecycle. It is an engineering discipline that aims to unify ML system development (Dev) and ML system operations (Ops) in order to standardize and streamline the continuous delivery of high-performing models in production. A solid MLOps practice is the bridge between a promising model in a Jupyter notebook and a reliable, value-generating service integrated into a business process.

Many organizations excel at the “ML” but falter at the “Ops.” A model that performs well on a static test set is only the first step. Without a coordinated operational strategy, that same model can fail silently in production due to shifting data patterns, introduce unforeseen bias, or become a black box that no one can audit or explain. Effective MLOps isn’t just about deployment; it’s a holistic approach that ensures models are robust, reproducible, governed, and continuously monitored throughout their entire lifecycle. This guide provides a pragmatic playbook for implementing MLOps, focusing on sustainable patterns that deliver long-term value.

Reframing the Model Lifecycle: From Experimentation to Graceful Retirement

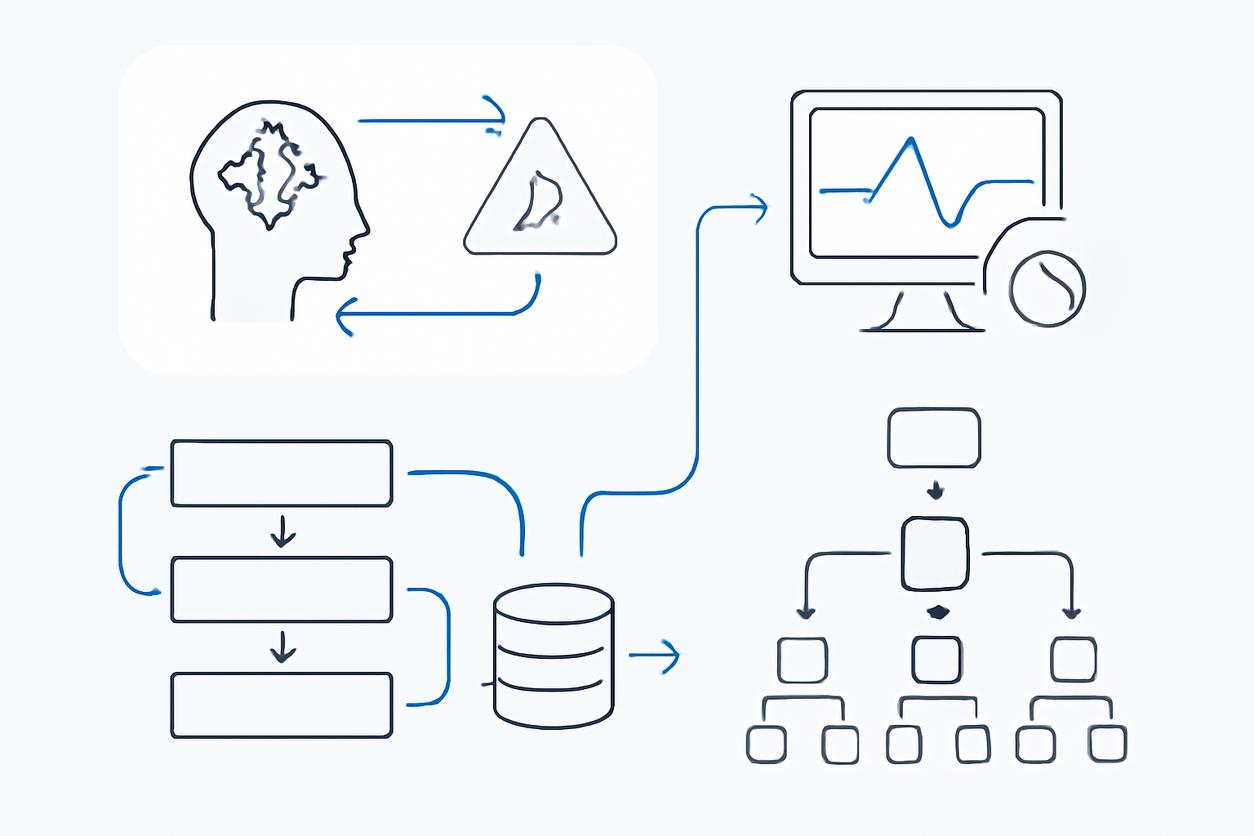

The traditional view of the machine learning lifecycle often over-emphasizes the initial training and experimentation phase. A mature MLOps perspective expands this view to a continuous, iterative loop that includes post-deployment phases crucial for success. This lifecycle doesn’t end at deployment; it extends to monitoring, maintenance, and eventual, planned retirement.

The modern ML lifecycle includes these distinct stages:

- Scoping & Design: Defining the business problem, success metrics (KPIs), and data requirements.

- Data Engineering: Ingesting, cleaning, and transforming data into features for modeling. This is often managed via versioned data pipelines.

- Experimentation & Development: Iteratively training and evaluating models to find the best performer. All experiments should be tracked.

- Validation & Testing: Rigorously testing the model candidate against predefined criteria, including performance, fairness, and robustness.

- Deployment & Integration: Packaging the model and releasing it into the production environment via a controlled strategy.

- Monitoring & Observability: Actively tracking model performance, data drift, and business impact post-deployment.

- Retraining & Iteration: Using monitoring feedback to trigger automated or manual retraining to maintain performance.

- Governance & Audit: Maintaining records of model versions, decisions, and predictions for compliance and accountability.

- Retirement: Decommissioning a model when it is no longer effective, relevant, or is being replaced by a superior version.

Core Building Blocks: Data, Code, Models, and Infrastructure

A robust MLOps foundation rests on four core pillars. Managing these components with rigor is non-negotiable for achieving reproducibility and reliability.

- Data: Raw data, features, and labels. It must be versioned, validated, and accessible.

- Code: Scripts for data processing, feature engineering, model training, and inference. This code should live in a version control system like Git.

- Models: The trained artifacts (e.g., weights, serialized objects). These should be versioned and stored in an artifact or model registry.

- Infrastructure: The underlying compute, storage, and networking resources, often managed as code (IaC) using tools like Terraform or CloudFormation for consistency.

Designing Reproducible Pipelines: Versioning and Artifact Management

Reproducibility is the cornerstone of trustworthy MLOps. If you cannot recreate a model and its predictions given the same inputs, you cannot debug it, audit it, or confidently improve it. A reproducible pipeline is an automated workflow that orchestrates the entire process from data ingestion to model deployment.

Key practices for reproducibility include:

- Version Everything: Every component must be versioned. Use Git for code, data versioning tools (like DVC) for datasets, and a model registry for trained artifacts. Each production model should be traceable to a specific commit hash, data version, and model artifact version.

- Artifact Management: Store all outputs (cleaned data, serialized models, evaluation reports) in a centralized artifact repository. This prevents re-running expensive computations and provides a clear lineage of how an asset was created.

- Containerization: Use containers (e.g., Docker) to package the application, including all its dependencies. This ensures that the training and inference environments are identical and portable across different systems.
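The traceability requirement above (one commit hash, one data version, one model artifact version per production model) could be captured in a small lineage record. The following is a minimal sketch, not a replacement for DVC or a model registry: `LineageRecord`, `build_lineage`, and the idea of using a SHA-256 content hash as the "version" are assumptions made for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical record tying a model artifact to its code and data."""

    git_commit: str
    data_sha256: str
    model_sha256: str

    def to_json(self) -> str:
        # Sorted keys keep the serialized record stable across runs.
        return json.dumps(asdict(self), sort_keys=True)


def build_lineage(git_commit: str, data_path: Path, model_path: Path) -> LineageRecord:
    """Hash the dataset and model files and bundle them with the commit."""
    return LineageRecord(
        git_commit=git_commit,
        data_sha256=sha256_of_file(data_path),
        model_sha256=sha256_of_file(model_path),
    )
```

Storing the JSON form of this record next to the model artifact gives an auditor a single place to look up which code and data produced it. Any change to the dataset file changes `data_sha256`, so two models trained on different data can never share a record.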

Validation Strategies: Automated Tests for Data and Models

Automated validation is your system’s immune response. It catches issues before they impact production. Your CI/CD (Continuous Integration/Continuous Deployment) pipeline should incorporate robust, automated checks.

- Data Validation: These tests run before training to ensure data quality.

  - Schema Checks: Verify that the input data has the expected columns, data types, and format.

  - Distributional Checks: Test for significant shifts in the statistical properties (mean, median, variance) of key features between the training data and new, incoming data.

  - Business Logic Checks: Ensure data conforms to domain-specific rules (e.g., age cannot be negative, product IDs must exist in the master database).

- Model Validation: These tests run after training to qualify a model for release.

  - Performance Testing: Automatically evaluate the model against key metrics (e.g., accuracy, precision, F1-score) on a held-out test set. The model must outperform a predefined baseline or the current production model.

  - Fairness & Bias Testing: Analyze model performance across different demographic segments to identify and mitigate potential biases.

  - Robustness Testing: Check model behavior on edge cases or perturbed data to ensure it fails gracefully.
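The schema and business-logic checks described above can be expressed as a small validator. This sketch assumes a schema shaped like the data schema template in the appendix (`features` entries with `type`, `required`, `allow_null`, `min_value`, `max_value`, and `allowed_values`). The function name `validate_record` and the `TYPE_MAP` mapping are hypothetical, and a production pipeline would more likely rely on a dedicated validation library.

```python
from typing import Any

# Maps the schema's type names to Python types (an assumption of this sketch).
TYPE_MAP = {"string": str, "integer": int, "float": (int, float)}


def validate_record(record: dict[str, Any], schema: dict[str, Any]) -> list[str]:
    """Return a list of violations; an empty list means the record is valid."""
    errors: list[str] = []
    for spec in schema["features"]:
        name = spec["name"]
        value = record.get(name)
        if value is None:
            if spec.get("required") or spec.get("allow_null") is False:
                errors.append(f"{name}: missing or null")
            continue
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, TYPE_MAP[spec["type"]]):
            errors.append(f"{name}: expected {spec['type']}, got {type(value).__name__}")
            continue
        if "min_value" in spec and value < spec["min_value"]:
            errors.append(f"{name}: {value} is below {spec['min_value']}")
        if "max_value" in spec and value > spec["max_value"]:
            errors.append(f"{name}: {value} is above {spec['max_value']}")
        if "allowed_values" in spec and value not in spec["allowed_values"]:
            errors.append(f"{name}: {value!r} is not an allowed value")
    return errors


SCHEMA = {
    "dataset_name": "customer_data_v1",
    "features": [
        {"name": "customer_id", "type": "string", "required": True},
        {"name": "age", "type": "integer", "min_value": 18, "max_value": 100},
        {"name": "account_balance", "type": "float", "allow_null": False},
        {"name": "account_type", "type": "string",
         "allowed_values": ["checking", "savings", "investment"]},
    ],
}
```

Returning every violation rather than raising on the first one lets a CI job report all problems in a batch at once. The pipeline can then fail the run whenever any record yields a non-empty list.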

Deployment Approaches: Blue-Green, Canary, and Progressive Rollout

Deploying a new machine learning model into a live environment carries inherent risk. A disciplined deployment strategy mitigates this risk by providing control and a clear rollback path.

- Blue-Green Deployment: This strategy involves maintaining two identical production environments, “Blue” (the current version) and “Green” (the new version). All live traffic is initially directed to Blue. Once the Green environment is ready and tested, the router switches all traffic from Blue to Green. This allows for near-instantaneous rollback by simply switching the traffic back to Blue if issues arise.

- Canary Deployment: In a canary release, the new model version is rolled out to a small subset of users (the “canaries”). The team monitors performance and business metrics for this group. If the new model performs as expected, its exposure is gradually increased until it serves 100% of traffic. This approach minimizes the blast radius of a faulty deployment.

- Progressive Rollout: This is a more general term that encompasses canary and other staged rollouts. The core idea is to incrementally expose the new model version while continuously monitoring its impact. This could involve A/B testing the new model against the old one and using statistical measures to determine a winner before fully committing.
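One common way to implement the traffic split behind a canary or progressive rollout is deterministic hashing of a user identifier. The sketch below assumes requests carry a stable `user_id` string. The names `bucket_for` and `route` and the bucket count are illustrative choices, not part of any particular serving platform.

```python
import hashlib

BUCKETS = 10_000  # granularity of the split: 0.01% per bucket


def bucket_for(user_id: str) -> int:
    """Map a user ID to a stable bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def route(user_id: str, canary_percent: float) -> str:
    """Return "canary" for roughly canary_percent of users, else "stable"."""
    if not 0.0 <= canary_percent <= 100.0:
        raise ValueError("canary_percent must be between 0 and 100")
    threshold = canary_percent / 100.0 * BUCKETS
    return "canary" if bucket_for(user_id) < threshold else "stable"
```

Because the bucket depends only on the user ID, a given user sees the same model version on every request. Raising the percentage only adds users to the canary group and never removes existing ones, and setting it to 0 sends all traffic back to the stable version.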

Monitoring and Observability: Drift Detection and Business Metrics

Deployment is not the finish line. A model’s performance tends to degrade over time as production data moves away from the data it was trained on. A comprehensive monitoring and observability strategy is essential for detecting this degradation and acting on it.

Key areas to monitor include:

- Data and Concept Drift:

  - Data Drift: This occurs when the statistical properties of the input data change. For example, a fraud detection model trained on pre-pandemic data may see its performance drop as consumer spending habits change. Monitoring feature distributions is key to catching this.

  - Concept Drift: This occurs when the relationship between the input features and the target variable changes. The model’s underlying assumptions become invalid. For instance, what constitutes a “spam” email evolves as spammers change their tactics.

- Model Performance Metrics: Track core evaluation metrics (e.g., AUC, log-loss) on live data. This requires a feedback loop to obtain ground truth labels, which may not always be available in real-time.

- Business KPIs: The ultimate measure of a model’s success is its impact on the business. Tie model performance to key business indicators. For a product recommendation model, this could be click-through rate or conversion rate. A drop in the KPI may be the first sign of a model issue.
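Monitoring feature distributions for data drift needs a concrete statistic. One widely used option is the Population Stability Index (PSI), sketched below for a single numeric feature. The choice of PSI, the equal-width binning over the reference range, and the small floor for empty bins are assumptions of this example. Other tests, such as Kolmogorov-Smirnov, are equally valid choices.

```python
import math
from bisect import bisect_right


def psi(reference: list[float], current: list[float], bins: int = 10) -> float:
    """Population Stability Index of `current` relative to `reference`."""
    lo, hi = min(reference), max(reference)
    if lo == hi:
        raise ValueError("reference sample has no spread")
    # Interior bin edges come from the reference range. Values outside
    # that range fall into the first or last bin.
    edges = [lo + (hi - lo) * i / bins for i in range(1, bins)]

    def proportions(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            counts[bisect_right(edges, x)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift. These cutoffs are conventions rather than guarantees, so alert thresholds should be tuned per feature.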

Governance and Ethics: Audit Trails, Explainability, and Bias Controls

As AI becomes more integrated into critical systems, governance and ethics become central to any MLOps practice. A forward-looking strategy for 2025 and beyond must embed these principles directly into the operational workflow.

- Audit Trails and Lineage: Every prediction made by a production model should be logged and traceable. An audit trail should allow you to answer key questions: Which model version made this prediction? What version of the code and data was it trained on? What were the input features? This is critical for debugging, compliance (e.g., GDPR), and building trust.

- Explainability (XAI): For high-stakes decisions, models cannot be “black boxes.” Integrate tools and techniques (e.g., SHAP, LIME) that explain individual predictions. This helps operators understand why a model made a specific decision and provides recourse for affected users.

- Bias and Fairness Controls: Proactively test for and mitigate bias during both development and production monitoring. Define fairness metrics relevant to your use case and establish automated checks to ensure the model does not disproportionately harm specific demographic groups. This aligns with broader responsible AI frameworks.
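An automated fairness check needs a metric to evaluate. As one illustration, the sketch below computes the demographic parity gap, which is the difference between the highest and lowest share of positive predictions across groups. The metric choice and the function names are assumptions made for this example. The right fairness definition depends on the use case, and this gap is only one of several in common use.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

A release pipeline could fail the build when the gap exceeds a threshold agreed with the model's stakeholders, and the same function can run on logged production predictions as part of ongoing monitoring.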

Scaling Practices: Parallelism, Orchestration, and Cost-Aware Design

As your organization’s use of ML grows, your MLOps infrastructure must scale with it. Designing for scale from the beginning prevents future bottlenecks.

- Orchestration: Use workflow orchestration tools (e.g., Kubeflow Pipelines, Airflow) to manage complex, multi-step pipelines. These tools handle dependency management, scheduling, retries, and parallel execution, freeing engineers from manual coordination.

- Parallelism: Design your training and processing jobs to be parallelizable. Techniques like distributed training can dramatically reduce the time it takes to train large models on massive datasets.

- Cost-Aware Design: Cloud resources are not free. Implement cost management best practices, such as using autoscaling compute clusters, leveraging spot instances for non-critical workloads, and setting up budget alerts. Your infrastructure-as-code should specify appropriately sized resources, not overprovisioned ones.
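To show what an orchestrator does with dependencies and parallel execution, here is a toy scheduler built on the standard library's `graphlib` (Python 3.9+). It is a sketch of the idea only. Tools such as Airflow or Kubeflow Pipelines add scheduling, retries, and distributed workers on top. The function `run_pipeline` and its arguments are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter
from typing import Callable


def run_pipeline(
    tasks: dict[str, Callable[[], None]],
    depends_on: dict[str, set[str]],
    max_workers: int = 4,
) -> list[str]:
    """Run tasks in dependency order and return the completion order."""
    graph = {name: depends_on.get(name, set()) for name in tasks}
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    completed: list[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Steps that become ready together do not depend on each
            # other, so each wave can be submitted to the pool at once.
            ready = list(sorter.get_ready())
            futures = {name: pool.submit(tasks[name]) for name in ready}
            for name, future in futures.items():
                future.result()  # re-raises any exception from the task
                completed.append(name)
                sorter.done(name)
    return completed
```

For a linear pipeline the scheduler degenerates to sequential execution. The benefit appears when several steps, such as feature builds for independent data sources, share no dependencies and can run in the same wave.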

Operational Playbook: Routine Checks, Incident Runbooks, and Team Handoffs

An operational playbook codifies your team’s processes for managing models in production. It turns reactive firefighting into proactive management.

- Routine Checks: Schedule regular reviews of model performance dashboards. This could be a meeting held weekly or every two weeks where the on-call engineer or model owner presents key metrics and flags any worrying trends.

- Incident Runbooks: When a monitoring alert fires (e.g., “Significant Data Drift Detected”), the on-call person should have a step-by-step guide (a runbook) to follow. This document should include:

  - What the alert means.

  - Initial diagnostic steps (e.g., queries to run, dashboards to check).

  - Escalation procedures (who to contact and when).

  - Standard remediation actions (e.g., triggering a retraining pipeline, initiating a model rollback).

- Team Handoffs: Clearly define model ownership. When a data scientist hands a model over to an ML engineer for productionization, there should be a formal process that includes documentation, a review of the validation results, and a walkthrough of the training pipeline.
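Keeping runbooks as structured data, versioned alongside the code, makes it possible to link each alert directly to its guide. The sketch below mirrors the four runbook elements listed above. The `Runbook` class, the `data_drift_detected` alert key, and the example steps are all hypothetical placeholders rather than a recommended procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Runbook:
    meaning: str                  # what the alert means
    diagnostics: tuple[str, ...]  # initial diagnostic steps
    escalation: tuple[str, ...]   # who to contact and when
    remediation: tuple[str, ...]  # standard remediation actions


# Illustrative content only; real entries come from the owning team.
RUNBOOKS = {
    "data_drift_detected": Runbook(
        meaning="Input feature distributions differ from the training data.",
        diagnostics=(
            "Open the feature distribution dashboard.",
            "Compare live and training samples for the flagged feature.",
        ),
        escalation=("Notify the model owner if live metrics have also dropped.",),
        remediation=(
            "Trigger the retraining pipeline.",
            "Initiate a model rollback.",
        ),
    ),
}


def runbook_for(alert_name: str) -> Runbook:
    """Look up the runbook for an alert, failing loudly if none exists."""
    try:
        return RUNBOOKS[alert_name]
    except KeyError:
        raise LookupError(f"no runbook registered for alert {alert_name!r}") from None
```

A simple test that every configured alert name resolves through `runbook_for` catches the case where a new alert ships without a guide for the on-call engineer.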

Templates and Checklists: Model Release, Rollback, and Retirement

Use standardized templates to ensure consistency and completeness across all your ML projects.

Model Release Checklist

- [ ] Code is peer-reviewed and merged to the main branch.

- [ ] All unit and integration tests are passing.

- [ ] Data validation tests on the training dataset have passed.

- [ ] Model performance on the holdout set exceeds the production baseline.

- [ ] Fairness and bias analysis has been conducted and reviewed.

- [ ] Model artifact is versioned and saved in the model registry.

- [ ] Deployment configuration (e.g., canary percentage) is reviewed and approved.

- [ ] Monitoring dashboards and alerts for the new version are configured.

Model Rollback Plan

- Detection: A critical issue is identified via an alert or manual observation (e.g., spike in prediction errors, drop in business KPI).

- Decision: The on-call engineer and model owner confirm the issue is tied to the new model and approve the rollback.

- Execution: The deployment system is instructed to revert to the previous stable model version (e.g., by switching traffic in a blue-green setup or scaling the canary to 0%).

- Post-mortem: Conduct a root cause analysis to understand why the issue was not caught before release.
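The detection and execution steps of the rollback plan can be supported by a small helper that flags a candidate for rollback and computes the resulting traffic split. This is a sketch under stated assumptions: the `HealthSnapshot` fields, the tolerance defaults, and both function names are invented for illustration, and the decision itself still rests with the on-call engineer and model owner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HealthSnapshot:
    error_rate: float  # share of failed or invalid predictions, 0 to 1
    kpi: float         # business KPI for the same window, e.g. conversion rate


def should_roll_back(
    baseline: HealthSnapshot,
    candidate: HealthSnapshot,
    max_error_increase: float = 0.02,
    max_kpi_drop_ratio: float = 0.10,
) -> bool:
    """Flag a rollback when errors rise or the KPI falls beyond tolerance."""
    error_spike = candidate.error_rate - baseline.error_rate > max_error_increase
    kpi_drop = (
        baseline.kpi > 0
        and (baseline.kpi - candidate.kpi) / baseline.kpi > max_kpi_drop_ratio
    )
    return error_spike or kpi_drop


def traffic_after_decision(roll_back: bool, canary_percent: float) -> dict[str, float]:
    """Traffic weights after the decision; a rollback scales the canary to 0%."""
    canary = 0.0 if roll_back else canary_percent
    return {"stable": 100.0 - canary, "canary": canary}
```

Comparing the candidate against the stable version over the same time window, rather than against a fixed number, keeps seasonal swings in the KPI from triggering false rollbacks.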

Model Retirement Checklist

- [ ] A replacement model has been successfully deployed and validated.

- [ ] All upstream systems have been redirected to the new model’s API endpoint.

- [ ] A final monitoring period confirms the old model is receiving no more traffic.

- [ ] The model’s serving infrastructure is decommissioned.

- [ ] The model artifact is archived (not deleted) in the model registry.

- [ ] Team documentation is updated to reflect the retirement.

Case Vignette: End-to-End Sample

A financial services company developed a model to predict customer churn. Their MLOps process followed this path: After initial development, the model was committed to a CI/CD pipeline. The pipeline automatically ran data validation checks on the training data, trained the model, and then evaluated it against the current champion model. Upon passing, it was packaged in a Docker container and deployed using a canary strategy, initially serving 10% of traffic. The monitoring dashboard tracked both model accuracy and the actual churn rate of the canary group. After three weeks, an alert fired for data drift in the “average transaction value” feature. The on-call ML engineer consulted the runbook, confirmed the drift was impacting model performance, and initiated a controlled rollback to the previous version while the data science team investigated the root cause. This disciplined MLOps practice prevented a significant business impact and provided a clear path to remediation.

Appendix: Reproducible Pipeline Snippets and Schema Templates

To make these concepts concrete, here are simplified, language-agnostic examples of what these artifacts might look like.

Example Pipeline Definition (in a YAML-like format)

    name: churn_model_training_pipeline
    trigger: on_new_data_commit
    steps:
      - name: data_validation
        script: run_data_tests.py
        inputs:
          - data/raw_customer_data.csv
        params:
          - schemas/customer_schema.json
      - name: feature_engineering
        script: build_features.py
        inputs:
          - data/raw_customer_data.csv
        outputs:
          - data/features.parquet
      - name: model_training
        script: train_model.py
        inputs:
          - data/features.parquet
        outputs:
          - models/churn_predictor.pkl
      - name: model_validation
        script: evaluate_model.py
        inputs:
          - models/churn_predictor.pkl
        params:
          - performance_threshold: 0.85 # AUC

Example Data Schema Template (in a JSON-like format)

    {
      "dataset_name": "customer_data_v1",
      "features": [
        { "name": "customer_id", "type": "string", "required": true },
        { "name": "age", "type": "integer", "min_value": 18, "max_value": 100 },
        { "name": "account_balance", "type": "float", "allow_null": false },
        { "name": "account_type", "type": "string", "allowed_values": ["checking", "savings", "investment"] }
      ]
    }

Further Reading and Resources

This guide serves as a starting point. The field of MLOps is vast and constantly evolving. To deepen your understanding, explore these foundational topics.

- MLOps Overview: A comprehensive introduction to the principles and terminology of Machine Learning Operations.

- DataOps: Explore the principles of DataOps, which focuses on automating and managing data pipelines, a critical dependency for any MLOps system.

- AIOps: Learn about AIOps (AI for IT Operations), which involves using machine learning to automate and improve IT operations, sometimes complementing MLOps tooling.