Mastering Artificial Intelligence Deployment: A 2025 Playbook

This guide provides a comprehensive, deployment-first playbook for technical leaders, AI engineers, and data architects. It moves beyond theoretical models to offer a structured approach to successful Artificial Intelligence Deployment, combining architectural patterns, operational checklists, and governance frameworks essential for delivering real-world value.

Table of Contents

- Introduction: Why Deployment Shapes AI Value

- Readiness Assessment: Data, Infrastructure, and Skills

- Deployment Architecture Options: Batch, Streaming, and Edge

- Model Packaging and Reproducibility Practices

- Orchestration and Scalable Serving Patterns

- Data Pipelines, Feature Stores, and Lineage

- Monitoring, Observability, and Drift Detection

- Governance, Auditing, and Responsible AI Controls

- Security and Access Management for Live Models

- Performance Optimization and Cost Awareness

- Operator Workflows and Runbooks

- Common Deployment Failures and Recovery Strategies

- Deployment Checklist: Pre-Launch and Post-Launch

- Appendix: Sample Configuration and Schema

- Further Reading and Implementation Resources

Introduction: Why Deployment Shapes AI Value

An AI model, no matter how accurate in a development environment, delivers no business value until it runs in production. Artificial Intelligence Deployment is the bridge between experimental research and operational impact. It is where algorithms meet reality: handling live data, serving user requests, and integrating with existing business processes. A flawed deployment strategy leads to technical debt, poor performance, and missed ROI. A robust, scalable, and well-monitored deployment practice, by contrast, keeps AI initiatives reliable, sustainable, and able to adapt as data and requirements change.

Readiness Assessment: Data, Infrastructure, and Skills

Before embarking on any Artificial Intelligence Deployment, a thorough readiness assessment is crucial. This foundational step prevents costly rework and aligns technical efforts with organizational capabilities.

Data Integrity and Governance

Data is the lifeblood of any AI system. Ensure your data foundation is solid by assessing:

- Data Quality: Check for completeness, accuracy, consistency, and timeliness. Poor quality data in production will cripple model performance.

- Data Accessibility: Can production systems access the necessary data sources with appropriate permissions and low latency?

- Governance and Compliance: Are data handling practices compliant with regulations like GDPR or CCPA? Adhering to the FAIR Principles (Findable, Accessible, Interoperable, Reusable) provides a strong framework for data governance.
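
The data quality dimensions above can be turned into numbers that a readiness review can track. The following is a minimal sketch, assuming rows arrive as dictionaries with a timezone-aware timestamp; the function name `quality_report` and its parameters are hypothetical, and a real assessment would add accuracy and consistency checks specific to the dataset.

```python
from datetime import datetime, timedelta, timezone


def quality_report(rows, required_fields, timestamp_field, max_age, now=None):
    """Return completeness and timeliness ratios for a list of dict rows.

    completeness: share of rows where every required field is present (not None)
    timeliness:   share of rows whose timestamp is no older than max_age
    """
    now = now or datetime.now(timezone.utc)
    total = len(rows)
    if total == 0:
        return {"completeness": 0.0, "timeliness": 0.0}

    complete = sum(
        all(row.get(field) is not None for field in required_fields)
        for row in rows
    )
    fresh = sum(
        row.get(timestamp_field) is not None
        and now - row[timestamp_field] <= max_age
        for row in rows
    )
    return {"completeness": complete / total, "timeliness": fresh / total}
```

A team might agree on thresholds for these ratios (for example, a minimum completeness per source) before a model is allowed to depend on that source in production.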

Infrastructure and Tooling

Your infrastructure must support the entire MLOps lifecycle, from training to serving. Key considerations include:

- Compute Resources: Evaluate the availability of CPUs, GPUs, or other specialized hardware for both training and inference.

- Scalability: Does the platform (e.g., Kubernetes, serverless functions) support auto-scaling to handle variable loads?

- MLOps Stack: Have you selected and integrated tools for experimentation tracking, model registry, orchestration, and monitoring?

Team Skills and Structure

Successful deployment requires a multi-disciplinary team. An honest skills gap analysis is essential. Required roles often include:

- AI/ML Engineers: Design and build models, but also understand software engineering principles for production-grade code.

- Data Engineers: Build and maintain robust, scalable data pipelines.

- MLOps/DevOps Engineers: Manage CI/CD pipelines, infrastructure, and deployment automation.

- Data Architects: Design the overall data ecosystem, including feature stores and data lakes.

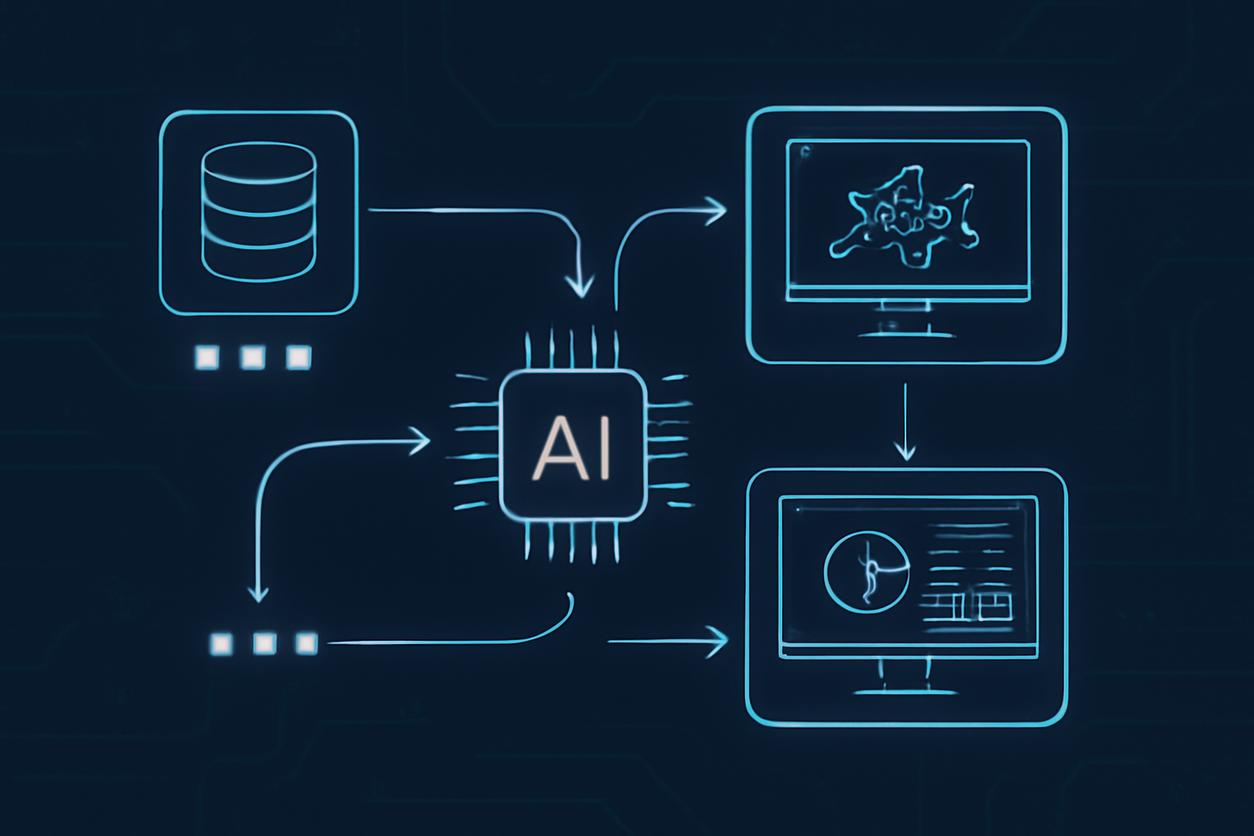

Deployment Architecture Options: Batch, Streaming, and Edge

The choice of deployment architecture depends primarily on the use case's requirements for latency, scale, and connectivity. Most strategies for Artificial Intelligence Deployment in 2025 and beyond fall into three main categories.

Batch Deployment (Asynchronous)

In this pattern, predictions are generated on a schedule (e.g., hourly, daily) for a large set of inputs at once. The results are stored in a database or data warehouse for later use.

- Best for: Non-real-time applications like lead scoring, customer segmentation, and demand forecasting.

- Pros: High throughput, cost-effective, computationally efficient.

- Cons: High latency; predictions are not available on-demand.
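
As an illustration of the batch pattern, the sketch below scores a large input set in fixed-size chunks and collects the results for later storage. It is a simplified outline under stated assumptions: `predict_many` stands in for whatever vectorized prediction call the model exposes, each record is assumed to carry an `id` key, and a scheduler (not shown) would trigger the job.

```python
from itertools import islice


def batched(iterable, size):
    """Yield successive lists of at most `size` items."""
    iterator = iter(iterable)
    while chunk := list(islice(iterator, size)):
        yield chunk


def score_batch_job(records, predict_many, batch_size=1000):
    """Score all records chunk by chunk and return rows ready to be stored.

    predict_many is a hypothetical callable: list of records -> list of scores.
    """
    results = []
    for chunk in batched(records, batch_size):
        scores = predict_many(chunk)
        results.extend(
            {"id": record["id"], "score": score}
            for record, score in zip(chunk, scores)
        )
    return results
```

Chunking keeps memory use bounded and lets the model amortize per-call overhead, which is where the throughput advantage of batch scoring comes from.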

Streaming Deployment (Synchronous/Real-Time)

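The model is exposed as an API endpoint (often a REST or gRPC service) that returns predictions on demand for single or small-batch inputs. This request-response pattern is often called online serving; it is distinct from stream processing, where a model consumes events from a message queue. It is the most common pattern for interactive applications.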

- Best for: Fraud detection, real-time recommendations, and dynamic pricing.

- Pros: Low latency, provides immediate predictions.

- Cons: Can be more complex to scale and manage; requires a highly available serving infrastructure.
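
To make the request-response pattern concrete, here is a framework-agnostic sketch of a prediction handler. The field names and limits mirror the sample JSON schema in the appendix; the function names, the status codes chosen, and the `predict` callable are assumptions for illustration, and a real service would wrap this in a web framework or gRPC server.

```python
import json

# Validation rules mirroring the appendix schema: (accepted types, range check).
RULES = {
    "applicant_age": (int, lambda v: v >= 18),
    "loan_amount": ((int, float), lambda v: v > 0),
    "credit_score": (int, lambda v: 300 <= v <= 850),
}


def validate_loan_request(payload):
    """Return a list of human-readable validation errors (empty if valid)."""
    errors = []
    for field, (types, in_range) in RULES.items():
        value = payload.get(field)
        # bool is a subclass of int in Python, so reject it explicitly.
        if value is None or isinstance(value, bool) or not isinstance(value, types):
            errors.append(f"{field}: missing or wrong type")
        elif not in_range(value):
            errors.append(f"{field}: out of range")
    return errors


def handle_predict(body, predict):
    """Parse a JSON request body, validate it, and return (status, response)."""
    try:
        payload = json.loads(body)
    except ValueError:
        return 400, {"errors": ["body is not valid JSON"]}
    if not isinstance(payload, dict):
        return 400, {"errors": ["body must be a JSON object"]}
    errors = validate_loan_request(payload)
    if errors:
        return 422, {"errors": errors}
    return 200, {"prediction": predict(payload)}
```

Keeping the handler a pure function of the request body makes it easy to unit test and to reuse behind either a REST or a gRPC front end.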

Edge Deployment

The model is deployed directly onto an end-user device, such as a smartphone, vehicle, or IoT sensor. Inference happens locally without needing to call a central server.

- Best for: Applications requiring ultra-low latency, offline functionality, or data privacy, like autonomous vehicles or on-device voice assistants.

- Pros: Minimal latency, works without an internet connection, enhances data privacy.

- Cons: Constrained by device compute and memory; model updates are more complex to roll out.

Model Packaging and Reproducibility Practices

For a deployment to be reliable, it must be reproducible. This means packaging not just the model weights, but the entire environment required to run it.

- Containerization: Using tools like Docker is the industry standard. A container bundles the model artifact, inference code, and all system dependencies into a single, portable image. This greatly reduces environment differences across development, testing, and production, although host-level factors such as GPU drivers and unpinned dependency versions can still cause divergence.

- Model Versioning: Every model pushed to production should be versioned and stored in a model registry (e.g., MLflow, SageMaker Model Registry). This registry should link the model version to the training code, dataset, and hyperparameters used to create it.

- Standardized Formats: Using interoperable formats like ONNX (Open Neural Network Exchange) decouples the model from its training framework, allowing for more flexible and optimized serving.
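
One way to picture the link between a model version and its training inputs is a small manifest stored next to the artifact. The sketch below is not the MLflow or SageMaker API; it is a hand-rolled illustration with hypothetical field names, showing a content hash that lets a serving process confirm it loaded the exact artifact that was registered.

```python
import hashlib


def sha256_bytes(data: bytes) -> str:
    """Return the hex SHA-256 digest of raw bytes."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(model_bytes, version, code_commit, dataset_id, hyperparameters):
    """Describe one model version and the inputs that produced it."""
    return {
        "version": version,
        "model_sha256": sha256_bytes(model_bytes),
        "code_commit": code_commit,        # e.g. a Git commit hash
        "dataset_id": dataset_id,          # identifier of the training snapshot
        "hyperparameters": hyperparameters,
    }


def verify_artifact(model_bytes, manifest):
    """Check that the loaded artifact matches the registered one."""
    return sha256_bytes(model_bytes) == manifest["model_sha256"]
```

A registry product typically records the same relationships for you; the value of the sketch is only to show which pieces of information need to travel together.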

Orchestration and Scalable Serving Patterns

Serving a model to one user is simple; serving it to millions requires sophisticated orchestration and scaling strategies.

- Container Orchestration: Kubernetes has become the de facto standard for managing and scaling containerized applications, including AI models. It handles service discovery, load balancing, and self-healing.

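- Dedicated Serving Frameworks: KServe and Seldon Core are Kubernetes-native serving platforms, while BentoML is a Python framework for packaging models as services that can be deployed to Kubernetes and other targets. Depending on the tool, they provide ML-specific features such as model explainability, outlier detection, and complex inference graphs out of the box.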

- Deployment Strategies for 2025: Move beyond simple deployments. Use canary releases to route a small fraction of traffic to a new model version to test its performance before a full rollout. Implement A/B testing to compare the business impact of two or more models in a live environment.
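
A canary release needs a rule for deciding which requests see the new version. The sketch below shows one common approach, hashing a stable key so that the same user is always routed the same way; the function name and the choice of SHA-256 are illustrative assumptions, and in practice a service mesh or serving framework usually performs this split.

```python
import hashlib


def route_model(request_key: str, canary_percent: float) -> str:
    """Deterministically assign a request to the 'canary' or 'stable' model.

    request_key    a stable identifier such as a user or session ID
    canary_percent share of traffic for the canary, from 0 to 100
    """
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    # Map the key to one of 10,000 buckets (0.01% granularity).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "canary" if bucket < canary_percent * 100 else "stable"
```

Because routing depends only on the key, raising `canary_percent` from 5 to 20 keeps the original 5% of users on the canary and adds new ones, which keeps per-user experience stable during a rollout. The same function can serve as the assignment rule for an A/B test.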

Data Pipelines, Feature Stores, and Lineage

The operational backbone of any production AI system is its data infrastructure. A robust Artificial Intelligence Deployment relies heavily on automated and reliable data flows.

Automated Data Pipelines

Production data pipelines must be automated, fault-tolerant, and monitored. They feed the model with fresh data for inference and are also used in retraining loops. Orchestration tools such as Apache Airflow, Prefect, or cloud-native services (e.g., AWS Step Functions, or Google Cloud Composer, which is a managed Airflow service) are common choices.

Feature Stores

A feature store is a centralized repository for storing, retrieving, and managing ML features. Its primary purpose is to solve the problem of training-serving skew by ensuring that the exact same feature engineering logic is used during both model training and real-time inference. This is a cornerstone of reliable MLOps.
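
The core idea behind avoiding training-serving skew can be shown without any feature store product: both paths call the same feature function. This is a minimal sketch; the feature names, the 670 credit threshold, and the bucketing rule are hypothetical, and a feature store adds storage, point-in-time lookups, and low-latency retrieval on top of this principle.

```python
import math


def build_features(raw):
    """Single source of truth for feature logic, used by both paths below."""
    return {
        "log_loan_amount": math.log1p(raw["loan_amount"]),
        "is_prime_credit": int(raw["credit_score"] >= 670),  # illustrative cutoff
        "age_bucket": min(raw["applicant_age"] // 10, 8),
    }


def training_rows(history):
    """Offline path: transform historical records into training features."""
    return [build_features(record) for record in history]


def serving_features(request):
    """Online path: transform one live request with the same logic."""
    return build_features(request)
```

Skew typically appears when the offline path is written in one language or tool (for example SQL) and the online path is re-implemented separately; sharing one definition removes that source of divergence.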

Data and Model Lineage

Lineage tracking provides a complete audit trail of your AI system. It answers critical questions like: “Which dataset was used to train this specific model version?” and “Which features are being served by this API endpoint?”. This is indispensable for debugging, compliance, and governance.

Monitoring, Observability, and Drift Detection

Once deployed, a model’s job is just beginning. Continuous monitoring is non-negotiable for maintaining performance and reliability.

- Operational Monitoring: Track standard software metrics like latency, throughput (QPS), CPU/GPU utilization, and error rates. Set up alerts for anomalies.

- Model Performance Monitoring: If ground truth labels are available, track accuracy, precision, recall, or other relevant business metrics over time.

- Drift Detection: Watch for two kinds of drift, because either one can degrade a model without any operational alert firing.

  - Data Drift: Occurs when the statistical properties of the input data change (e.g., a new category of user appears). This signals that the model is seeing data it was not trained on.

  - Concept Drift: Occurs when the relationship between inputs and outputs changes (e.g., user preferences shift due to external events).

Implement statistical monitoring on input features and model predictions to automatically detect drift and trigger alerts or retraining pipelines.
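
One widely used statistic for this kind of monitoring is the Population Stability Index (PSI), which compares the binned distribution of a feature in production against a training baseline. The sketch below is one possible implementation using equal-width bins derived from the baseline; the function name, the bin count, and the smoothing constant `eps` are assumptions, and other tests (such as Kolmogorov-Smirnov) are equally valid choices.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a live sample.

    Bins are equal-width over the baseline range; live values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A common rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but these cutoffs are conventions rather than guarantees and should be calibrated per feature before they trigger retraining.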

Governance, Auditing, and Responsible AI Controls

As AI becomes more pervasive, governance and responsible practices are moving from a “nice-to-have” to a legal and ethical necessity. A mature Artificial Intelligence Deployment strategy must incorporate these controls from the start.

- Model Explainability: Use tools like SHAP or LIME to understand and explain individual model predictions. This is crucial for debugging, building user trust, and meeting regulatory requirements.

- Bias and Fairness Audits: Proactively test models for bias across different demographic subgroups before and after deployment.

- Documentation and Transparency: Maintain “model cards” or similar documentation that details a model’s intended use, performance characteristics, and limitations.

- Regulatory Frameworks: Be aware of and design for compliance with emerging regulations and standards, such as the EU AI Act, and follow best practices outlined in frameworks like the NIST AI Risk Management Framework and the OECD AI Principles.

Security and Access Management for Live Models

A deployed AI model is a software asset that must be secured like any other production service.

- Endpoint Security: Secure API endpoints with authentication and authorization. Use API gateways to manage rate limiting and access control.

- Data Security: Encrypt sensitive data both in transit (TLS) and at rest. Ensure the model cannot inadvertently leak personally identifiable information (PII).

- Access Control: Implement Role-Based Access Control (RBAC) to manage who can deploy, update, or access models and their underlying data.

- Vulnerability Scanning: Regularly scan container images and code dependencies for known security vulnerabilities.
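
The endpoint security and RBAC points above can be combined into a single authorization check. The following sketch assumes API keys are stored only as SHA-256 hashes mapped to roles; the role names, permission sets, and function names are hypothetical, and production systems usually delegate this to an API gateway or identity provider.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping for a model service.
ROLE_PERMISSIONS = {
    "viewer": {"predict"},
    "ml_engineer": {"predict", "deploy", "rollback"},
}


def hash_key(api_key: str) -> str:
    """Hash an API key so the raw secret never needs to be stored."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def authorize(api_key: str, action: str, key_store: dict) -> bool:
    """Return True if the key is known and its role permits the action.

    key_store maps sha256(api_key) -> role name.
    """
    presented = hash_key(api_key)
    for stored_hash, role in key_store.items():
        # compare_digest runs in constant time to resist timing attacks.
        if hmac.compare_digest(presented, stored_hash):
            return action in ROLE_PERMISSIONS.get(role, set())
    return False
```

Separating "who is calling" (the key lookup) from "what may they do" (the role table) is what allows prediction access to be granted broadly while deploy and rollback stay restricted.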

Performance Optimization and Cost Awareness

An effective model must meet performance requirements (latency, throughput) within an acceptable cost envelope.

- Model Optimization: Techniques like quantization (reducing numerical precision) and pruning (removing unnecessary model weights) can substantially reduce model size and speed up inference. The accuracy impact is often small but varies by model and task, so measure it on a held-out set before rollout.

- Hardware Acceleration: Utilize specialized hardware like GPUs or TPUs for computationally intensive models.

- Cost Management: Employ cloud cost optimization strategies such as auto-scaling to match resources to demand, using spot instances for non-critical batch workloads, and right-sizing compute instances to avoid over-provisioning.
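
To show what "reducing numerical precision" means in practice, the sketch below applies symmetric 8-bit quantization to a list of weights in pure Python. It is a teaching example with hypothetical function names; real deployments rely on the quantization tooling of their framework or runtime, which also handles activations and calibration.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # guard against all-zero input
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]
```

Each weight is stored in one byte instead of four, and the rounding error per weight is bounded by half the scale. Whether that error is acceptable is an empirical question for each model.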

Operator Workflows and Runbooks

Do not wait for a production incident to figure out how to respond. Runbooks are detailed, step-by-step guides for handling common operational tasks and emergency situations.

Essential runbooks for an AI service include:

- Model Retraining and Deployment: A standardized workflow for promoting a new model to production.

- Model Rollback: A one-click or automated procedure to revert to a previous, stable model version if the new one underperforms or fails.

- Alert Response: Clear instructions for on-call engineers on how to diagnose and mitigate alerts related to high latency, errors, or model drift.
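
A rollback runbook is easier to automate when the promotion history is explicit. The sketch below models that history in memory; the class and method names are hypothetical, and a real setup would persist this state in the model registry and update the serving layer as part of the same step.

```python
class ModelRegistry:
    """Tracks which model versions have been promoted to production."""

    def __init__(self):
        self._history = []  # promoted versions, oldest first

    @property
    def live(self):
        """The version currently serving traffic, or None if nothing is deployed."""
        return self._history[-1] if self._history else None

    def promote(self, version):
        """Make a new version live, keeping the previous one for rollback."""
        self._history.append(version)

    def rollback(self):
        """Revert to the previous version; return (failed, restored)."""
        if len(self._history) < 2:
            raise RuntimeError("no previous version to roll back to")
        failed = self._history.pop()
        return failed, self.live
```

For example, after `promote("v1")` and `promote("v2")`, calling `rollback()` returns `("v2", "v1")` and leaves `v1` live. Testing this path before launch is what makes the "one-click" promise credible.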

Common Deployment Failures and Recovery Strategies

Anticipating failure is key to building resilient systems. Here are common failure modes in Artificial Intelligence Deployment and how to recover:

- Training-Serving Skew:

  - Symptom: Model performance in production is significantly worse than in offline evaluation.

  - Recovery: Implement a feature store so that training and serving use the same feature logic. Add data validation steps to the inference pipeline to catch discrepancies.

- Model Staleness (Drift):

  - Symptom: Model performance degrades slowly over time.

  - Recovery: Use drift detection monitoring to trigger an automated retraining pipeline with fresh data.

- Upstream Data Failure:

  - Symptom: The model's input data pipeline breaks or provides corrupt data.

  - Recovery: Implement data quality checks and circuit breakers in the pipeline. Have a fallback strategy, such as serving default predictions or a simpler model.
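
The circuit breaker and fallback strategy mentioned for upstream failures can be sketched as follows. This is a simplified illustration with hypothetical names and thresholds: after a set number of consecutive failures the breaker stops calling the primary path for a cooling-off period and serves the fallback instead.

```python
import time


class CircuitBreaker:
    """Route calls to a fallback while the primary path is failing."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before retrying primary
        self.clock = clock              # injectable for testing
        self.failures = 0
        self.opened_at = None           # None means the circuit is closed

    def call(self, primary, fallback, *args):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback(*args)  # circuit open: skip the primary entirely
            # Half-open: allow one trial call; a single failure reopens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = primary(*args)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback(*args)
        self.failures = 0
        return result
```

Here `primary` might be the full model fed by the live feature pipeline and `fallback` a default prediction or a simpler model, so callers always receive an answer while the upstream issue is diagnosed.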

Deployment Checklist: Pre-Launch and Post-Launch

Use this compact checklist to ensure a disciplined and thorough deployment process.

Pre-Launch Validation Checklist

- [ ] Model code and training scripts are version-controlled.

- [ ] Model artifact is versioned and stored in a model registry.

- [ ] Container image is built and stored in a container registry.

- [ ] Security scans for vulnerabilities have passed.

- [ ] Load and performance tests meet latency and throughput requirements.

- [ ] Data validation schemas for inputs and outputs are in place.

- [ ] Monitoring dashboards and alerts are configured.

- [ ] Rollback procedure has been tested.

- [ ] Model documentation (e.g., model card) is complete.

Post-Launch Routine Checklist

- [ ] (Day 1) Monitor dashboards closely for anomalies in operational and performance metrics.

- [ ] (Week 1) Review model prediction distributions and compare against training data.

- [ ] (Weekly) Check drift detection metrics.

- [ ] (Monthly) Review resource utilization and costs; adjust scaling policies.

- [ ] (Quarterly) Evaluate if the model still meets business objectives and consider a full retrain or rebuild.

Appendix: Sample Configuration and Schema

Sample Dockerfile for Model Packaging

    # Use a specific, versioned base image
    FROM python:3.9-slim

    # Set a working directory
    WORKDIR /app

    # Copy requirements and install dependencies
    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    # Copy model artifact and inference code
    COPY ./model /app/model
    COPY ./serve.py /app/serve.py

    # Expose the port the app runs on
    EXPOSE 8080

    # Define the command to run the application
    CMD ["python", "serve.py"]

Sample JSON Schema for Input Validation

    {
      "title": "LoanApplicationRequest",
      "type": "object",
      "properties": {
        "applicant_age": { "type": "integer", "minimum": 18 },
        "loan_amount": { "type": "number", "exclusiveMinimum": 0 },
        "credit_score": { "type": "integer", "minimum": 300, "maximum": 850 }
      },
      "required": ["applicant_age", "loan_amount", "credit_score"]
    }

Further Reading and Implementation Resources

The field of Artificial Intelligence Deployment is constantly evolving. Stay informed by consulting these authoritative resources:

- Research Papers: For the latest advancements in MLOps and machine learning systems, browse the Machine Learning (cs.LG) listing in the Computer Science section of arXiv.

- AI Governance and Risk: Understand the principles of safe and trustworthy AI through the NIST AI Risk Management Framework.

- Global AI Principles: For high-level policy and ethical guidelines, refer to the OECD AI Principles.

- Regulatory Landscape: Keep track of legal developments like the EU AI Act.