Executive summary — Key takeaways and scope

Neural networks are computational models loosely inspired by the structure and function of the human brain, and they form the backbone of modern deep learning. This whitepaper gives technical leaders and practitioners a consolidated guide to the principles, architectures, and practical deployment of these systems. We move beyond introductory concepts to focus on actionable guidance for designing, training, and maintaining robust and interpretable neural networks. Key takeaways include the critical role of inductive biases in architecture selection, the necessity of rigorous training and regularization strategies, and the growing importance of model interpretability. We conclude with a deployment checklist and a perspective on responsible AI governance, providing a framework for using neural networks effectively and ethically.

What neural networks internalize — Representations and abstraction

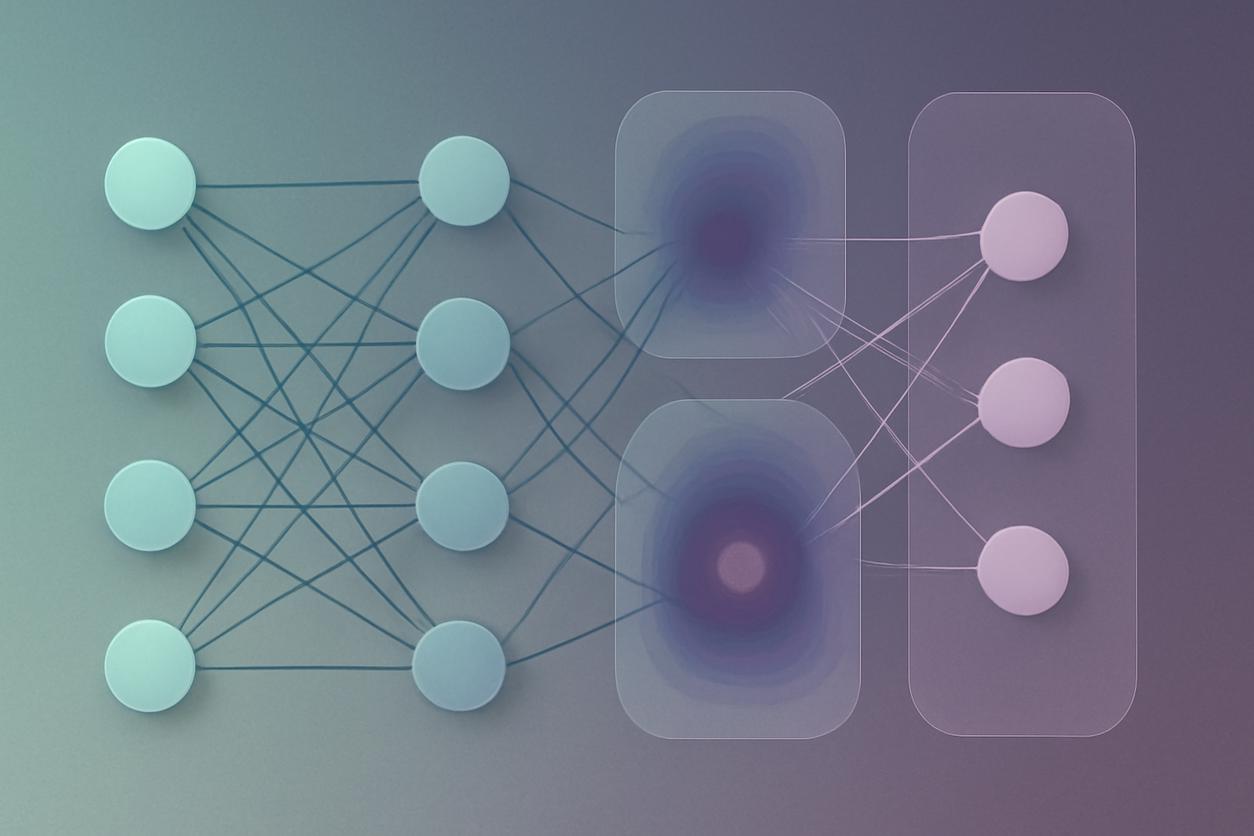

At their core, neural networks are powerful feature extractors. Unlike traditional machine learning, where features are typically hand-engineered, neural networks learn a hierarchy of representations directly from data. In the initial layers, the network might learn to detect simple patterns like edges, textures, or color gradients. As data propagates through subsequent layers, these simple features are combined to form more complex and abstract concepts. For an image recognition model, this could mean progressing from edges to shapes like eyes and noses, and finally to the abstract representation of a complete face.

This process is known as representation learning. The quality of these learned representations largely determines the model’s performance. A well-trained neural network internalizes a compressed, salient, and hierarchical understanding of the input data distribution. This ability to automatically build layers of abstraction is what allows neural networks to tackle complex, high-dimensional problems in domains like computer vision, natural language processing, and scientific discovery, where manual feature engineering is infeasible. Training makes this possible: backpropagation computes the gradient of the loss with respect to every parameter, and an optimizer uses those gradients to adjust the parameters iteratively, improving the representations step by step.

Reinterpreting core architectures

The architecture of a neural network is not a generic template but a deliberate choice that embeds assumptions about the data. This pre-programmed assumption, or inductive bias, is crucial for efficient learning. Selecting the right architecture is about aligning the model’s inherent biases with the underlying structure of the problem.

Convolutional architectures: spatial hierarchies and inductive biases

Convolutional Neural Networks (CNNs) are a cornerstone of computer vision for a reason: their architecture is built on strong inductive biases for spatial data. These biases include:

- Locality: The assumption that pixels close to each other are more strongly related than distant ones. Convolutions operate on small, local patches of an image, capturing local patterns efficiently.

- Translation Equivariance: Shifting the input shifts the resulting feature map by the same amount, so a feature learned in one part of an image (e.g., a cat’s ear) is detected wherever it appears. Sharing the same kernel weights across all positions enforces this property and makes the model highly parameter-efficient.

These biases guide the network to learn a spatial hierarchy of features, from simple edges to complex objects. While powerful, this strong bias means CNNs may be less effective for data that lacks a clear spatial or grid-like structure.
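
Both biases can be seen in a minimal sketch of a 1D convolution, written here in plain Python rather than with a deep learning library. The `conv1d` helper, the two-tap kernel, and the toy signal are illustrative assumptions: the same small kernel is applied to every local window (locality and weight sharing), and shifting the input shifts the output by the same amount (translation equivariance).

```python
def conv1d(signal, kernel):
    """Valid cross-correlation: slide one shared kernel over every local window."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

# Hypothetical edge-detecting kernel; the same two weights are reused at every position.
kernel = [-1.0, 1.0]

signal = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
shifted = [0.0, 0.0] + signal[:-2]  # the same pattern moved two steps to the right

out = conv1d(signal, kernel)
out_shifted = conv1d(shifted, kernel)

# Equivariance: the response to the shifted input is the shifted response.
print(out_shifted == [0.0, 0.0] + out[:-2])
```

The layer has only two parameters regardless of input length, which is the parameter efficiency that weight sharing buys.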

Attention and transformer paradigms: strengths and blind spots

The Transformer architecture, introduced in the seminal paper “Attention Is All You Need,” revolutionized natural language processing by removing the sequential processing constraints of recurrent neural networks. Its core mechanism, self-attention, allows the model to weigh the importance of every other element in a sequence when processing a single element. This enables the modeling of long-range dependencies, which earlier recurrent models struggled to capture.

The inductive bias of Transformers is weaker than that of CNNs; they assume very little about the data’s structure, instead learning relationships directly. This flexibility is their greatest strength, allowing them to excel on diverse data types beyond text, including images and time-series. However, this comes with blind spots. The computational complexity of self-attention is quadratic with respect to sequence length, making it resource-intensive for very long sequences. Furthermore, their weaker biases mean they often require significantly more data than CNNs to achieve comparable performance on tasks with strong spatial structure.
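
To make the mechanism and its cost concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It deliberately omits the learned query, key, and value projections and the multiple heads of a real Transformer layer, so the token vectors are used directly; the function names and toy inputs are assumptions for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention; returns outputs and the attention weights."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # One score per (query, key) pair: n * n scores in total for n tokens.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append(
            [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        )
    return outputs, weights

# Three toy token embeddings (hypothetical values), attending to themselves.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

The `weights` matrix has one row and one column per token, which is where the quadratic cost in sequence length comes from.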

Graph neural approaches: encoding relational structure

Many real-world datasets are not sequences or grids but are best represented as graphs—collections of nodes and edges. Examples include social networks, molecular structures, and knowledge graphs. Graph Neural Networks (GNNs) are specifically designed to operate on this type of data. Their inductive bias is rooted in the relational structure of the graph. GNNs work via a process called message passing, where each node aggregates information from its neighbors. By stacking these layers, a node can incorporate information from increasingly larger neighborhoods, effectively learning a representation based on its position and context within the graph. This makes GNNs uniquely suited for tasks like node classification, link prediction, and graph classification.
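
A single message-passing layer can be sketched as follows. This is a simplified, parameter-free variant (mean aggregation with a fixed 50/50 mix of a node's own features and its neighbors' features); real GNN layers apply learned weights and a nonlinearity. The graph, feature values, and function name are hypothetical.

```python
def message_passing_step(features, neighbors):
    """One round: each node averages its neighbors' features and mixes in its own."""
    updated = {}
    for node, own in features.items():
        nbrs = neighbors[node]
        if nbrs:
            agg = [sum(features[n][c] for n in nbrs) / len(nbrs) for c in range(len(own))]
        else:
            agg = [0.0] * len(own)  # isolated node: nothing to aggregate
        updated[node] = [0.5 * o + 0.5 * a for o, a in zip(own, agg)]
    return updated

# Path graph a - b - c - d; only node "a" starts with a nonzero signal.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
features = {"a": [1.0], "b": [0.0], "c": [0.0], "d": [0.0]}

one_hop = message_passing_step(features, neighbors)
two_hop = message_passing_step(one_hop, neighbors)
```

After one layer the signal from `a` has reached only `b`; after two layers it has reached `c` but not yet `d`, which illustrates how stacking layers widens each node's receptive neighborhood.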

Training dynamics and hands-on lessons

A well-chosen architecture is only the first step. The success of all neural networks hinges on the complex dynamics of the training process. Mastering this process requires a deep understanding of initialization, optimization, and regularization.

Initialization, optimization algorithms, and learning rate strategies

Training a deep neural network is a non-convex optimization problem, and where you start matters. Weight initialization schemes such as Xavier/Glorot (commonly paired with tanh or sigmoid activations) and He initialization (commonly paired with ReLU) scale the initial weights by layer width so that activations and gradients neither vanish nor explode in the early stages of training. From there, an optimization algorithm navigates the loss landscape. While Stochastic Gradient Descent (SGD) with momentum remains a strong baseline, adaptive optimizers like Adam and its variants are widely used for their rapid convergence.
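
As a rough illustration, the two initialization schemes differ mainly in how they scale random weights by layer width. The sketch below uses the standard formulas (a uniform limit of sqrt(6 / (fan_in + fan_out)) for Xavier/Glorot and a standard deviation of sqrt(2 / fan_in) for He) in plain Python; the function names and layer sizes are assumptions, and in practice a framework's built-in initializers would be used.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng):
    """Xavier/Glorot uniform: weights drawn from U(-limit, limit)."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]

def he_normal(fan_in, fan_out, rng):
    """He normal: weights drawn from N(0, 2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

rng = random.Random(0)  # fixed seed so the sketch is reproducible
w_xavier = xavier_uniform(256, 128, rng)  # hypothetical 256 -> 128 layer
w_he = he_normal(256, 128, rng)
```

Wider layers receive smaller initial weights under both schemes, which keeps the variance of each layer's output roughly independent of its width.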

The learning rate is often the single most important hyperparameter. A rate that is too high can cause training to diverge, while one that is too low can lead to painfully slow convergence or leave the model stuck in a suboptimal region of the loss landscape. Current practice favors dynamic learning rate schedules, such as linear warm-up followed by cosine annealing, or cosine annealing with warm restarts, which adjust the learning rate during training to balance early exploration of the loss landscape with stable convergence later on.
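
One such dynamic schedule, linear warm-up followed by cosine decay, can be sketched in a few lines. The function name, step counts, and base rate below are illustrative assumptions, not recommended values.

```python
import math

def lr_at(step, total_steps, warmup_steps, base_lr, min_lr=0.0):
    """Learning rate at a given step: linear warm-up, then cosine decay to min_lr."""
    if step < warmup_steps:
        # Ramp up linearly so early updates are small and stable.
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Hypothetical run: 100 steps, 10 of them warm-up, peak learning rate 0.1.
schedule = [lr_at(s, total_steps=100, warmup_steps=10, base_lr=0.1) for s in range(100)]
```

The rate climbs to its peak during warm-up and then decays smoothly toward zero, spending the early phase on larger exploratory steps and the late phase on small refinements.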

Regularization, augmentation, and when they fail

Regularization techniques are essential for preventing a model from overfitting to the training data, thereby improving its ability to generalize to unseen examples. Common methods include:

- L1 and L2 Regularization: Adding a penalty to the loss function based on the magnitude of the model’s weights, discouraging overly complex models.

- Dropout: Randomly setting a fraction of neuron activations to zero during training, which forces the network to learn more robust and redundant features.

- Data Augmentation: Artificially expanding the training dataset by creating modified copies of existing data (e.g., rotating or cropping images).

However, these methods are not foolproof. Aggressive data augmentation can fail by altering the very features that define the label (e.g., rotating a “6” by 180 degrees turns it into a “9”). Similarly, excessive dropout can hinder a model’s ability to converge, especially in smaller neural networks. The key is to apply these techniques judiciously and to validate their impact on a held-out validation set.
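
Two of the methods above are simple enough to sketch directly. The code below shows an L2 penalty term and "inverted" dropout, in which surviving activations are rescaled during training so that no adjustment is needed at inference time. The function names and values are illustrative assumptions.

```python
import random

def l2_penalty(weights, lam):
    """L2 regularization term added to the loss: lam * sum of squared weights."""
    return lam * sum(w * w for w in weights)

def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each activation with probability p, rescale the rest."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training:
        return list(activations)  # dropout is disabled at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
acts = [1.0] * 10000
dropped = dropout(acts, p=0.5, rng=rng)
penalty = l2_penalty([1.0, -2.0], lam=0.1)
```

Because of the rescaling, the mean activation stays close to its original value even though roughly half of the units are zeroed in each pass.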

Interpreting and debugging models

As neural networks are increasingly deployed in high-stakes applications, understanding their decision-making process is no longer a luxury but a necessity. Model interpretability is crucial for debugging, building trust, and ensuring fairness.

Saliency maps, feature visualization, and counterfactuals

Several techniques have emerged to peer inside the “black box” of complex neural networks:

- Saliency Maps: These techniques, such as Grad-CAM, highlight the parts of an input (e.g., pixels in an image) that were most influential in the model’s final decision. They help answer the question: “What was the model looking at?”

- Feature Visualization: This method works by generating synthetic inputs that maximally activate a specific neuron or layer. It helps us understand what kind of concepts or patterns a particular part of the network has learned to detect.

- Counterfactual Explanations: A more advanced approach that seeks to answer: “What is the smallest change to the input that would change the model’s prediction?” This provides intuitive, case-based explanations for a model’s behavior, which is invaluable for debugging erroneous predictions.
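
The idea behind saliency can be illustrated with a very small sketch. It does not implement Grad-CAM; it estimates plain input-gradient saliency by finite differences on a hypothetical three-feature logistic model, scoring each input by how strongly a small perturbation changes the prediction.

```python
import math

def model(x, weights, bias):
    """Toy logistic model standing in for a trained network (hypothetical)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def saliency(x, predict, eps=1e-5):
    """Absolute central-difference gradient of the prediction w.r.t. each input."""
    scores = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        scores.append(abs(predict(up) - predict(down)) / (2 * eps))
    return scores

weights, bias = [3.0, -0.5, 0.0], 0.1  # assumed parameters for illustration
x = [0.2, 0.4, 0.9]
scores = saliency(x, lambda v: model(v, weights, bias))
most_influential = max(range(len(scores)), key=scores.__getitem__)
```

The feature with the largest weight receives the highest score and the feature with zero weight receives none, even though it has the largest input value. For deep networks the same quantity is normally obtained with automatic differentiation rather than finite differences.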

Robust deployment checklist and monitoring

Moving a neural network from a research environment to production requires a disciplined engineering approach focused on performance, reliability, and maintenance.

Performance profiling, latency planning, and resource budgeting

Before deployment, a model must be rigorously profiled. Key metrics include:

- Inference Latency: How long does it take to get a prediction for a single input? This is critical for real-time applications.

- Throughput: How many predictions can the model make per second? This is vital for batch processing systems.

- Resource Consumption: What are the memory (RAM/VRAM) and computational (CPU/GPU) requirements?

These metrics inform resource budgeting and hardware selection. Techniques like quantization (reducing numerical precision) and pruning (removing unimportant weights) can significantly reduce model size and improve latency, often with only a small loss in accuracy, which should be verified on held-out data after each compression step.
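
As a rough sketch of what quantization does, the code below applies symmetric per-tensor int8 quantization to a handful of weights and measures the round-trip error. The weight values and function names are assumptions; production toolchains add calibration, per-channel scales, and quantized kernels.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] with one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in q]

weights = [0.82, -1.27, 0.003, 0.5, -0.41]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, and the reconstruction error is bounded by half the scale. Whether that error is acceptable depends on the model and should be checked against accuracy on held-out data.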

Data drift detection, retraining triggers, and versioning

A deployed model’s performance is not static; it can degrade over time as the real-world data it encounters diverges from the training data. This phenomenon is called data drift. A robust monitoring strategy should include:

- Automated Drift Detection: Statistical methods to monitor the distributions of input features and model predictions to detect shifts.

- Retraining Triggers: Pre-defined thresholds for performance degradation or data drift that automatically trigger a model retraining pipeline.

- Model Versioning: A system to track all deployed models, their training data, and performance metrics. This is essential for reproducibility and for rolling back to a previous version if a new model underperforms.
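
One simple statistical check for drift on a single numeric feature is the two-sample Kolmogorov-Smirnov statistic, the largest gap between the empirical distributions of a reference window and a current window. The sketch below implements it in plain Python; the threshold, window sizes, and synthetic data are hypothetical and would need tuning per feature.

```python
import bisect
import random

def ks_statistic(reference, current):
    """Two-sample KS statistic: maximum distance between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    d = 0.0
    for x in ref + cur:
        cdf_ref = bisect.bisect_right(ref, x) / len(ref)
        cdf_cur = bisect.bisect_right(cur, x) / len(cur)
        d = max(d, abs(cdf_ref - cdf_cur))
    return d

DRIFT_THRESHOLD = 0.2  # hypothetical retraining trigger

rng = random.Random(0)
reference = [rng.gauss(0.0, 1.0) for _ in range(1000)]  # training-time feature values
same = [rng.gauss(0.0, 1.0) for _ in range(1000)]       # production data, no drift
shifted = [rng.gauss(1.0, 1.0) for _ in range(1000)]    # production data, mean shifted

drift_same = ks_statistic(reference, same) > DRIFT_THRESHOLD
drift_shifted = ks_statistic(reference, shifted) > DRIFT_THRESHOLD
```

In a monitoring pipeline, a check like this would run per feature on a schedule, with a breach of the threshold raising an alert or starting the retraining workflow.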

Responsible design and governance considerations

The power of neural networks comes with significant responsibility. Practitioners and leaders must embed ethical considerations throughout the model lifecycle. This involves actively auditing training data for biases that could lead to unfair or discriminatory outcomes. It requires a commitment to transparency, using interpretability tools to explain model behavior to stakeholders. Governance frameworks should be established to ensure accountability, outlining processes for model validation, risk assessment, and human oversight, especially in sensitive domains like healthcare and finance. Responsible design is not an afterthought but a foundational component of building trustworthy AI systems.

Short reproducible case studies and annotated results

Consider a practical case: **predictive maintenance for industrial machinery using sensor data.**

- Problem: Predict equipment failure based on time-series data from multiple sensors (vibration, temperature, etc.).

- Architecture Choice: A Recurrent Neural Network (RNN) or a 1D-CNN would be a strong choice. An RNN is biased towards temporal sequences, while a 1D-CNN can effectively extract patterns from windows of time. A Transformer could also be used but may require more data.

- Training Consideration: The data is likely imbalanced (few failure events). A key strategy is to use a weighted loss function that penalizes misclassifying the rare “failure” class more heavily. Data augmentation could involve adding small amounts of noise to sensor readings to create more robust training examples.

- Interpretability Check: After training, use a feature-attribution method like SHAP (SHapley Additive exPlanations) to identify which sensor readings contribute most to failure predictions. This can provide valuable engineering insights beyond just the prediction.

- Deployment Monitoring: Implement a system to monitor the statistical distribution of incoming sensor data. A significant shift could indicate a change in operating conditions or sensor malfunction (data drift), triggering an alert to re-evaluate the model.
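
The weighted loss mentioned under the training consideration can be sketched as a class-weighted binary cross-entropy. The labels, predicted probabilities, and the inverse-frequency weighting rule below are illustrative assumptions, not results from a real dataset.

```python
import math

def weighted_bce(y_true, y_prob, pos_weight):
    """Binary cross-entropy where errors on the positive class count pos_weight times."""
    eps = 1e-12  # clamp probabilities to avoid log(0)
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)

# Hypothetical window labels: nine healthy readings and one failure.
labels = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
pos_weight = labels.count(0) / labels.count(1)  # inverse class frequency

# A model that predicts "healthy" everywhere and misses the failure.
missed_failure = [0.1] * 10

loss_unweighted = weighted_bce(labels, missed_failure, pos_weight=1.0)
loss_weighted = weighted_bce(labels, missed_failure, pos_weight=pos_weight)
```

With the weighting in place, the missed failure dominates the loss, so the optimizer can no longer achieve a low loss by ignoring the rare class.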

Further reading and curated references

This whitepaper serves as a practical guide, but the field of neural networks is vast and rapidly evolving. For those seeking to deepen their understanding, we recommend the following foundational resources as starting points for further exploration:

- Deep Learning (Review Article): A comprehensive overview of the field by pioneers Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. An excellent starting point for understanding the broader context.

- Attention Is All You Need (Research Paper): The original paper that introduced the Transformer architecture, which has since become the dominant architecture for a wide range of tasks.

- Learning representations by back-propagating errors (Research Paper): A foundational paper detailing the backpropagation algorithm that enables the training of deep, multi-layer neural networks.

Continuing education through new research papers, open-source project documentation (e.g., PyTorch, TensorFlow), and community discussions is essential for staying current in this dynamic field.