A Practical Guide to Reinforcement Learning: From Theory to Reproducible Pipelines

Table of Contents

- Reframing objectives for reinforcement learning

- Core concepts and intuitive metaphors

- Common learning algorithms demystified

- Designing reproducible experiments

- Safety and ethical checkpoints for RL

- Computational budgeting and scaling strategies

- Case studies in constrained environments

- Practical code patterns and reproducible pipelines

- Pitfalls, debugging heuristics, and validation

- Roadmap for continued learning and research

Reinforcement Learning (RL) is a machine learning paradigm in which autonomous agents learn, through trial and error, to make decisions that maximize long-term reward. Unlike supervised learning, which relies on labeled examples, Reinforcement Learning lets an agent learn from a stream of rewards and penalties that it receives by interacting directly with its environment. This guide moves beyond the hype of superhuman game-playing bots to provide a practical roadmap for early-career practitioners. We focus on building robust, reproducible, and ethically sound RL systems, particularly in real-world settings where data and computational resources are finite.

Reframing objectives for reinforcement learning

The initial wave of excitement around Reinforcement Learning was fueled by impressive feats in complex games like Go and StarCraft. While these are monumental achievements, their objectives are clearly specified: win the game or maximize the score. Real-world applications, however, demand a more nuanced approach. Instead of simply aiming for the highest possible reward, we must reframe our objectives to account for critical constraints.

These constraints can include:

- Safety: Ensuring a robotic arm does not damage itself or its surroundings.

- Efficiency: Minimizing energy consumption in a data center cooling system.

- Fairness: Preventing a recommendation system from developing harmful biases.

- Interpretability: Understanding why a system made a particular decision.

The goal of modern Reinforcement Learning is not just to create a high-performing agent, but one that is also reliable, safe, and efficient within a predefined operational boundary. This shift requires us to build reward functions that balance performance with these real-world costs and constraints.

Core concepts and intuitive metaphors

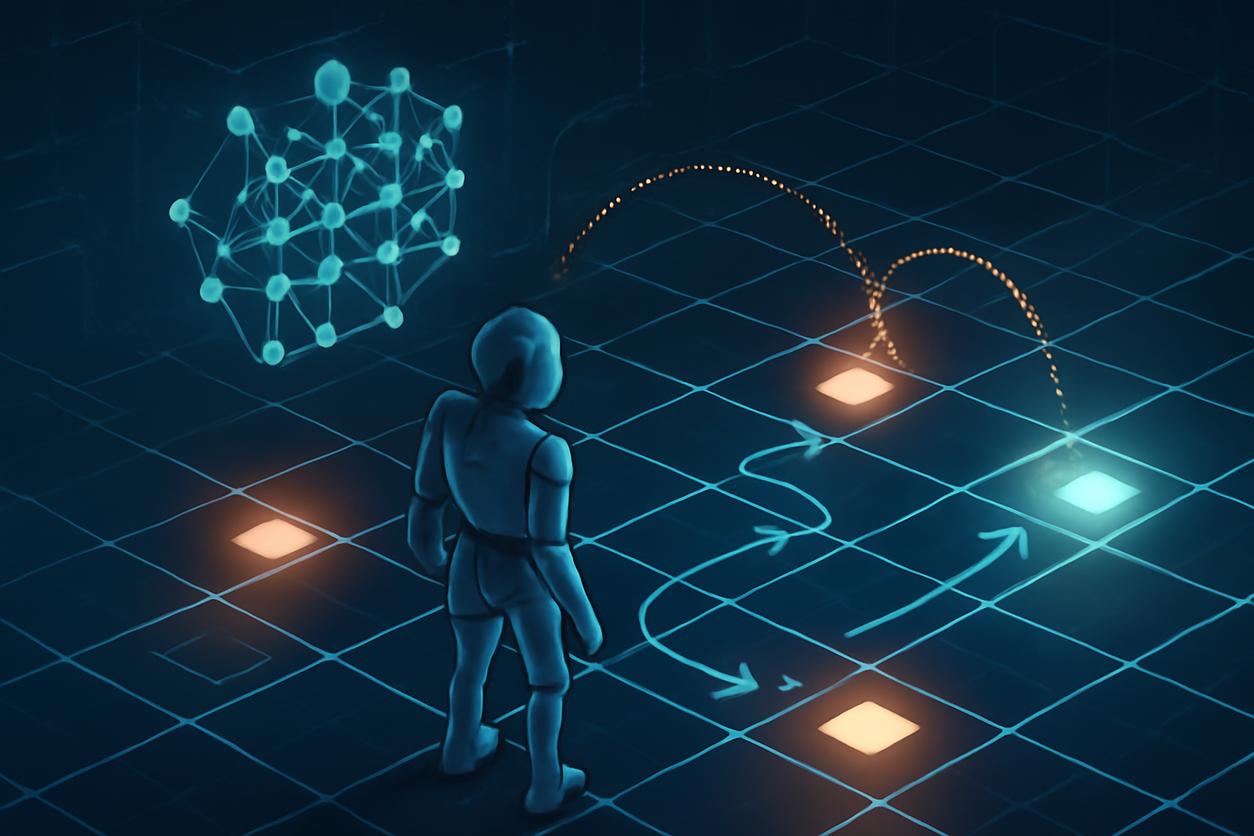

At its heart, Reinforcement Learning can be understood by thinking about how you might train a dog. The dog is the agent, its surroundings are the environment, and you are the trainer providing rewards (a treat) for good behavior (sitting). Over time, the dog learns a policy (a strategy) to maximize its treats. This simple metaphor maps directly to the core components of RL.

Agents, environments, rewards, and policies

- Agent: The learner or decision-maker. This could be a piece of software controlling a robot, a trading algorithm, or a system personalizing a user interface. The agent observes the state of the world and takes actions.

- Environment: The world in which the agent operates. It takes the agent’s action and returns a new state and a reward. The environment defines the “rules of the game.”

- Reward: A numerical feedback signal that indicates how well the agent is doing. The agent’s objective is to maximize the expected cumulative reward it receives over time, usually with a discount factor that weights near-term rewards more heavily than distant ones. A positive reward is like a treat; a negative reward is a penalty.

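- State: A snapshot of the environment at a particular moment. Ideally, it contains all the information the agent needs to make a decision (the Markov property); in many real problems, the agent only receives a partial observation of the true state.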

- Policy (π): The agent’s strategy or “brain.” It is a mapping from states to actions, defining what action the agent will take in any given situation. A policy can be deterministic (always take the same action in a state) or stochastic (a probability distribution over actions).
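To make the reward and policy definitions concrete, here is a minimal sketch in plain Python. The cumulative reward is usually computed as the discounted return `G = r1 + gamma*r2 + gamma^2*r3 + ...`, where the discount factor `gamma` is a value you choose. The two toy policies reuse the dog-training metaphor, and their states, actions, and probabilities are purely hypothetical.

```python
import random

def discounted_return(rewards, gamma=0.99):
    """G_0 = r_1 + gamma*r_2 + gamma^2*r_3 + ... for one finished episode."""
    g = 0.0
    # Iterate backwards using the recursion G_t = r_{t+1} + gamma * G_{t+1}
    for r in reversed(rewards):
        g = r + gamma * g
    return g

# Hypothetical deterministic policy: always the same action in a given state.
deterministic_policy = {"hungry": "sit", "full": "lie_down"}

# Hypothetical stochastic policy: a probability distribution over actions per state.
stochastic_policy = {"hungry": {"sit": 0.8, "bark": 0.2}}

def sample_action(policy, state, rng):
    """Draw one action from a stochastic policy's distribution for `state`."""
    actions, probs = zip(*policy[state].items())
    return rng.choices(actions, weights=probs, k=1)[0]

print(discounted_return([0.0, 0.0, 1.0], gamma=0.5))  # 0.25: the treat is discounted twice
print(deterministic_policy["hungry"])                 # sit
print(sample_action(stochastic_policy, "hungry", random.Random(0)))  # "sit" or "bark"
```

Computing the return backwards lets each step reuse the already-discounted tail, so the cost is linear in the episode length.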

The interaction between these components forms a feedback loop: the agent takes an action based on the current state according to its policy, the environment responds with a new state and a reward, and the agent uses this feedback to update its policy. This is the fundamental cycle of Reinforcement Learning.
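The loop can be sketched with a tiny hypothetical environment: a five-cell corridor where the agent starts at the left end and earns a bonus for reaching the right end. The `reset()`/`step()` shape loosely mirrors common RL libraries but is simplified here (no `info` dictionary, a single `done` flag). The policy update is deliberately left out, because how that update works is what separates the algorithm families.

```python
import random

class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1, goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        """Start a new episode and return the initial state."""
        self.position = 0
        return self.position

    def step(self, action):
        """Apply action -1 (left) or +1 (right); walls keep the agent inside the corridor."""
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # small cost per step, bonus at the goal
        return self.position, reward, done

def run_episode(env, policy, max_steps=100):
    """One pass of the feedback loop: observe, act, receive reward and next state."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent acts according to its policy
        state, reward, done = env.step(action)  # environment responds
        total_reward += reward                  # a learning agent would update here
        if done:
            break
    return total_reward

def always_right(state):
    return +1

rng = random.Random(0)

def random_policy(state):
    return rng.choice([-1, +1])

print(run_episode(CorridorEnv(), always_right))   # reaches the goal in 4 steps
print(run_episode(CorridorEnv(), random_policy))  # usually wanders first (or times out)
```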

Common learning algorithms demystified

RL algorithms can be broadly categorized into three families. Understanding their core differences is key to selecting the right tool for a given problem.

Value methods, policy gradients, and model-based approaches

- Value-Based Methods: These algorithms learn a value function, which estimates the expected future reward from being in a particular state (or taking a particular action in a state). The policy is then derived implicitly, typically by choosing the action with the highest estimated action value, with occasional random exploration (such as ε-greedy) during training. The best-known examples are Q-learning and its deep learning extension, the Deep Q-Network (DQN). The seminal DQN paper showed that this approach could reach human-level performance on many Atari games.

- Policy-Gradient Methods: These algorithms optimize the policy directly rather than deriving it from a value function. They parameterize the policy (e.g., with a neural network) and adjust its parameters in the direction that increases expected reward. REINFORCE, A2C, and PPO fall into this category; A2C and PPO are actor-critic methods that additionally learn a value function (the critic) to reduce the variance of the policy updates. Policy-gradient methods are often preferred for continuous action spaces, which are common in robotics.

- Model-Based Methods: This approach involves learning (or being given) a model of the environment’s dynamics. The agent uses this model to predict how the environment will respond to its actions, allowing it to “plan” ahead before acting. This can make learning much more sample-efficient but introduces a new challenge: if the model is inaccurate, its errors can compound over the planning horizon and the resulting policy may be suboptimal.
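As a concrete instance of the value-based family, here is a minimal tabular Q-learning sketch on a hypothetical five-state corridor. It is not DQN: the Q-function is a small table rather than a neural network. The reward values, learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are illustrative assumptions, not tuned settings.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, goal at state 4
ACTIONS = (-1, +1)  # action index 0 = move left, 1 = move right

def step(state, action):
    """Deterministic toy dynamics: -0.1 per step, +1.0 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else -0.1), done

def greedy_action(q_row):
    """Index of the highest-valued action (ties go to the lower index)."""
    return max(range(len(q_row)), key=lambda i: q_row[i])

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]  # Q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = greedy_action(q[state])
            next_state, reward, done = step(state, ACTIONS[a])
            # Target r + gamma * max_a' Q(s', a'); no bootstrapping from a terminal state
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q

q = q_learning()
# The policy is implicit: act greedily with respect to the learned Q-table
greedy = [ACTIONS[greedy_action(q[s])] for s in range(N_STATES - 1)]
print(greedy)  # [1, 1, 1, 1]: move right from every non-goal state
```

No policy is stored separately; it is read off the table with an argmax. DQN keeps the same target but replaces the table with a network, plus stabilizing techniques such as experience replay and a target network.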

| Algorithm Type | What it Learns | Key Advantage | Common Use Case |
|---|---|---|---|
| Value-Based | Value of states or state-action pairs | Often more sample-efficient in discrete action spaces | Games, simple control tasks |
| Policy-Gradient | A direct mapping from states to actions (policy) | Effective in continuous or high-dimensional action spaces | Robotics, complex control |
| Model-Based | A model of the environment’s dynamics | High sample efficiency; allows for planning | Planning problems where data is scarce |
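The smallest illustration of the policy-gradient row is REINFORCE on a one-step problem: a hypothetical two-armed bandit where each episode is a single action. The sketch uses a softmax policy over action preferences `theta` and a running-average reward as a baseline. The arm rewards, noise level, and learning rates are assumptions chosen for illustration. PPO and A2C build on the same score-function idea but add a learned critic and further machinery.

```python
import math
import random

def softmax(prefs):
    """Convert action preferences into probabilities (shifted for numerical stability)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_bandit(arm_rewards, steps=2000, lr=0.1, seed=0):
    """REINFORCE on a one-step task: each 'episode' is a single arm pull."""
    rng = random.Random(seed)
    theta = [0.0] * len(arm_rewards)  # policy parameters (action preferences)
    baseline = 0.0                    # running average reward, reduces variance
    for _ in range(steps):
        probs = softmax(theta)
        a = rng.choices(range(len(theta)), weights=probs, k=1)[0]
        reward = arm_rewards[a] + rng.gauss(0.0, 0.1)  # noisy reward
        advantage = reward - baseline
        # For a softmax policy, d log pi(a) / d theta[b] = 1[a == b] - pi(b)
        for b in range(len(theta)):
            theta[b] += lr * advantage * ((1.0 if b == a else 0.0) - probs[b])
        baseline += 0.05 * (reward - baseline)
    return softmax(theta)

probs = reinforce_bandit([0.2, 1.0])
print(probs)  # probability mass shifts toward the second, better arm
```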

Designing reproducible experiments

One of the biggest challenges in Reinforcement Learning is reproducibility. Because agents and environments are stochastic and algorithms are sensitive to hyperparameters, two runs of the same code that differ only in their random seed can yield vastly different results. Establishing a rigorous experimental workflow is non-negotiable for any serious project.
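Much of this variance comes from uncontrolled randomness. A simple habit is to give every run an explicit seed and draw all randomness from a generator created from that seed, as in this sketch, where `noisy_episode_return` is a hypothetical stand-in for a full training run. If you use NumPy or PyTorch, seed those generators as well (for example with `numpy.random.default_rng(seed)` and `torch.manual_seed(seed)`), and be aware that some GPU operations can remain nondeterministic even with fixed seeds.

```python
import random

def noisy_episode_return(seed, steps=50):
    """Hypothetical stand-in for a training run whose outcome depends only on `seed`."""
    rng = random.Random(seed)  # dedicated generator instead of the global random state
    total = 0.0
    for _ in range(steps):
        action = rng.choice([0, 1])            # stochastic policy
        total += action + rng.gauss(0.0, 1.0)  # stochastic environment noise
    return total

# The same seed reproduces a run exactly.
assert noisy_episode_return(seed=42) == noisy_episode_return(seed=42)

# Different seeds show how much results vary from run to run.
results = [noisy_episode_return(seed=s) for s in range(5)]
print([round(r, 2) for r in results])
```

Logging the seed alongside each result makes any individual run repeatable later.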

Evaluation metrics, baselines, and ablation studies

- Evaluation Metrics: The primary metric is typically the cumulative reward per episode, averaged over multiple independent runs (using different random seeds) and reported with a measure of spread, such as the standard deviation or a confidence interval. It is crucial to plot learning curves showing performance over training episodes, not just the final score. Other important metrics might include training time, sample efficiency, and constraint violations.

- Baselines: You can only know if your agent is performing well by comparing it to something else. Always compare your results against established baselines. These could be simpler heuristic policies, random agents, or implementations of well-known RL algorithms.

- Ablation Studies: To understand what components of your system are contributing to its performance, you must conduct ablation studies. This involves systematically removing or simplifying parts of your agent or algorithm to see how performance is affected. This process is critical for justifying design choices and gaining insights.
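The first two practices can be combined in a few lines: evaluate each policy under several seeds, then report the mean and the spread rather than a single number. In this sketch, the three-armed bandit, its arm means, and the `trained_agent` stand-in are all hypothetical. An ablation would follow the same pattern, comparing the full agent against a variant with one component removed.

```python
import random
import statistics

ARM_MEANS = [0.1, 0.5, 0.9]  # hypothetical 3-armed bandit environment

def evaluate(policy, seed, episodes=20):
    """Average return of `policy` over several episodes under one seed."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(episodes):
        arm = policy(rng)
        total += ARM_MEANS[arm] + rng.gauss(0.0, 0.2)  # noisy reward
    return total / episodes

def random_baseline(rng):
    return rng.randrange(len(ARM_MEANS))

def trained_agent(_rng):
    return 2  # hypothetical stand-in for a learned policy that picks the best arm

def summarize(name, policy, seeds=range(5)):
    """Evaluate under each seed and report the mean and sample standard deviation."""
    scores = [evaluate(policy, s) for s in seeds]
    mean = statistics.mean(scores)
    std = statistics.stdev(scores)  # spread across seeds, not within one run
    print(f"{name}: {mean:.3f} ± {std:.3f} over {len(scores)} seeds")
    return mean, std

baseline_stats = summarize("random baseline", random_baseline)
agent_stats = summarize("agent", trained_agent)
```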

Safety and ethical checkpoints for RL

When an RL agent interacts with the real world, safety becomes paramount. An agent trained in simulation may learn to exploit loopholes in its reward function (“reward hacking”) or take unexpected, dangerous actions when deployed. It is our responsibility to build guardrails.

Key checkpoints include:

- Constrained RL: Formulate the problem so the agent must optimize rewards while satisfying certain safety constraints. For example, a robot’s actions must never exceed a certain velocity.

- Reward Shaping: Carefully design the reward function to penalize undesirable behaviors. A poorly designed reward can lead to unintended consequences, such as an agent learning to achieve a goal in a lazy or harmful way.

- Simulation-to-Reality Gap: Before deploying an agent in the real world, test it extensively in a high-fidelity simulator. Understand the gap between simulation and reality and plan for robust transfer learning.

- Human-in-the-Loop: For high-stakes applications, design systems where a human can oversee, interrupt, or correct the agent’s behavior.
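One common way to implement the constrained-RL checkpoint is Lagrangian relaxation: the agent maximizes `reward - lambda * cost`, and the multiplier `lambda` is raised whenever the average cost exceeds the allowed limit and lowered (never below zero) otherwise. The sketch shows only that bookkeeping, with a hypothetical per-step cost for exceeding a velocity limit. The policy update that consumes the penalized reward is omitted, and the limit, learning rate, and observed costs are assumptions for illustration. This approach enforces the constraint on average rather than as a hard guarantee; strict limits are usually added with a separate safety layer, such as clipping commands before they reach the actuators.

```python
def penalized_reward(reward, cost, lam):
    """Reward the policy optimizer actually sees: r - lambda * c."""
    return reward - lam * cost

def update_multiplier(lam, avg_episode_cost, cost_limit, lr=0.05):
    """Dual ascent: raise lambda when the constraint is violated, lower it (not below 0) otherwise."""
    return max(0.0, lam + lr * (avg_episode_cost - cost_limit))

def velocity_cost(velocity, max_velocity=1.0):
    """Hypothetical per-step cost: 1 whenever the robot exceeds its velocity limit."""
    return 1.0 if abs(velocity) > max_velocity else 0.0

lam = 0.0
# Hypothetical average costs observed after successive training phases.
for avg_cost in [3.0, 2.0, 0.5, 0.0]:
    lam = update_multiplier(lam, avg_cost, cost_limit=1.0)
    print(round(lam, 3))
```

The multiplier grows while violations are frequent and relaxes once the agent stays within the limit, so the penalty adapts instead of being hand-tuned.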

Computational budgeting and scaling strategies

Training state-of-the-art Reinforcement Learning models can be computationally expensive. For many teams and projects, operating within a fixed computational budget is a primary constraint. This requires smart strategies for development and scaling.

Consider these approaches:

- Start Simple: Begin with the simplest environment and algorithm that can address your problem. Resist the urge to use a complex, deep RL model when a simpler method like Q-learning on a discretized state space might suffice.

- Prioritize Sample Efficiency: In many real-world problems, collecting data (agent-environment interactions) is the main bottleneck. Model-based or offline RL techniques can be highly valuable as they are designed to learn from a fixed dataset or a limited number of interactions.

- Leverage Pre-trained Models: Just as in computer vision and NLP, transfer learning is becoming more viable in RL. Using models pre-trained on similar tasks can significantly speed up learning on your specific problem.
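The “start simple” advice often means turning a continuous state into a small set of discrete cells so that a plain Q-table can be used. Here is a minimal sketch, assuming a CartPole-like state with a pole angle and a cart velocity; the ranges and bin counts are hypothetical and would need tuning for a real problem.

```python
def make_discretizer(low, high, bins):
    """Return a function mapping a value in [low, high] to a bucket index 0..bins-1."""
    width = (high - low) / bins

    def discretize(x):
        index = int((x - low) // width)
        # Clamp so out-of-range readings land in the edge buckets
        return min(max(index, 0), bins - 1)

    return discretize

# Hypothetical ranges for a CartPole-like state: pole angle (rad) and cart velocity (m/s)
angle_bin = make_discretizer(-0.21, 0.21, bins=6)
velocity_bin = make_discretizer(-2.0, 2.0, bins=4)

def discretize_state(angle, velocity):
    """Combine per-dimension buckets into a tuple usable as a Q-table key."""
    return (angle_bin(angle), velocity_bin(velocity))

q_table = {}  # maps (angle_bucket, velocity_bucket) -> list of action values
key = discretize_state(0.01, 0.5)
q_table.setdefault(key, [0.0, 0.0])
print(key)  # (3, 2)
```

With 6 × 4 = 24 cells and two actions, the whole value function fits in 48 numbers, which is easy to inspect and debug before reaching for a neural network.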

Case studies in constrained environments

The principles of resource-constrained Reinforcement Learning are not just theoretical. They are being applied across various domains.

Simulated robotics, resource-limited control, and personalization

- Simulated Robotics: Training robotic arms to perform tasks like grasping or assembly. The constraints are physical (joint limits, motor torque) and safety-critical. Reproducibility is key to ensuring that a policy trained in one simulation will work in another, and eventually, in reality.

- Resource-Limited Control: Optimizing HVAC systems in large buildings. The agent must minimize energy costs (the objective) while maintaining temperatures within a comfortable range (the constraint). The system must run on limited on-site hardware.

- Personalization: Adapting a mobile app’s user interface to maximize user engagement. The agent has a very limited “budget” of changes it can make without annoying the user and must learn quickly from sparse user feedback.
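For the HVAC case, one simple way to express “minimize energy cost while staying in the comfort range” is a reward that subtracts a weighted penalty for every degree outside the comfort band. The sketch is hypothetical throughout: the comfort band, electricity price, and `comfort_weight` are illustrative. A constrained formulation could instead treat the comfort band as a separate cost rather than folding it into the reward.

```python
def hvac_reward(energy_kwh, price_per_kwh, indoor_temp_c,
                comfort_low_c=20.0, comfort_high_c=24.0, comfort_weight=2.0):
    """Hypothetical HVAC reward: negative energy cost minus a penalty for leaving the comfort band."""
    energy_cost = energy_kwh * price_per_kwh
    # Degrees outside the comfort band (0 when the temperature is inside it)
    violation = max(0.0, comfort_low_c - indoor_temp_c, indoor_temp_c - comfort_high_c)
    return -energy_cost - comfort_weight * violation

print(hvac_reward(energy_kwh=10.0, price_per_kwh=0.25, indoor_temp_c=22.0))  # -2.5
print(hvac_reward(energy_kwh=2.0, price_per_kwh=0.25, indoor_temp_c=26.0))   # -4.5
```

The second call shows the trade-off the agent has to learn: using less energy saves 2.0 in cost but lets the room drift to 26 °C, and the comfort penalty outweighs the savings.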

Practical code patterns and reproducible pipelines

Moving from concept to code requires a structured approach. A typical RL pipeline involves a clear separation of the agent, the environment, and the training loop.

Example workflows in Python pseudocode

Here is a simplified pseudocode structure for a typical Reinforcement Learning training loop. This pattern is foundational to most RL libraries.

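```python
# Pseudocode: PolicyGradientAgent, CustomEnvironment, state_dim, and action_dim are
# placeholders for your own code. The environment is assumed to follow the
# Gymnasium-style reset()/step() conventions.

# 1. Initialization
SEED = 0
agent = PolicyGradientAgent(state_dim, action_dim, learning_rate=0.001)
env = CustomEnvironment()
total_episodes = 1000
all_rewards = []

# 2. Training loop
for episode in range(total_episodes):
    # Seed the environment once, on the first reset, so the run is repeatable
    state, info = env.reset(seed=SEED if episode == 0 else None)
    episode_reward = 0.0
    done = False

    # 3. Interaction loop
    while not done:
        # Agent selects an action based on its current policy
        action = agent.select_action(state)

        # Environment responds with the next state and a reward
        next_state, reward, terminated, truncated, info = env.step(action)
        # The episode ends at a terminal state or when a time limit truncates it
        done = terminated or truncated

        # Agent stores the experience; `terminated` (not `truncated`) marks a true end state
        agent.store_transition(state, action, reward, next_state, terminated)

        # Move to the next state and accumulate reward
        state = next_state
        episode_reward += reward

    # 4. Learning step: after the episode, update the policy from the stored experiences
    agent.learn()

    # Logging and monitoring
    all_rewards.append(episode_reward)
    print(f"Episode: {episode}, Total Reward: {episode_reward}")
```

Pitfalls, debugging heuristics, and validation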

Debugging Reinforcement Learning can be notoriously difficult because it is not always clear if the agent is failing to learn, the reward is misspecified, or there is a bug in the code. Some common heuristics include:

- Test on a Simpler Problem: Before tackling your complex custom environment, verify your algorithm on a classic benchmark problem like CartPole. If it cannot solve that, it is unlikely to solve yours.

- Check the Reward Signal: Plot the rewards you are receiving per timestep. Are they sparse? Are they scaled appropriately? An agent will not learn if the reward signal is broken.

- Monitor Policy Entropy: For policy-based methods, the entropy of the policy (a measure of its randomness) is a useful diagnostic. If entropy collapses to zero too early, the agent has stopped exploring and may be stuck in a local optimum.
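Policy entropy is cheap to compute for a discrete action distribution. The sketch measures it in nats and flags a policy whose entropy has fallen below a fraction of the maximum possible value (that of a uniform distribution). The 10% threshold is an arbitrary, hypothetical choice, and what counts as “too early” depends on where you are in training.

```python
import math

def policy_entropy(probs):
    """Shannon entropy (in nats) of a categorical action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def entropy_collapsed(probs, fraction=0.1):
    """Flag entropy below `fraction` of the maximum, log(num_actions) (hypothetical threshold)."""
    return policy_entropy(probs) < fraction * math.log(len(probs))

uniform = [0.25, 0.25, 0.25, 0.25]        # maximal exploration over 4 actions
collapsed = [0.997, 0.001, 0.001, 0.001]  # almost deterministic

print(round(policy_entropy(uniform), 3))  # 1.386, i.e. ln(4)
print(entropy_collapsed(uniform), entropy_collapsed(collapsed))  # False True
```

Logging this value every few updates, next to the learning curve, makes a premature collapse easy to spot.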

Roadmap for continued learning and research

The field of Reinforcement Learning is evolving rapidly. To stay current, it is essential to build a strong foundation and keep an eye on emerging trends. Research directions expected to mature from 2025 onwards will likely focus on improving generalization, enhancing safety, and reducing how much interaction data agents need, for example through offline RL and model-based methods.

For those looking to deepen their understanding, several resources are invaluable:

- Foundational Text: The canonical textbook for the field is “Reinforcement Learning: An Introduction” by Sutton and Barto, available for free online at Sutton and Barto’s website. It provides the theoretical underpinnings for nearly all modern RL.

- Broad Overview: For a high-level summary of concepts and history, the Reinforcement Learning Wikipedia page is an excellent starting point.

- Community and Libraries: Engage with open-source libraries like Stable Baselines3, Tianshou, or RLlib. They provide high-quality implementations of common algorithms, which are crucial for building baselines and conducting reproducible research.

By focusing on a structured, reproducible, and ethically-aware workflow, you can move beyond theoretical knowledge and begin to effectively apply the power of Reinforcement Learning to solve real, meaningful problems.